The Pursuit of Value Sharing: Aligning Financial Engines with Outcome

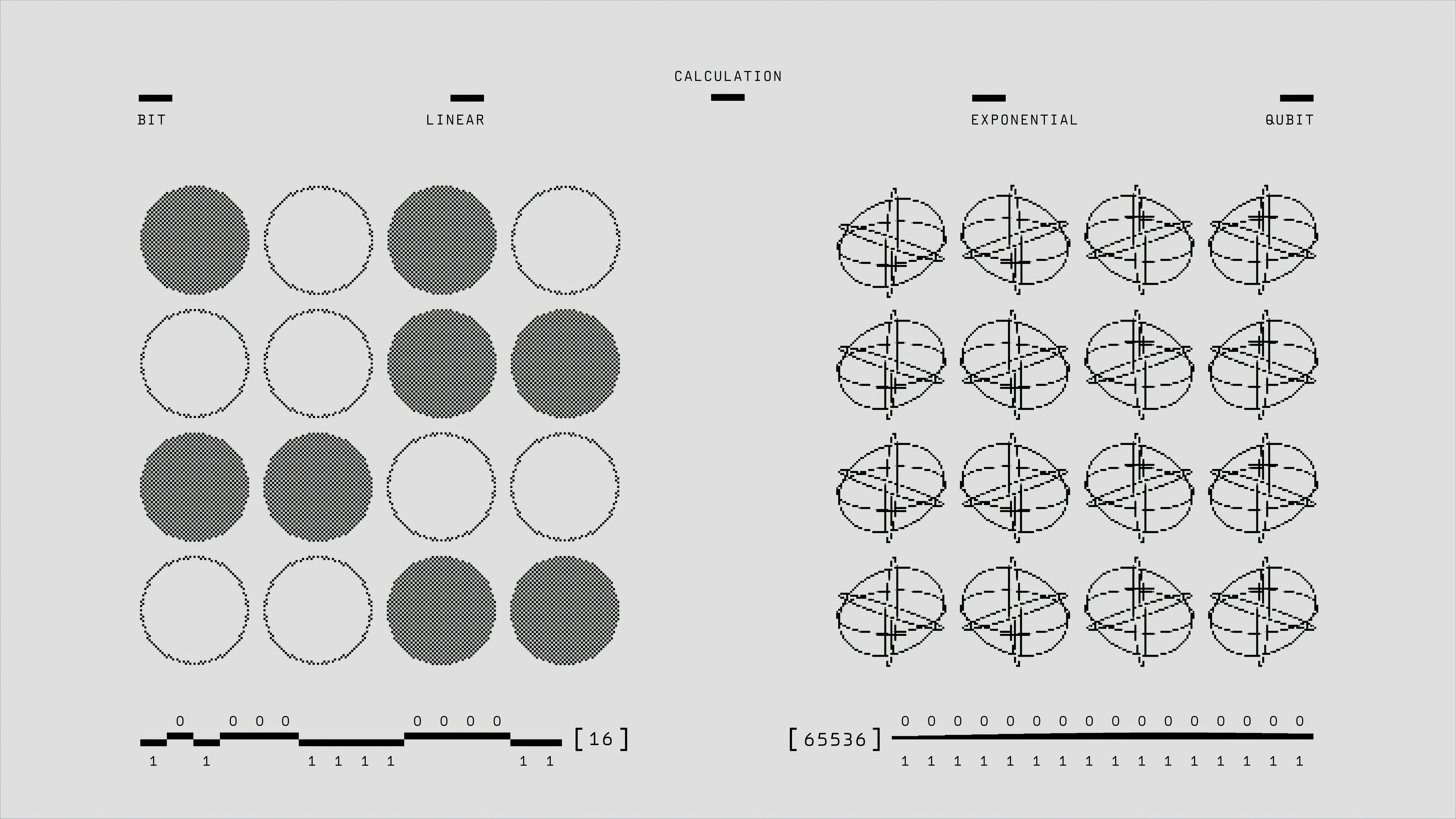

If access to compute defines the ceiling of what an organization can scale, then the business model *must* scale to meet the cost of that ceiling. This is where the pursuit of “value sharing” or “outcome-based revenue” moves from academic theory to operational necessity.

The logic is simple: if your platform enables a customer to realize ten-fold returns on their AI investment—whether through drug discovery, optimized financial modeling, or ten-fold productivity gains for their sales team—then the platform that made that return possible must secure a financial mechanism capable of supporting its own ten-fold-or-greater expenditure on the essential computational foundation.

From Subscription Fees to IP-Based Agreements

The era of simple, flat-rate subscription access to frontier models is giving way to more sophisticated monetization strategies designed to capture a sliver of the *value created*, not just the *compute consumed*. This echoes historical shifts on the internet, where initial access fees were replaced by models that captured value closer to the point of impact.

For organizations looking to sustain exponential infrastructure spend, revenue has to grow at least as fast as that spend. This means exploring models that can support the competition for the world’s most finite and expensive resource: top-tier compute capacity.

Here are the new economic models gaining traction in 2026:

Actionable Insight 2: Redefine “Value Delivered.” Stop thinking about AI monetization in terms of API calls or seat licenses alone. Start mapping customer-realized ROI directly against your internal compute amortization schedule. The pursuit of outcome-based revenue is fundamentally an effort to create a financial engine robust enough to compete for that necessary, expensive compute foundation.
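One way to make the mapping in Actionable Insight 2 concrete is a small calculation that sets customer-realized value against an annual compute cost. The sketch below is illustrative only: the straight-line amortization, the `Customer` type, the single flat `share_rate`, and all function names are assumptions introduced here, not a method described in this article.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    name: str
    realized_value: float  # hypothetical: annual value the customer attributes to the platform, in dollars


def straight_line_amortization(capex: float, years: int) -> float:
    """Annual compute cost, assuming capex is spread evenly over its useful life."""
    if years <= 0:
        raise ValueError("years must be positive")
    return capex / years


def outcome_revenue(customers: list[Customer], share_rate: float) -> float:
    """Revenue if the platform takes a flat share of each customer's realized value."""
    return sum(c.realized_value * share_rate for c in customers)


def break_even_share_rate(customers: list[Customer], annual_compute_cost: float) -> float:
    """Smallest flat share rate at which outcome revenue covers the annual compute cost."""
    total_value = sum(c.realized_value for c in customers)
    if total_value <= 0:
        raise ValueError("total realized value must be positive")
    return annual_compute_cost / total_value


# Made-up figures, for illustration only.
customers = [Customer("pharma", 20_000_000), Customer("fintech", 40_000_000)]
annual_cost = straight_line_amortization(capex=12_000_000, years=4)
print(f"annual compute cost: {annual_cost:,.0f}")
print(f"break-even share rate: {break_even_share_rate(customers, annual_cost):.2%}")
print(f"revenue at an 8% share: {outcome_revenue(customers, 0.08):,.0f}")
```

A real schedule would likely be front-loaded rather than straight-line, and share rates would vary by contract, but even this simple version shows whether a proposed value-sharing rate clears the compute bill.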

Navigating the Adoption Gap: From Potential to Profitability

While the investment in infrastructure has been explosive, the market is now focused on the “Adoption Gap.” The promise of AI is immense, but for many organizations, the real productivity payoff is still taking shape. For 2026, the priority for the entire ecosystem is closing the distance between what AI *can* do and how people, companies, and countries are *actually* using it day-to-day.

If AI’s economic benefits accrue unevenly, favoring those who dominate semiconductor manufacturing, cloud infrastructure, and frontier model development, then the business model must actively distribute those gains in a way that fuels further adoption, or risk reinforcing a “winner-take-most” system.

The ROI Pressure Cooker

Pressure is mounting across the board to demonstrate tangible returns. Reports indicate that a significant percentage of organizations that implemented generative AI saw little to no return on investment (ROI) despite substantial spending in 2025. Furthermore, CEOs are increasingly pressured to demonstrate clear AI returns, with many capital-intensive builds feeling the strain of debt-fueled infrastructure upgrades.

This environment demands a strategic focus on integration over mere capability:

For the consumer of AI services, the lesson is to prioritize implementation maturity. For the provider of AI services, the lesson is that your monetization structure must be a direct reflection of your customer’s ultimate success, or you risk underutilization and investor pushback.

Diversification Beyond Clouds: The Infrastructure Talent Layer

The complexity of managing AI compute today extends beyond negotiating cloud contracts. It involves understanding the entire digital infrastructure stack. The sheer scale of investment (hyperscalers are projecting a 3.5x increase in data center capacity by 2030) is straining the availability of skilled personnel to manage, maintain, and optimize these complex environments. You need architects who understand hardware acceleration, energy efficiency, and data locality.

Actionable Insight 3: Treat Infrastructure Strategy as a Multi-Year Contract Negotiation. The timeline-to-power for new data centers in the U.S. has ballooned from about one year to over seven years, intensifying strain and limiting supply growth. This means multi-year financial commitments for infrastructure must be secured now based on long-term, rather than immediate, demand signals. Review your long-term capital expenditure planning to account for this lead time.

Furthermore, as seen in the financial services sector, the source of AI funding is decentralizing, with non-IT departments (like Marketing and Finance) driving initiatives. Successful scaling now relies on a partnership between IT’s infrastructure understanding and the business unit’s direct revenue goals. This collaboration is crucial for ensuring that the immense cost of compute translates into prioritized, high-ROI projects.

Conclusion: The Financial Discipline of the Compute Century

As we stand here on January 23, 2026, the interplay between computational resources and financial scaling has never been clearer or more critical. Compute is the oil of the 21st century, but unlike oil, its extraction and refining process demands exponential, non-linear investment, and its supply is geographically and politically constrained.

The era of easy, cheap experimentation powered by readily available public cloud capacity is rapidly ending. The market is demanding demonstrable returns that validate the colossal AI investment risks being taken by the hyperscalers and the venture-backed startups alike.

Key Takeaways and Final Actionable Directives

The companies that win the next phase of the AI race won’t just be the best model builders; they will be the savviest resource managers. They will possess financial engines robust enough to compete for the world’s most finite and expensive resource, ensuring that the foundation of intelligence keeps getting stronger, faster, and more accessible for those who can truly monetize its impact.

What financial strategy are you implementing *this quarter* to ensure your compute ceiling supports your revenue ambitions? Share your thoughts on managing this crucial capex-to-revenue equation in the comments below—we need to learn from every successful strategy deployed in this competitive landscape.

To stay ahead of the curve on how infrastructure spending is reshaping the technology landscape, make sure you’re tracking reports on hyperscaler capex trends, which can offer leading indicators for supply availability. And for a broader view on how this tech investment is viewed across the economy, research on AI’s role in national GDP growth is essential context for your long-term planning.