Key Takeaways and Actionable Insights for the AI Ecosystem

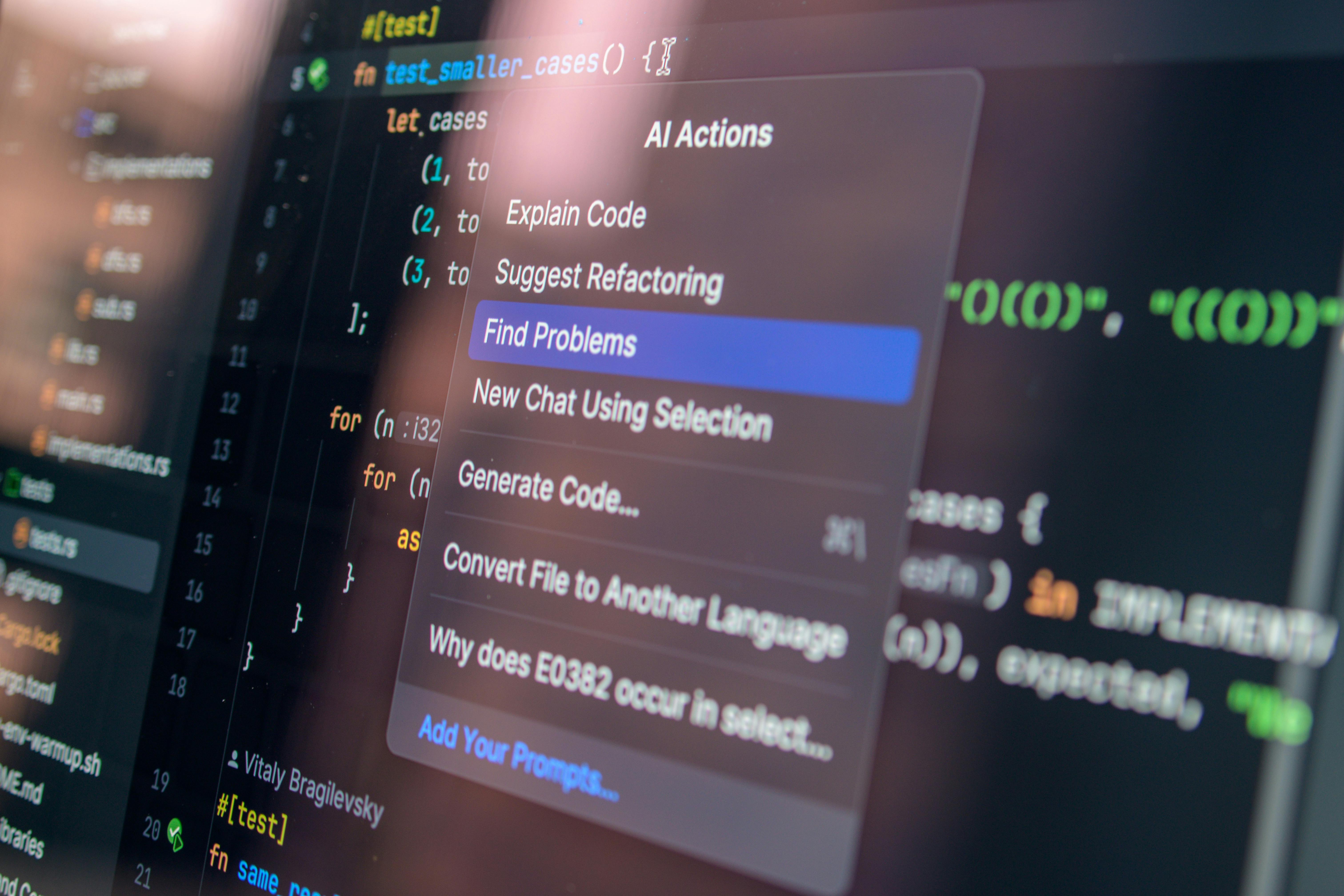

The current friction point between the AI development leader and its primary hardware supplier offers a vital lesson for every company building on cutting-edge AI: Inference is the new frontier of optimization. Here are the actionable insights derived from this complex relationship as of February 4, 2026:

- Diversify Your Inference Path: Relying on a single hardware vendor for *all* needs is a strategic risk. The market is moving toward specialized hardware (like chips emphasizing SRAM) for high-volume, low-latency tasks. Consider allocating a small, tactical percentage of your future compute budget to non-traditional accelerators.

- Understand the Investment Nuance: Be highly skeptical of announcements involving “up to \$X billion.” Always seek clarity on whether the commitment is a firm purchase order, a binding financing agreement, or a conditional letter of intent. A gradual, phased investment is the new reality.

- The Neocloud Advantage is Maturing: Specialized cloud providers like CoreWeave and Nscale offer a genuine, financed alternative to hyperscalers. For any company looking to deploy large-scale proprietary models, these neoclouds now represent a mature, competitive option with high performance on the latest specialized hardware.

- The Internal Chip Clock is Ticking: Major players are not bluffing about custom silicon. With OpenAI targeting mass production by late 2026, companies relying on third-party generalized chips for inference may find their cost structure rapidly outpaced by rivals with bespoke hardware. Start evaluating custom AI accelerator design now.

This entire dynamic, from the latency demands of a coding assistant to the \$20 billion investment being finalized, points to one conclusion: the pursuit of ever-smarter AI requires an equally relentless pursuit of faster, more efficient hardware. The reign of the singular silicon supplier is being challenged, not by a direct replacement in training, but by the inescapable *inference imperative*. What architectural shift are you prioritizing in your own operational stack for the second half of 2026? Let us know in the comments below.