From Content Commoditization to AI Commoditization: The New Value Chain of Digital Context

The technological landscape is perpetually defined by the removal of bottlenecks, a pattern observable across centuries of industrial evolution. The current, seismic shift driven by Generative Artificial Intelligence is not an exception; rather, it is an acceleration of the arc that digitized content industries first traced. As the foundational models themselves face inevitable commoditization pressure, the enduring strategic battle is crystallizing around the control of the user interface, the orchestration of complex processes, and the underlying physical infrastructure required to serve global intent in real time.

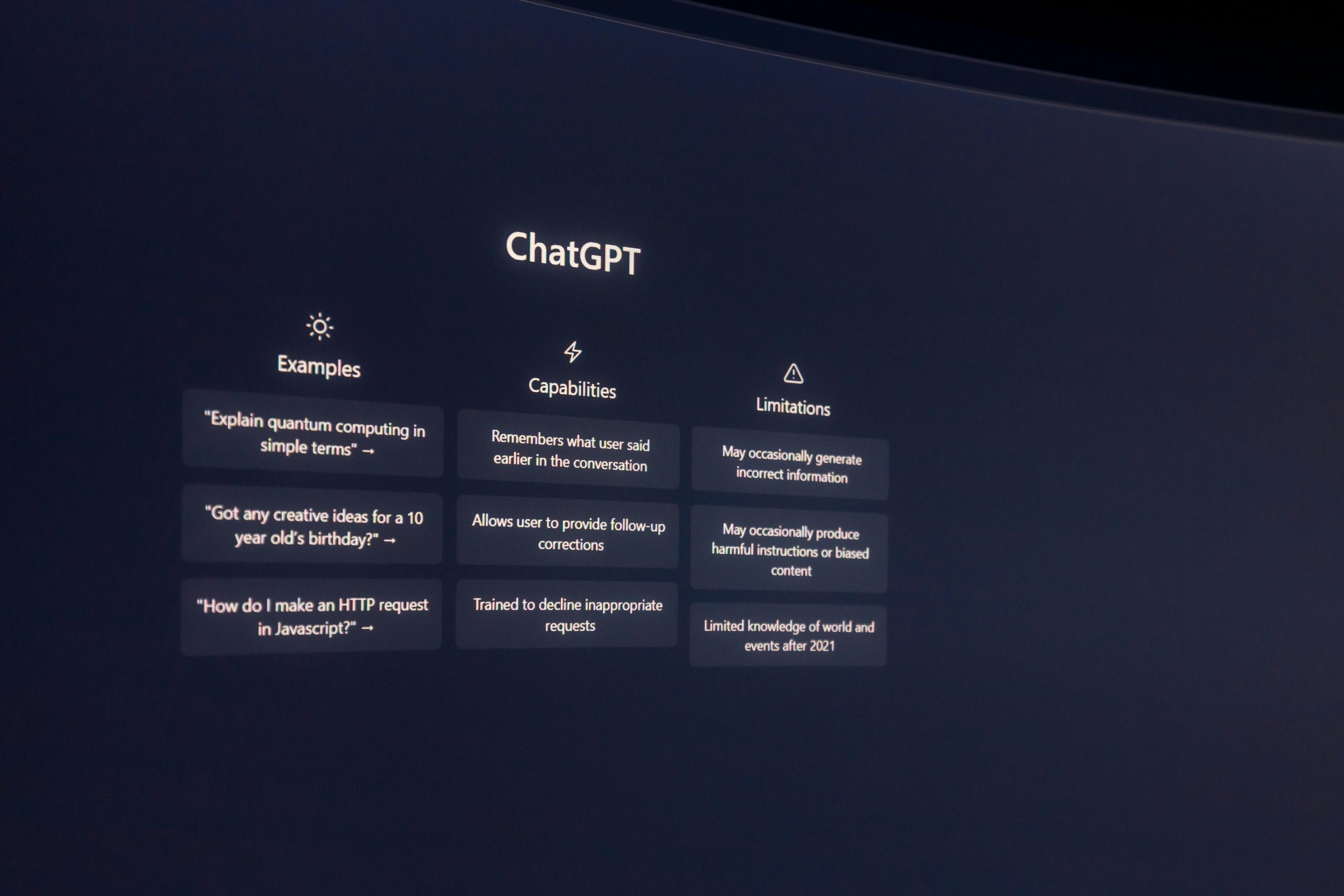

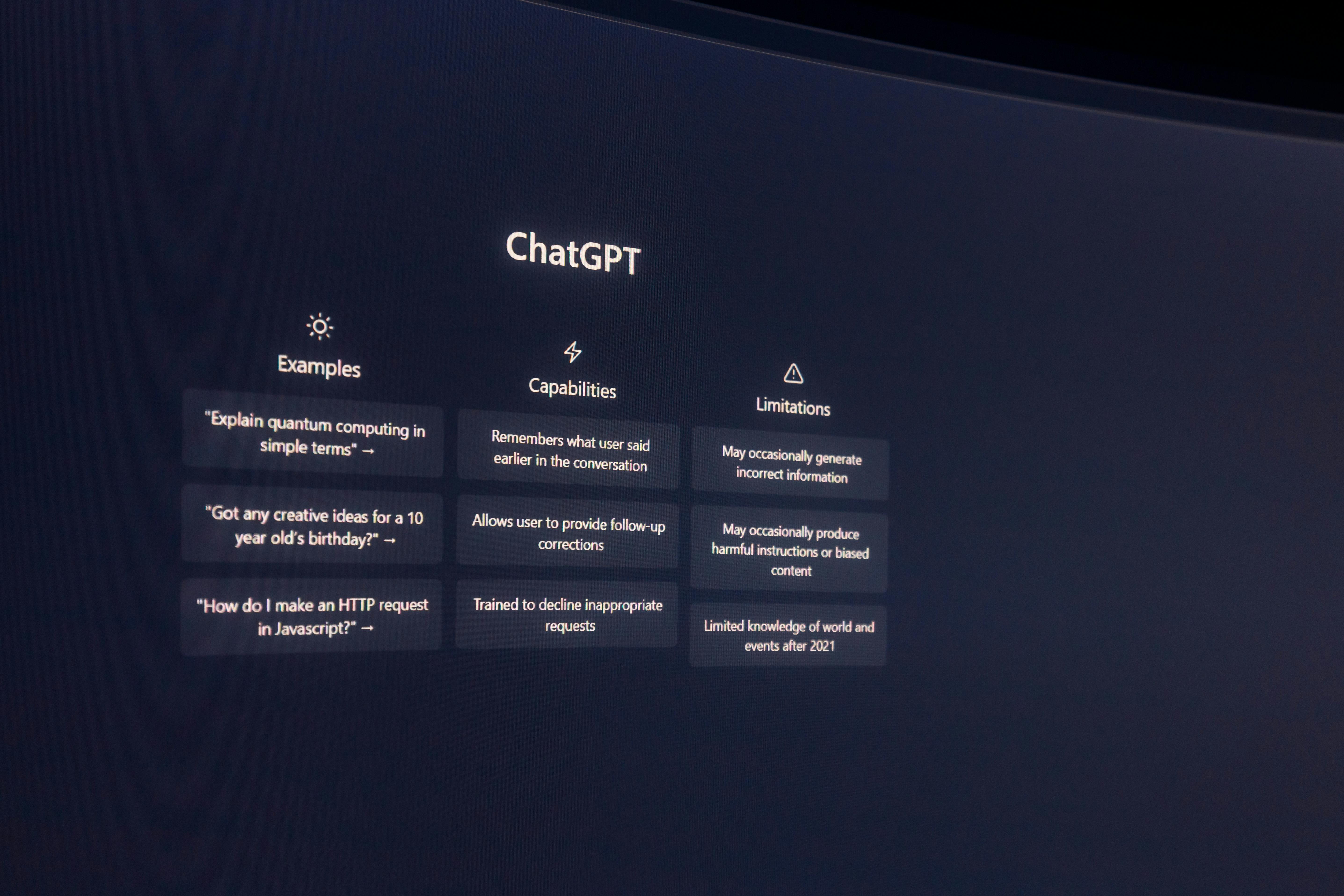

This moment is captured by the tactical maneuvers of the current leaders. The November 17, 2025, launch of ChatGPT Group Chats by OpenAI is a strategic shot across the bow aimed directly at Meta Platforms. It takes a feature at the core of Meta’s communication utility (shared, synchronous conversation) and positions it within the generative AI paradigm. Crucially, this move highlights the central trade-off of the age: Meta’s commitment to end-to-end encryption, a hallmark of messaging platforms like WhatsApp, is both a strategic defense against this type of AI integration and a self-imposed barrier to an immediate, comparable response in the AI interface layer. The value proposition is now inextricably linked to the product trade-offs platforms are willing, or forced, to make.

The Historical Precedent of Digitization Impacting Legacy Industries

The narrative arc of media—from newspapers to music to film—provides the most reliable template for understanding the disruption currently underway in the information and knowledge-work sectors. Historically, any industry reliant on a physical or administrative constraint—the printing press for replication, record store shelf space for distribution, or broadcast spectrum for reach—was rendered vulnerable once the internet digitally dissolved that constraint. The initial result was a predictable period of value destruction, as the old incumbents fought to maintain the relevance of the now-unbottlenecked components (e.g., an article’s text, an album’s audio file).

What followed this initial chaos, however, was the rise of new, digitally native intermediaries that established more efficient, software-defined bottlenecks. Google mastered the bottleneck of *discovery* amid abundance; Netflix mastered the bottleneck of *curation and delivery* for entertainment content. The lesson is that value does not disappear; it migrates to the point of control in the *new* value chain.

The AI upheaval is following this exact trajectory. The model weights themselves—the massive, trained artifacts of the AI era—are increasingly subject to commoditization. The rapid iteration cycles, the rise of capable Small Language Models (SLMs) optimized for cost efficiency, and the eventual normalization of open-source releases suggest that the mere ability to generate high-quality text or imagery on command will cease to be a defensible advantage. The value is already moving elsewhere.

The AI Unbundling and the Value of Process vs. Output

The concept of the “AI Unbundling,” first articulated when generative models began to atomize knowledge work, is now entering its maturation phase. Pre-AI, the value chain of knowledge work was largely bundled: an individual would search (discovery), synthesize facts (understanding), draft (creation), and format (delivery). Generative AI is deconstructing this process, making the final output—the document, the snippet of code, the image—the cheapest and most easily reproducible element. This output is rapidly becoming a low-value commodity, deliverable by an array of competing services, perhaps even native within an operating system or productivity suite.

Value capture is shifting decisively to the process. Superior AI is no longer defined by the eloquence of its prose but by its ability to reliably coordinate complex, multi-step tasks to achieve a desired outcome. This requires agentic capabilities: fact-checking against external, authoritative sources, integrating with enterprise data via connectors, using external tools (plugins), and managing the sequential dependencies between these sub-processes. The platform that masters this orchestration, the coordination layer required for reliable, high-fidelity execution, will control the next frontier of digital productivity, moving far beyond the simple promise of a well-written response.
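To make "managing the sequential dependencies between these sub-processes" concrete, here is a minimal sketch in Python. It is not any vendor's orchestration API: the step names (`retrieve`, `draft`, `fact_check`, `deliver`), the `run_pipeline` helper, and the string-returning stand-in steps are all hypothetical, chosen only to illustrate dependency-ordered execution using the standard library's `graphlib`.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List

# Hypothetical step type: receives the results of earlier steps, returns its own result.
Step = Callable[[Dict[str, str]], str]


def run_pipeline(steps: Dict[str, Step], deps: Dict[str, List[str]]) -> Dict[str, str]:
    """Run every step after the steps it depends on; return all results by step name."""
    # TopologicalSorter takes a mapping of node -> predecessors and yields
    # predecessors before the nodes that depend on them.
    graph = {name: deps.get(name, []) for name in steps}
    results: Dict[str, str] = {}
    for name in TopologicalSorter(graph).static_order():
        results[name] = steps[name](results)
    return results


# Illustrative stand-ins for real sub-processes (assumptions, not real tools).
steps: Dict[str, Step] = {
    "retrieve": lambda r: "source facts",
    "draft": lambda r: f"draft based on {r['retrieve']}",
    "fact_check": lambda r: f"checked({r['draft']}) against {r['retrieve']}",
    "deliver": lambda r: f"final: {r['fact_check']}",
}
deps: Dict[str, List[str]] = {
    "draft": ["retrieve"],
    "fact_check": ["draft", "retrieve"],
    "deliver": ["fact_check"],
}

results = run_pipeline(steps, deps)
print(results["deliver"])
```

In this toy version the output of each step is cheap to produce; the part that carries the logic is the dependency graph and the guarantee that, for example, fact-checking never runs before retrieval. A production system would presumably add retries, parallelism, and error handling, none of which are shown here.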

Platform Architecture and the New Bottlenecks

The strategic foundation of the previous decade in consumer technology was built upon the aggregation of unique, proprietary user data silos. These differentiated datasets fed superior recommendation engines, cementing network effects that became formidable moats. The current era signals a fundamental architectural divorce from this paradigm, driven by the economics of frontier model development.

The Shift from Data Silos to Compute Primacy

While data remains essential for fine-tuning and maintaining competitive relevance, the defining constraint on next-generation AI development and deployment has become compute capacity. Training and serving the most sophisticated, multi-modal, and personalized models demands computational resources that are finite and extremely expensive. This is evidenced by the massive capital expenditures reported across the hyperscalers throughout 2024 and 2025, focused on securing access to the most advanced semiconductor fabrication and building bespoke data centers optimized for these extreme, AI-specific workloads.

This shift favors a new class of incumbent whose advantages are rooted not in software network effects alone, but in mastery over physical reality: deep pockets for long-term infrastructure buildout, expertise in global semiconductor supply chains, and the capacity for massive, sustained capital deployment. The valuation ascent of chip designers serves as the most visible barometer of this shift in strategic scarcity.

The Role of Infrastructure Buildout and Power as a Limiting Factor

The scale of this compute buildout forces technological ambition to confront the constraints of the physical world. The energy requirements for global-scale AI inference and training are staggering, making power, once a nearly negligible operational input, the most significant limiting factor in deploying the next iteration of AI services. The need for massive, dedicated, and reliable power generation to fuel these specialized data clusters brings to the forefront infrastructural realities that were previously abstract to software architects.

This massive, required investment in tangible, physical infrastructure—a phenomenon echoing historical technological revolutions described by economists like Carlota Perez—is itself a source of value creation and economic stimulus. The optimistic view suggests that this “Perez-style infrastructure buildout”—the new power grids, the specialized cooling systems, the expanded fiber networks—will spill over, generating broader economic productivity. Companies that treat power generation and optimized hardware not as overhead but as a core, strategic differentiator are positioned to manage the physical limits of the digital frontier, a necessary precursor to sustained software superiority.

Concluding Thoughts: Navigating the Next Iteration Cycle

The overarching theme for all platform players in this new landscape, from an incumbent communications giant like Meta to an emergent challenger like OpenAI, is the imperative to make and manage agonizing product trade-offs. The short-term compromises made today will determine the viability and defensibility of the platform tomorrow.

Product Trade-offs as the Ultimate Determinant of Long-Term Platform Success

Nowhere is this tension more evident than in the conflict between data access and user privacy. The encryption debate is the purest distillation of this zero-sum game. To fuel next-generation, highly personalized AI, platforms require context-rich insights derived from user interactions. For Meta, this means a direct confrontation between its established promise of end-to-end encrypted communication and its growing need to monetize AI through ad targeting. The impending policy shift on December 16, 2025, to analyze user chats with Meta AI for ad personalization, while exempting regions like the EU, starkly illustrates this choice: sacrificing short-term user trust in key markets for a massive, immediate data advantage for its AI models.

Conversely, OpenAI’s deployment of ChatGPT Group Chats is an aggressive move to capture the *social context* layer where Meta is constrained by its own success in protecting that context. The ability to move faster in the product interface layer, even if it means navigating different philosophical trade-offs (such as the data utilized by enterprise connectors versus consumer chats), is now a key competitive lever.

Furthermore, the monetization trade-off is critical. As models commoditize, platforms must choose between rapid, high-volume, potentially low-relevance ad saturation (the legacy internet model) and maintaining long-term user trust through superior, context-aware ad relevance derived from deeper AI integration. Google’s pivot to conversation-based targeting in its AI Mode, where ads are placed based on the entire chat flow rather than just a single query, represents an attempt to create a new, more expensive form of digital network effect based on conversational depth. The failure to articulate a clear narrative for how a compromise—say, between absolute privacy and deep surveillance—serves the user’s ultimate utility will inevitably lead to fragmentation and user migration.

A Forward Look at Potential Resiliency Gaps in the AI Ecosystem

As capital floods into the foundational model and infrastructure layers, systemic risks are being concentrated rather than distributed. The hyper-reliance on a small cohort of semiconductor fabricators and specialized chip suppliers creates a single point of failure for the entire industry’s growth trajectory, a fragility that the legacy internet, with its more distributed software layers, did not possess to the same degree. Geopolitical instabilities intersecting with this hardware concentration create unprecedented systemic risk.

Ironically, the pursuit of the most advanced digital capabilities is leading to a practical consolidation into a handful of hyper-scaled, physically centralized environments. The resilience that characterized the open web—where data could theoretically be routed around any single point of failure—is being replaced by a structure where a handful of infrastructure providers hold disproportionate systemic leverage. The companies that successfully navigate the remainder of this decade will be those that best manage these physical and philosophical trade-offs, shipping meaningful product advancements that capture and retain the collective digital context of their users while building defensibility not just in software prowess, but in infrastructural reality.