The Thinking Game: How DeepMind Transformed Artificial Intelligence in Artistry and Enterprise

The legacy of DeepMind, now operating as the unified research organization Google DeepMind, goes beyond the conquest of classic strategy games. Its initial fame was cemented by systems like AlphaGo and AlphaZero achieving superhuman performance in Go and chess, but its most profound impact in the mid-2020s lies in its move into the subjective realms of human endeavor and in the restructuring of its own corporate identity to meet the demands of ubiquitous, transformative intelligence.

Creativity Under Scrutiny: AI’s Role in Artistic and Compositional Tasks

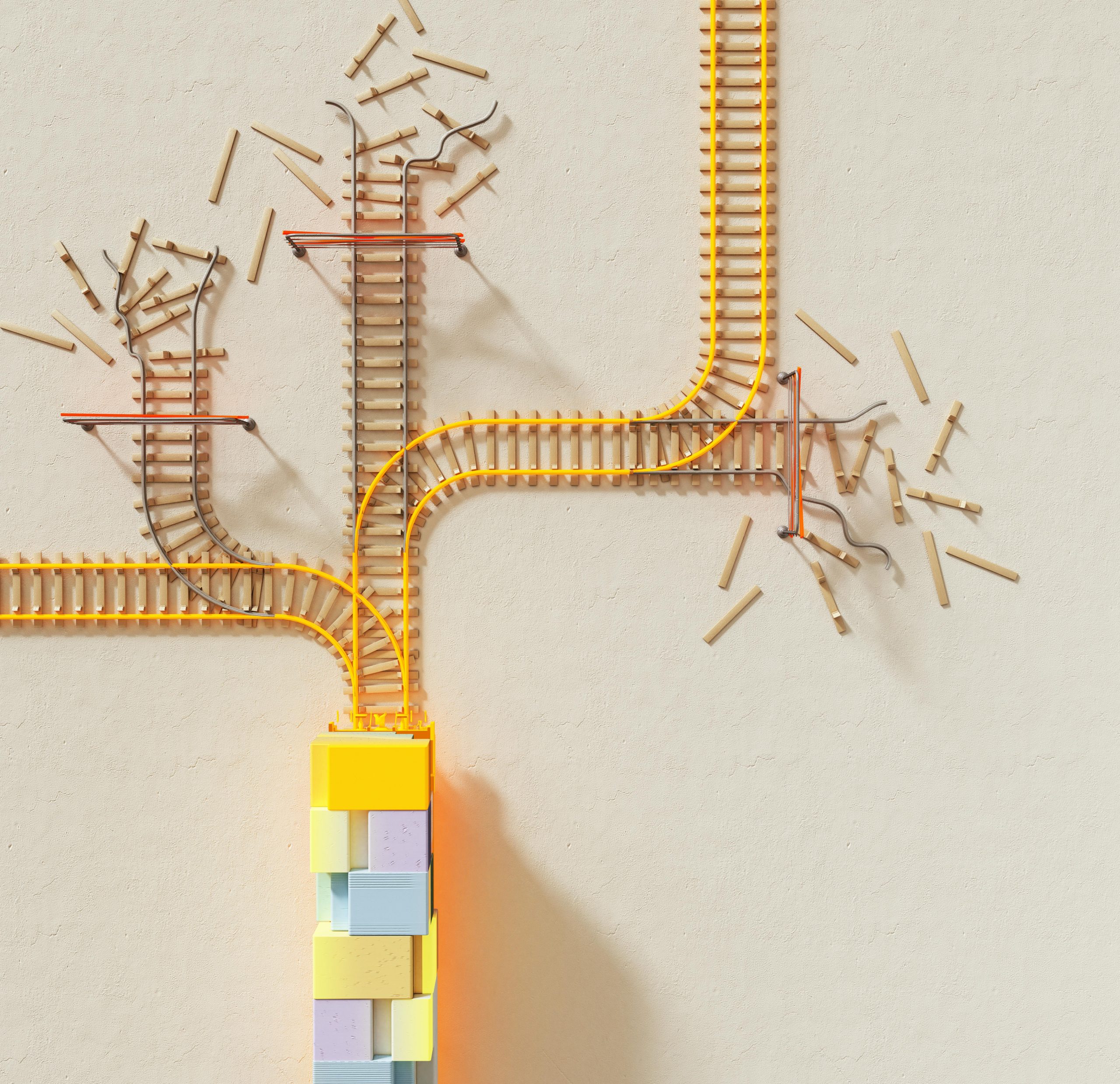

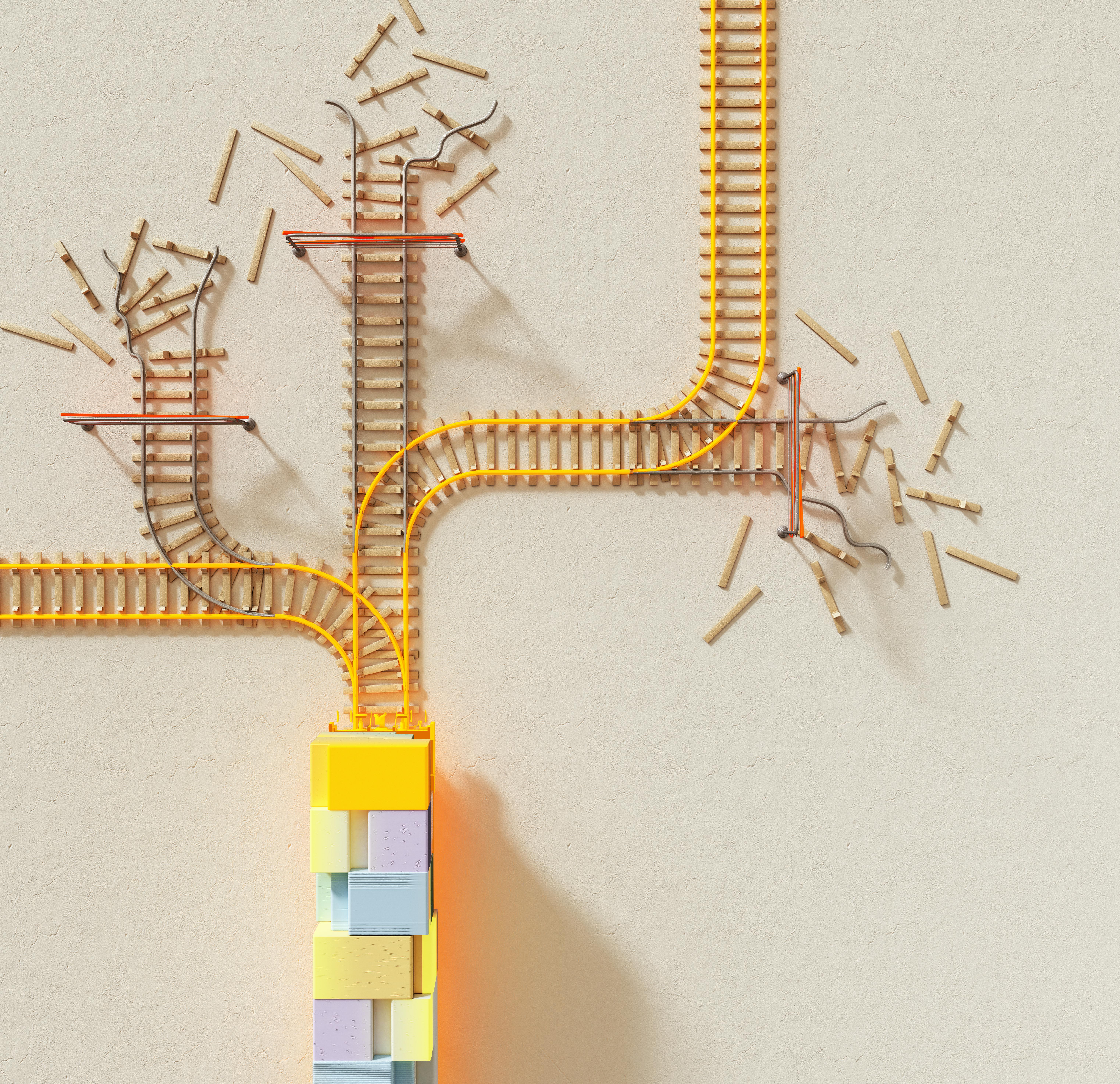

The implications of DeepMind’s work extend beyond the purely analytical and competitive; they touch upon the subjective and creative aspects of human endeavor. The capacity for an AI to generate, evaluate, and contribute to artistic domains like chess composition provides a fascinating barometer for assessing the depth of its learned understanding and the quality of its internal representation of the game’s subtleties. This exploration, particularly active in the research cycles of 2024 and 2025, signals a move from AI as a calculator to AI as a conceptual partner.

Generative Puzzles: Testing the Bounds of Algorithmic Imagination

The development of an AI system focused on creating aesthetically pleasing and surprising chess puzzles served as a laboratory for testing algorithmic creativity. A successful puzzle in this context is not merely one with a forced mate; it requires an element of misdirection, an unexpected move that unlocks the solution, and an overall structural elegance that resonates with human intuition. In a late 2025 study, researchers at Google DeepMind, in collaboration with the University of Oxford and Mila, investigated this very boundary with their work titled “Generative Chess Puzzles”.

The researchers deliberately engineered the training to reward these subjective qualities. The system was tasked with inventing the kind of scenarios that human composers strive to create: positions that are beautiful because they are deep, not just because they are technically correct. The methodology involved pre-training generative models on four million puzzles from the Lichess database, followed by refinement using reinforcement learning (RL). The novel reward function prioritized positions that were both unique (having only one winning move) and counterintuitive (solvable by strong engines but often eluding weaker ones). This approach doubled the number of novel chess puzzles compared to the original training set while maintaining aesthetic diversity. It also shifted the evaluation metric from a simple win/loss probability to a more complex assessment of artistic merit, a significant conceptual leap for machine learning research.
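The reward idea described above can be illustrated with a toy sketch. This is not the reward function from the study: the function name `puzzle_reward`, the 0.9 win threshold, the 1.0/0.5/0.0 reward levels, and the use of plain dictionaries in place of real engine evaluations are all assumptions made for illustration. It only shows how "unique" and "counterintuitive" might be combined into a single score.

```python
from typing import Mapping


def puzzle_reward(
    strong_eval: Mapping[str, float],
    weak_eval: Mapping[str, float],
    win_threshold: float = 0.9,  # assumed cutoff for "winning", not from the study
) -> float:
    """Toy reward for a candidate puzzle position.

    strong_eval / weak_eval map each legal move to a win probability as judged
    by a strong and a weak engine (hypothetical inputs; both are expected to
    cover the same moves).
    """
    # Uniqueness: exactly one move wins according to the strong engine.
    winning = [move for move, p in strong_eval.items() if p >= win_threshold]
    if len(winning) != 1:
        return 0.0
    solution = winning[0]

    # Counter-intuitiveness: the weak engine's preferred move is not the solution.
    weak_choice = max(weak_eval, key=weak_eval.get)
    return 1.0 if weak_choice != solution else 0.5


# Hypothetical evaluations for a position with three legal moves.
strong = {"Qh7+": 0.98, "Rxe5": 0.40, "Nf3": 0.35}
weak = {"Qh7+": 0.20, "Rxe5": 0.55, "Nf3": 0.25}
print(puzzle_reward(strong, weak))  # 1.0
```

In this sketch a position with several winning moves earns nothing, a position with a single winning move that even the weak engine finds earns partial credit, and only a single winning move that the weak engine misses earns the full reward.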

Expert Reception: The Subjective Beauty of Machine-Generated Artistry

The true measure of success in this creative endeavor was the reaction of renowned human masters. When a panel of grandmasters (GMs) and composition experts reviewed the AI-generated problems, their appraisal moved beyond mere technical correctness to judge the work on its aesthetic appeal. The panel included esteemed figures such as GM Matthew Sadler, GM Jonathan Levitt, and International Master for chess compositions Amatzia Avni.

Their ability to single out certain positions as ‘favorite’ or ‘compelling’ confirmed that the AI had grasped something beyond rote pattern matching; it had learned the grammar of surprise inherent in good composition. The experts favored puzzles with original, paradoxical, surprising, and naturally occurring positions, particularly those that integrated aesthetic themes in innovative ways. Levitt described the project as a “pioneering step,” suggesting an evolving collaboration in which AI moves beyond simple database mining to true position generation. Some criticism surfaced regarding positions that were perhaps too trivial or lacked a certain human narrative, but the positive overall reception validated the system’s ability to generate original content that engaged the expert mind. This exploration suggests a future where AI is not just an analytical tool but a creative partner, capable of generating novel concepts within structured human traditions.

The Current Landscape and Future Implications of the Evolved Intelligence

As 2025 progresses, the work initiated by DeepMind is no longer a nascent project but a central component of the global technology ecosystem. The shift in its organizational identity and the continuous push towards more general and capable systems necessitate a robust framework for governance and ethical deployment.

The Corporate Integration: The Identity of Google DeepMind in the Modern Era

The evolution of the entity to its current designation, Google DeepMind, signifies a deeper integration of its advanced research capabilities into the wider corporate structure. The merger with the Google Brain division, which officially formed Google DeepMind in April 2023, accelerated an ongoing trend to streamline AI efforts. This alignment was further solidified in late 2024 and early 2025 when Google CEO Sundar Pichai moved core AI product teams, including those for the marquee Gemini models, under the DeepMind umbrella.

This integration is a recognition that the technology developed to “solve intelligence” is now a core strategic asset, necessary for maintaining leadership in fields ranging from large-scale data processing to advanced robotics. The shift from an independent research lab to a primary division underscores the perceived value of generalizable, flexible intelligence in the competitive commercial and scientific spheres of today. By concentrating compute-intensive model building, the structure aims to simplify development and scale the delivery of capable AI for users, partners, and customers.

The strategic landscape for Google DeepMind in 2025 is characterized by a dual-track approach in its model portfolio:

- Proprietary Scale: Represented by the flagship Gemini line (with models like Gemini 2.5 and the multimodal Gemini 3.0 Pro released in November 2025), which aims for universal assistant capabilities, potentially reaching “world model” status enabling deep planning and tool use.

- Openness and Edge Efficiency: Embodied by the Gemma line, which targets on-device deployment and fosters community goodwill, with variants downloaded over 100 million times by developers as of late 2025.

This duality reflects the tension between foundational scientific ambition and commercial timelines, especially given Alphabet’s reported AI infrastructure investment of more than $75 billion. Key leaders, including CEO Demis Hassabis and COO Lila Ibrahim, are guiding the organization to balance its scientific heritage with the urgency of product delivery. The organization also continues groundbreaking scientific work, evidenced by advances such as AlphaGenome and AlphaEvolve, an evolutionary coding agent unveiled in May 2025.

Ethical Dimensions and Ongoing Vigilance in AI Development

The sheer power demonstrated by systems capable of superhuman performance in complex domains necessitates an equally robust commitment to ethical oversight and responsible deployment. The documentary coverage itself often touches on the balance between ambition and responsibility, and the founders have acknowledged the weight of developing technology with world-altering potential.

The governance structure at Google DeepMind is multi-layered, guided fundamentally by the company’s AI Principles, which emphasize bold innovation alongside responsible development. To operationalize these principles, several internal bodies safeguard the research and deployment pipeline:

- Responsibility and Safety Council (RSC): Co-chaired by COO Lila Ibrahim and VP of Responsibility Helen King, the RSC evaluates high-impact research, projects, and collaborations against the AI Principles.

- AGI Safety Council: Led by Co-Founder and Chief AGI Scientist Shane Legg, this council focuses on safeguarding processes and research against extreme risks that might arise from powerful future AGI systems.

Central to this ongoing vigilance is the iterative evolution of safety protocols. Google DeepMind continues to update its Frontier Safety Framework, a set of protocols designed to stay ahead of potential severe risks associated with frontier AI models. The framework grows more important as systems become more agentic; it incorporates deployment mitigations and addresses complex issues such as deceptive alignment risk.

The ethical dimension in 2025 also involves navigating evolving regulatory and public expectations. COO Lila Ibrahim has stressed that responsibility must be shared across sectors, requiring thoughtful research from companies and appropriately scaled regulation from governments. Risk is treated as a continuum, encompassing near-term challenges like bias, misinformation, and misuse, as well as long-term concerns regarding control and the grounding of core technology values.

A significant policy development in early 2025 was the update to Google’s AI ethics guidelines. This revision marked a departure from previous, near-absolute prohibitions, introducing a capacity for carefully monitored exceptions where AI-driven technologies might support national security and surveillance initiatives, provided they are under strict regulatory oversight. This nuanced approach highlights the current industry challenge: balancing the immense potential benefits for public safety and global security against inherent, evolving risks. The commitment to this balance—between breakthrough algorithms and the necessary ethical guardrails—remains as critical to the organization’s mission as the development of the next transformative algorithm itself.