The Concept of Closing the Loop in Corporate Sustainability Initiatives

The application of AI for internal optimization—whether through thermal management or supply chain circularity—cements the argument for gaining, and keeping, “social permission.” This concept is vital. It means the industry isn’t just claiming to deliver external societal value (like better medical diagnostics or advanced climate modeling); it’s demonstrating that it is actively employing its most advanced tools to manage the environmental consequence of its own technological appetite. It’s a declaration of responsibility that is essential for continued, unencumbered expansion.

When an executive can point to their operational dashboard and show, in real time, how their machine learning models are actively reducing the power usage effectiveness (PUE) of their global data center network, they move the conversation away from abstract environmental risk and toward verifiable performance improvement. This is about building a more responsible and circular approach to technological expansion. It reframes the narrative:

- From Drain to Dial: AI shifts from being an abstract, uncontrolled energy drain to a fine-tuned, controllable dial on operational efficiency.

- Credibility through Application: Using the technology to fix self-inflicted problems builds more credibility than simply reporting on external benefits.

- Proactive Compliance: Implementing self-regulating systems sets a high bar, potentially making external mandates less necessary or even less effective.
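For readers less familiar with PUE: following The Green Grid, it is conventionally defined as total facility energy divided by the energy delivered to IT equipment. A value of 1.0 is the theoretical ideal, and everything above it is overhead such as cooling and power conversion. The minimal sketch below computes it from two meter readings. The function names and sample figures are hypothetical and serve only to show what a falling PUE on a dashboard represents.

```python
def power_usage_effectiveness(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Return PUE = total facility energy / IT equipment energy.

    1.0 would mean every kilowatt-hour reaches the IT load; anything
    above 1.0 is overhead such as cooling and power conversion.
    """
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Energy spent on everything other than the IT load itself."""
    return total_facility_kwh - it_equipment_kwh


# Hypothetical monthly readings before and after an ML-driven cooling change,
# with the IT load held constant so the PUE change reflects overhead only.
before = power_usage_effectiveness(1_500_000, 1_000_000)
after = power_usage_effectiveness(1_300_000, 1_000_000)
saved = overhead_kwh(1_500_000, 1_000_000) - overhead_kwh(1_300_000, 1_000_000)
print(f"PUE before: {before:.2f}, after: {after:.2f}, overhead saved: {saved:,.0f} kWh")
```

Holding IT energy fixed in the example is deliberate. PUE says nothing about how efficiently the IT load itself does useful work, which is why it works best alongside the output-based metrics discussed later.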

The message to the market is this: We are not just waiting for regulations to tell us how to be green; we are using the cutting edge of our science to make ourselves green first. This is a far more powerful stance when facing public and political headwinds.

Implications for the Broader Technological Ecosystem and Future Regulation

The statements advocating for this dual responsibility are not isolated corporate platitudes; they are functioning as a significant bellwether for the entire technology sector as we enter late 2025. AI development is now intrinsically linked to national competitiveness, resource security, and international leverage, especially in geopolitical competition. As a result, this call for internal accountability resonates loudly across boardrooms and, crucially, in the legislative chambers of countries competing in the global race for AI dominance.

The framework being established sets an emerging, informal standard. Other companies will invariably be measured against the leaders who demonstrate this self-regulation, whether formally by investors or informally by public perception.

Shifting Industry Focus from Pure Capability to Responsible Deployment

The challenge being issued to the ecosystem is profound: The next defining leap in artificial intelligence will not be measured *only* by a new benchmark score or a higher parameter count. It will be measured by its ability to operate within an accepted, and perhaps shrinking, societal energy budget. If a breakthrough requires ten times the power of its predecessor, it might be a scientific marvel, but it’s an economic and regulatory liability.

This mindset is catalyzing a renewed focus on algorithmic efficiency, as mentioned earlier, pushing for research into sparse models, knowledge distillation, and radically more efficient hardware utilization. The goal is to perform the same, or better, tasks using fewer computational cycles. It's a direct consequence of policymakers and executives understanding that an unmanaged energy demand curve is an existential threat to the industry's growth trajectory.
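At its core, knowledge distillation trains a small "student" model to match the softened output distribution of a large "teacher." The sketch below shows only that loss term, written in plain Python for readability. It follows the temperature-scaled formulation from Hinton et al. (2015), and the logit values are made up. A real training loop would compute this over batches in a framework such as PyTorch or JAX and would usually blend it with an ordinary cross-entropy loss on the ground-truth labels.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Soft-target distillation loss: KL(teacher || student) on softened outputs.

    The T**2 factor keeps gradient magnitudes comparable across temperatures,
    as in Hinton et al.'s formulation.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return (temperature ** 2) * kl


# Hypothetical logits for a three-class problem.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]   # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]     # prefers a different class
print(distillation_loss(close_student, teacher), distillation_loss(far_student, teacher))
```

The student that already agrees with the teacher gets a much smaller loss. Minimizing this term is what lets a compact model inherit much of a larger model's behavior at a fraction of the inference cost.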

The paradigm is shifting: Innovation will be rewarded not just for being large and powerful, but for being lean and effective. Think of the automotive analogy: progress isn't just about building a faster engine; it's about achieving that speed while using a fraction of the fuel. As one analyst noted, some companies have seen their chips become 10,000x more energy efficient between 2016 and 2025, suggesting that while historic rates of improvement won't last forever, significant gains are still being made.
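To put the analyst's figure in perspective, a 10,000x gain spread over the nine years from 2016 to 2025 implies an improvement of roughly 2.8x per year, compounded. The snippet below does that arithmetic. It assumes a constant annual rate purely for illustration; real hardware generations arrive in uneven steps.

```python
def implied_annual_factor(total_gain: float, years: float) -> float:
    """Constant yearly multiplier that compounds to `total_gain` over `years`."""
    return total_gain ** (1.0 / years)


factor = implied_annual_factor(10_000, 2025 - 2016)
print(f"~{factor:.2f}x per year")  # ~2.78x per year
```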

Anticipating Public and Governmental Scrutiny Over Resource Allocation

As AI adoption permeates everything from medical diagnosis to financial markets, the energy consumption of data centers is rapidly becoming a visible political issue. Governments, already under pressure to maintain grid stability and meet increasingly strict climate pledges, are naturally inclined to scrutinize high-consumption sectors. This is where the call for “social permission” acts as a crucial preemptive measure.

It is an industry attempt to self-regulate and demonstrate inherent social utility *before* external bodies impose potentially restrictive caps or mandates on energy procurement for computing purposes. For example, recent federal actions in the US have focused on streamlining permitting for data centers, leaning into dispatchable baseload energy sources to fuel expansion, while simultaneously calling for grid upgrades. This regulatory environment is a direct response to visible power demands.

The dialogue around AI energy use is, therefore, an attempt to shape the regulatory future. The industry is attempting to establish the utility-for-energy trade-off—the measure of benefit derived per kilowatt-hour consumed—as the primary benchmark for continued operational freedom. If the industry can demonstrate that the marginal utility of a new AI cycle (e.g., finding a new life-saving drug compound) far outweighs its energy cost, the argument for unrestricted growth is strengthened.
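One way to make the utility-for-energy trade-off concrete is to express it as a ratio. A marginal version of that ratio captures the "new AI cycle" argument. The notation below is an illustrative assumption, not an established industry standard. $U$ is benefit measured in whatever unit the application justifies, $E$ is energy in kWh, and $\lambda$ is a hypothetical value placed on each kilowatt-hour (its price plus any environmental cost).

```latex
\eta = \frac{U}{E}, \qquad
\eta_{\text{marginal}} = \frac{\Delta U}{\Delta E}, \qquad
\text{expansion is justified when } \Delta U > \lambda \, \Delta E .
```

Suppose a successor model uses ten times the energy of its predecessor. The increment is then $\Delta E = 9E_0$, so under this framing its additional benefit must exceed $9\lambda E_0$ to clear the bar.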

Consider the political risk of unchecked growth. As one senator stated, the public is weary of paying higher energy bills to fund an industry's "incessant hunger for electric power" while new fossil fuel generators are built nearby. By focusing on internal efficiency and closing the loop, the industry seeks to neutralize this argument by showing that the growth is being managed responsibly, not just subsidized by the public grid.

Actionable Insights for the Next Wave of AI Development

This is where the rubber meets the road. The abstract discussion must translate into actionable steps for those building, deploying, and regulating AI systems. For developers and engineers, the mandate is to treat computational efficiency with the same fervor as model accuracy.

Practical Takeaways: How to Drive Efficiency Today

- Prioritize Algorithmic Pruning: Do not treat the largest model as the default solution. Actively research and deploy techniques like pruning and knowledge distillation to create smaller, faster, and less power-hungry versions of successful models. Efficiency should be a core requirement in your model selection process, not an afterthought.

- Embrace Hardware-Aware Software Design: Understand the specific energy profiles of your target hardware (GPUs, TPUs, etc.). Writing code that optimizes for the specific architecture's latency and power envelope can yield massive gains compared to generic code. This is the realm where even marginal software improvements (like those seen with TensorRT-LLM) scale into megawatts saved.

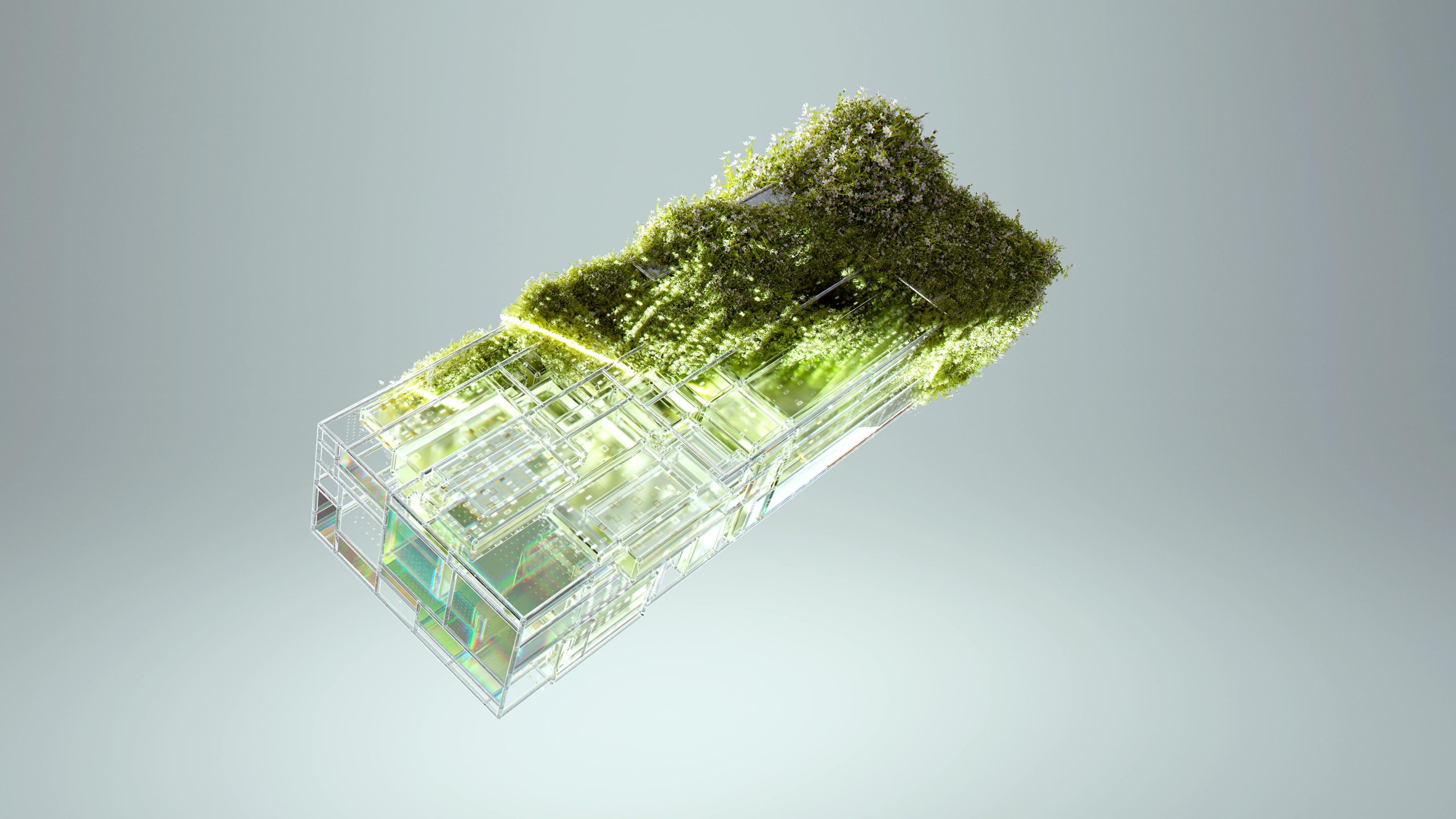

- Mandate Closed-Loop Thinking in Infrastructure: If you are designing or procuring data center space, demand metrics on water reuse (e.g., percentage of recycled water usage) and packaging circularity (e.g., reusable shipping containers) as non-negotiable KPIs alongside traditional uptime metrics. This forces suppliers to innovate outside the energy-only focus.

- Calculate and Publish Utility-per-Unit: Move beyond just reporting total PUE. Begin calculating and reporting the energy consumed *per meaningful unit of output*: kilowatt-hours per successful medical diagnosis, per high-quality translated document, or per unit of scientific simulation completed. This is the true measure of the utility-for-energy trade-off.
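The last two takeaways can be combined into a single reporting record. The minimal sketch below assumes you already meter facility energy and water for one workload and can count its successful outputs. The class name, field names, success criterion, and sample numbers are all hypothetical placeholders for whatever your organization actually tracks.

```python
from dataclasses import dataclass


@dataclass
class WorkloadReport:
    """One reporting period for a single workload (all fields hypothetical)."""
    name: str
    facility_kwh: float        # total facility energy attributed to the workload
    successful_outputs: int    # e.g. diagnoses confirmed, documents passing QA
    total_water_m3: float      # water used for cooling in the period
    recycled_water_m3: float   # portion of that water that was reused

    def kwh_per_output(self) -> float:
        """Energy per successful output; energy spent on failed runs still counts."""
        if self.successful_outputs == 0:
            return float("inf")
        return self.facility_kwh / self.successful_outputs

    def recycled_water_pct(self) -> float:
        """Share of cooling water that came from reuse, as a percentage."""
        if self.total_water_m3 == 0:
            return 0.0
        return 100.0 * self.recycled_water_m3 / self.total_water_m3


report = WorkloadReport("translation", facility_kwh=12_000.0, successful_outputs=480_000,
                        total_water_m3=900.0, recycled_water_m3=630.0)
print(f"{report.name}: {report.kwh_per_output() * 1000:.1f} Wh per document, "
      f"{report.recycled_water_pct():.0f}% recycled water")
```

Dividing by *successful* outputs rather than total requests is the key design choice here. It means wasted or rejected work raises the reported cost instead of disappearing into the denominator.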

Furthermore, for business leaders, the time for passive observation is over. The regulatory environment is solidifying around resource allocation. Companies that can proactively demonstrate high efficiency and a circular economy strategy will secure a competitive edge and a stronger position for future lobbying and permitting efforts. Consider the long-term value of investing in advanced cooling technologies. While the upfront cost may seem higher, the operational savings, reduced water dependency, and enhanced public perception offer a significant return on investment beyond just the PUE chart.

Conclusion: The Feedback Loop that Defines the Next Decade

The dual role of AI, as both the consuming behemoth and the optimizing savior, defines the technological tightrope walk of the mid-2020s. As of December 2, 2025, the trajectory shows that the problem of energy consumption is accelerating faster than many anticipated, making the solution side of the equation an urgent necessity, not a future luxury. The industry is at an inflection point.

Will we allow AI to simply become the world’s biggest utility customer, or will we force it to become the world’s most powerful efficiency tool? The evidence suggests the most forward-thinking leaders are choosing the latter. They are demonstrating that the most advanced thinking in machine learning should be applied not just to generating novel outputs, but to generating novel efficiencies in the hardware and infrastructure that make it all possible. This inward application of intelligence—the closing of the loop—is the only path to maintaining both the pace of innovation and the necessary social permission to continue building the future.

What efficiency metrics are you tracking inside your own compute infrastructure? Are you measuring utility per output, or just total consumption? Let us know in the comments how your organization is tackling the dual challenge of AI growth and energy responsibility.