Contrasting Models: Enterprise Adoption Versus Speculative Buildout

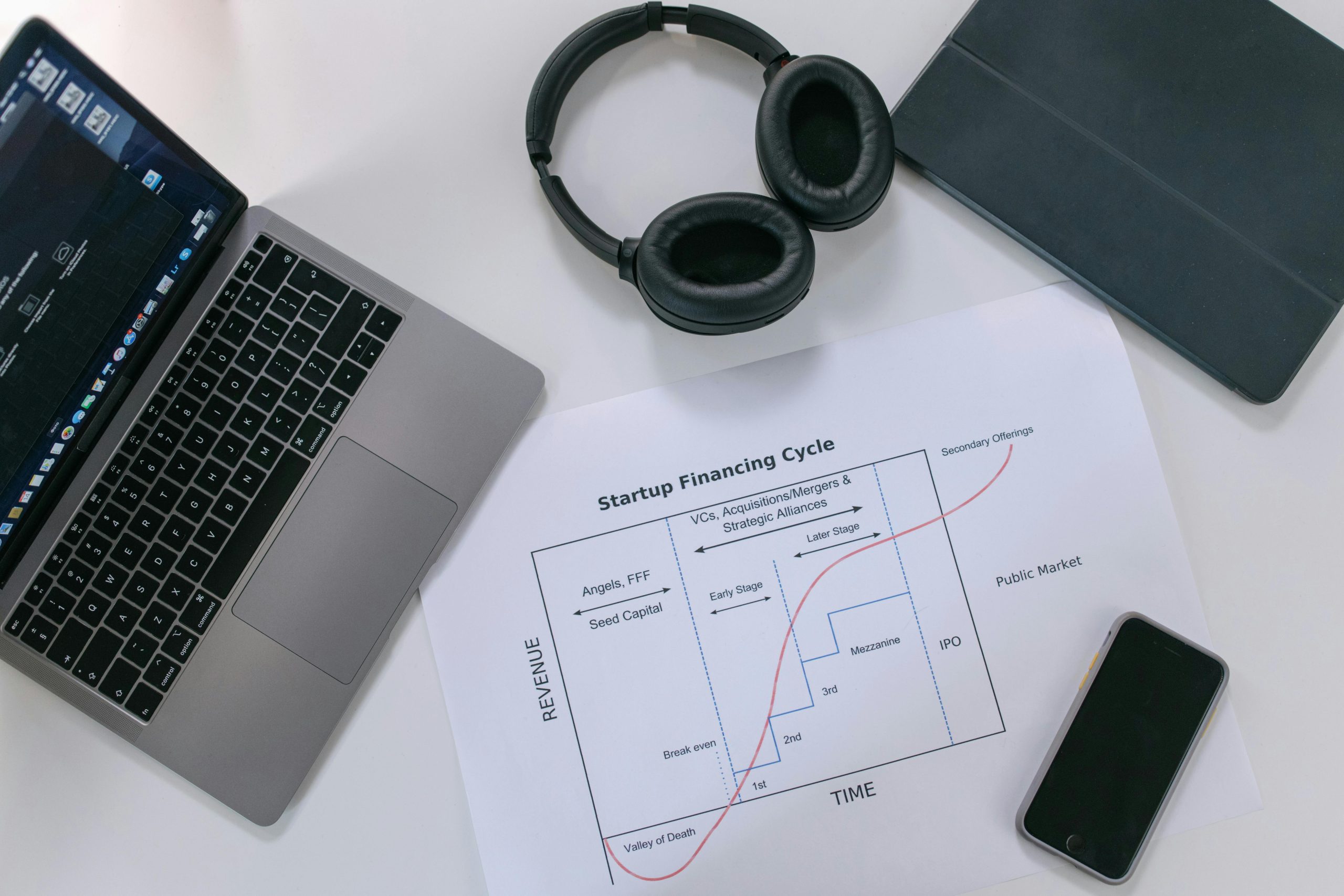

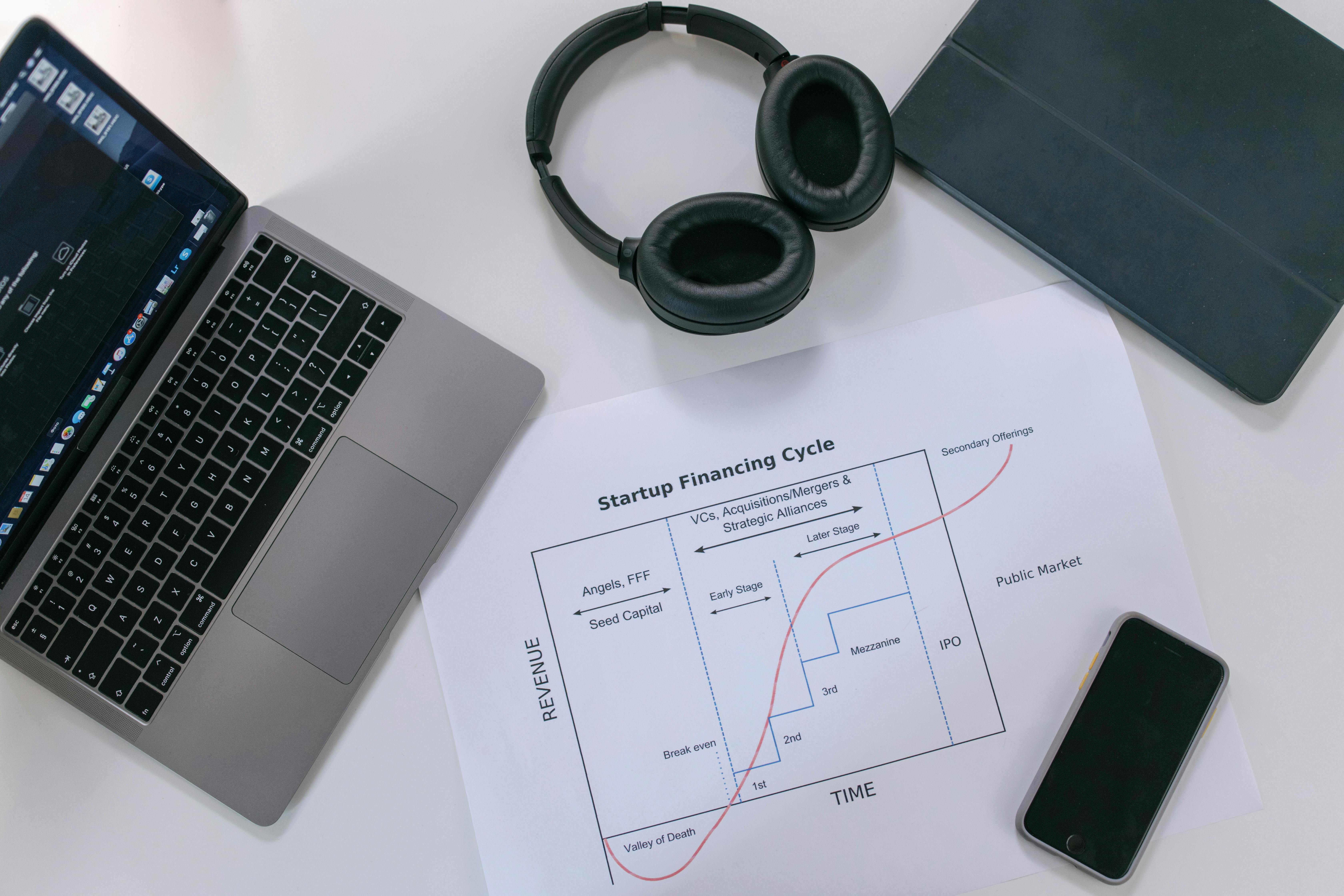

The market is currently splitting into two very distinct camps when it comes to scaling and revenue generation, and this divergence is where the financial stress tests are being run. One model is proving its mettle by integrating directly into the machinery of commerce; the other is banking on a massive, world-changing adoption curve that hasn’t fully materialized yet.

The Revenue-Driven Enterprise Adoption Path

The resilient players are the ones embedding their AI directly into existing enterprise workflows. They aren’t waiting for universal consumer adoption; they are selling measurable, immediate value to businesses today. This often looks like consumption-based billing or seat licensing tied directly to a quantifiable business outcome—say, a 30% reduction in document processing time or a 5% saving on procurement spend, as one startup, Procure AI, is showing for its clients.

Data suggests this is the more pragmatic path in 2025. A recent industry survey indicated that while 88% of organizations report regular AI use, the majority—about two-thirds—are prioritizing internal use cases before tackling customer-facing applications. Why? Because internal efficiency gains are easier to track, provide faster ROI, and build internal expertise in a controlled environment. This model keeps the operational burn rate in check because the revenue is earned by servicing existing, proven demand, not speculative future demand.

The Speculative Model and Its Fixed Cost Trap

The model currently experiencing financial strain is the one built on the speculative promise of near-universal, rapid adoption of generative tools across *everything*. This approach requires building out world-class computational capacity—the $1.4 trillion in compute commitments OpenAI reportedly has in its sights—based on the *promise* that billions of users will soon translate into billions in sustainable revenue.

The financial gap highlights the volatility of this approach. The upside is theoretically limitless—a new platform for human interaction. But the downside is a massive, non-cancellable fixed cost structure (the cloud contracts) that must be serviced month after month while waiting for that theoretical adoption curve to fully mature. For those operating in this speculative lane, the path forward isn’t just about raising more capital; it’s about an aggressive, forced pivot toward securing more of the profitable, real-world enterprise adoption revenue to stabilize the massive operational burn rate.

For a deep dive into how enterprises are structuring their *own* AI spending—whether they are choosing to build custom solutions or buy off-the-shelf platforms—check out our analysis on the Enterprise AI Buy vs. Build Strategy.

Critical Variables Influencing Future Financial Stability

Escaping a projected deficit isn’t just about knocking on doors asking for a bigger check. The true path to long-term stability is embedded in the structure of existing deals and the science of computing itself. These variables can either act as financial anchors or provide much-needed lift.

The Flexibility to Adjust Pre-Agreed Compute Commitments

Here’s the immediate, make-or-break factor: contract negotiation. The current financial model for several major AI labs assumes rigid adherence to those multi-hundred-billion-dollar cloud agreements. If the terms include hard “take-or-pay” clauses, the organization is effectively locked into a multi-year, non-cancellable lease on a fleet of supercomputers it may not yet be able to fully utilize. That turns a potential *future* gap into an immediate liquidity threat.

The organization’s most immediate risk-mitigation tool is its ability to renegotiate the volume or timing of capacity consumption with its major cloud partners like Oracle, Microsoft, and AWS. If contract terms allow for ‘cooling off’ periods or variable drawdowns based on actual usage milestones, the immediate cash-flow pressure can be managed. Navigating these complex contractual landscapes is now as critical to survival as advancing model architecture. The market is watching closely to see if these massive commitments are treated as fixed debt or as scalable services—a distinction that could mean the difference between a manageable slowdown and a crisis. We analyzed the legal implications of these large-scale cloud deals in our piece on Cloud Contract Flexibility and AI Scale.

- Best Case: Renegotiate a tiered payment structure tied to key product revenue milestones.

- Worst Case: Hard “take-or-pay” minimums force the organization to pay for idle compute capacity, accelerating the burn rate.
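The gap between those two cases can be made concrete with a small calculation. The sketch below is illustrative only: the function name `monthly_compute_bill`, the capacity units, and every figure are hypothetical and are not drawn from any actual cloud contract. It assumes a take-or-pay deal bills the full commitment regardless of usage, while a usage-based drawdown bills only what is consumed.

```python
def monthly_compute_bill(committed_units, used_units, unit_price, take_or_pay=True):
    """Return (bill, idle_cost) for one month.

    Under take-or-pay, the full commitment is billed even when usage is lower.
    Under a usage-based drawdown, only consumed units are billed.
    """
    billable = max(committed_units, used_units) if take_or_pay else used_units
    bill = billable * unit_price
    # Idle cost is the portion of the bill paying for capacity nobody used.
    idle_cost = max(billable - used_units, 0) * unit_price
    return bill, idle_cost


# Hypothetical figures, chosen only for illustration.
committed = 1_000  # capacity units committed per month
used = 600         # capacity units actually consumed
price = 2.0        # price per capacity unit

rigid_bill, rigid_idle = monthly_compute_bill(committed, used, price, take_or_pay=True)
flex_bill, flex_idle = monthly_compute_bill(committed, used, price, take_or_pay=False)

print(f"take-or-pay: bill={rigid_bill}, paid for idle capacity={rigid_idle}")
print(f"usage-based: bill={flex_bill}, paid for idle capacity={flex_idle}")
```

In this toy setup, 40% of the take-or-pay bill pays for capacity that sits idle, which is the mechanism by which hard minimums accelerate the burn rate.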

The Unpredictable Nature of Technological Efficiency Gains

While contracts create the immediate problem, technological innovation offers the long-term salvation. The economic viability of deploying these massive models is intrinsically linked to how quickly the industry can get more computational “bang for its buck.” Every incremental optimization in the software stack or the development of specialized, more energy-efficient hardware accelerators effectively lowers the future cost of serving every single user.

This is the Jevons Paradox applied to computation: as the cost to *run* an AI operation drops, the total *demand* for operations tends to rise, so each marginal operation gets cheaper even as aggregate consumption grows. Whether the total bill actually shrinks depends on which effect wins. If the industry hits a breakthrough in memory management or algorithm design that cuts inference costs by even 5% across the board, the cumulative savings over the next five years could be colossal, provided demand growth does not absorb them. This technological arbitrage remains the most optimistic, though least certain, element in the current financial equation. It’s the hope that Moore’s Law, or its modern equivalent in AI accelerators, will outpace the current spending spree. You can read more about the race for efficiency in our report on The AI Hardware Accelerator Race.
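To see why an efficiency gain does not automatically shrink the total bill, consider a minimal sketch. It assumes total spend is simply cost per operation times operation volume; the function names and all numbers are hypothetical, and real demand responses are far harder to predict.

```python
def total_inference_spend(cost_per_op, volume, cost_cut, volume_growth):
    """Total spend after a per-operation cost cut and a change in demand."""
    new_cost = cost_per_op * (1.0 - cost_cut)
    new_volume = volume * (1.0 + volume_growth)
    return new_cost * new_volume


def break_even_growth(cost_cut):
    """Demand growth at which total spend is unchanged despite the cost cut."""
    return cost_cut / (1.0 - cost_cut)


# Hypothetical baseline: 1.0 cost unit per operation, 100 operations.
baseline = total_inference_spend(1.0, 100.0, 0.0, 0.0)
flat_demand = total_inference_spend(1.0, 100.0, 0.05, 0.0)     # 5% cheaper, same demand
rising_demand = total_inference_spend(1.0, 100.0, 0.05, 0.10)  # 5% cheaper, 10% more demand
threshold = break_even_growth(0.05)

print(f"baseline spend:        {baseline:.2f}")
print(f"5% cut, flat demand:   {flat_demand:.2f}")
print(f"5% cut, +10% demand:   {rising_demand:.2f}")
print(f"break-even demand growth: {threshold:.2%}")
```

Under these assumptions, a 5% cost cut lowers total spend only while demand grows by less than roughly 5.3%; beyond that point the operator pays more in aggregate even though each operation is cheaper.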

A useful framework for thinking about this is the current state of enterprise AI projects themselves. Research suggests that while AI *can* boost productivity by 25% in speed and 40% in quality for certain tasks, 30% of enterprise generative AI projects are expected to stall due to escalating costs or unclear value. If the foundational *applications* are struggling to show immediate, scalable ROI, the massive *infrastructure* bets must slow down or face a reckoning.

Implications for the Wider Technology Alliance Network

The financial health of this AI pioneer isn’t happening in a vacuum. It’s the central node in a vast, interconnected web of technology suppliers whose own growth forecasts have been aggressively built around the pioneer’s expansion promises. When the center trembles, the whole web vibrates. This interdependency creates a system where mutual success is deeply woven, and instability at the core transmits measurable shockwaves outward.

Assessing Vulnerabilities Across the Supplier Chain

Who is most interwoven? The analysis explicitly names the major cloud providers (AWS, Azure, Oracle), the chip manufacturers (primarily Nvidia), and the key institutional investors. For these partners, the AI pioneer’s aggressive expansion plans, like the hundreds of billions in commitments to cloud services, have been baked into their own capital expenditure forecasts for years. They provisioned capacity, ordered future chip fabrication slots, and hired staff in *good faith* based on these expansion trajectories.

A sudden, sustained deceleration in the organization’s purchasing power—or, worse, a failure to secure the next funding round—forces these suppliers to contend with excess capacity they provisioned for the next wave of growth. This directly impacts their own earnings guidance, inventory levels, and, yes, their stock performance. Remember the volatility Oracle experienced after announcing its massive cloud deal? That’s a clear example of the market pricing in the counterparty risk associated with these colossal, front-loaded agreements. The system is designed for mutual uplift; when the primary engine sputters, every piece of machinery hitched to it feels the strain.

Consequences of Potential Downscaling on Partner Ventures

Should this leading organization be forced into a drastic downscaling—an attempt to right-size spending to its *current* cash generation reality—the consequences for its partners would be immediate and selective.

Consider the immediate impact:

- Cloud Service Partners: Could face billions in stranded, pre-provisioned capacity, a sunk cost that hits their operational margins immediately.

- Chipmakers: Might see previously guaranteed, multi-year orders deferred, canceled, or at least slowed down, impacting their own future revenue guidance and capital investment plans.

- Equity Holders: A forced retrenchment would likely lead to a sharp, immediate devaluation of their holdings, unless the organization can successfully pivot to a profitability-first posture.

The current trajectory, as illuminated by the projections of a major shortfall, demands a level of sustained, multi-year capital access that few entities in the commercial world have ever successfully maintained without a clear, imminent path to sustained free cash flow generation. The unfolding story of how this financial gap is managed will serve as a crucial case study for the entire capital-intensive AI industry for the remainder of the decade.

For more on how other players are navigating this intense environment, see our backgrounder on AI Investor Sentiment and Market Pressures in 2025.

Actionable Insights for Navigating the Peak Cycle

So, what do business leaders, developers, and investors take away from this high-stakes financial drama playing out on the AI stage? The era of blind faith in capital availability is over. The emphasis must shift from pure growth to strategic resilience.

Key Takeaways & Actionable Insights for Today

For Enterprise Buyers and Users:

- Demand Proof Over Promise: Don’t sign massive, long-term consumption agreements based only on next-generation capability roadmaps. Scrutinize the ROI from current, deployed use cases. If your internal AI pilots are stalling, scaling up your investment now is premature.

- Embrace the 80/20 Rule: Prioritize the 80% of your needs that can be met by proven, subscription-based vendor platforms, which offer predictable costs and outsourced R&D. Reserve custom “build” for truly proprietary, highly differentiated tasks.

- Inquire About Cost Transparency: Just as the big labs need flexibility in their cloud contracts, your enterprise needs flexibility in your vendor contracts. Look for clear pricing models that scale down as easily as they scale up.

For Investors and Lenders:

- Due Diligence on Compute Contracts: Capital scrutiny must now focus intensely on contractual obligations. Are the cloud commitments structured as hard debt or flexible service agreements? This is the primary measure of liquidity risk.

- Value Enterprise Integration: Favor AI companies that can demonstrate concrete, recurring revenue from deep enterprise integration over those whose valuation relies solely on the promise of mass-market consumer adoption. Real-world utility, like the cost savings shown by players like Procure AI, is the new benchmark for early profitability.

- Track Efficiency Gains: Monitor the pace of software and hardware optimization. A sustained drop in inference cost could fundamentally reset the economic model, making current high-burn strategies viable in the long run, but don’t bet on it happening tomorrow.

The narrative is shifting from “How fast can we grow?” to “How efficiently can we operate this immense scale?” This moment, defined by massive funding gaps and a frantic search for real-world revenue, is the necessary correction that will likely clear the excess speculation and pave the way for a more durable, sustainable AI industry over the rest of this decade. We are transitioning from the speculative buildout phase to the sober reality of realizing a return on the trillion-dollar bet.

What are your thoughts on the sustainability of the current AI spending trajectory? Are you seeing enterprise adoption move fast enough to justify the infrastructure outlay? Share your perspective in the comments below—we’re all in this together, navigating the data centers and the balance sheets.