Beyond the Clock: Recalibrating the AI Value Proposition for the C-Suite

For nearly two years, the headline metric for every AI implementation has been time savings. And yes, the data is compelling enough to mandate adoption: the average enterprise worker now reports reclaiming 40 to 60 minutes per active day thanks to generative AI tools. For power users in data science and engineering, that figure rises to 60 to 80 minutes saved daily. Over a five-day week, that is five to nearly seven hours, most of a working day, and a productivity gain that managers love to see on timesheets. However, in the 2025 economy, that efficiency metric is table stakes, not strategic differentiation. The conversation must evolve to speak the language of the Chief Financial Officer, who is now intensely focused on strategic advantage and revenue impact.
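
As a quick sanity check on the weekly figure, the arithmetic can be sketched in a few lines of Python. The five-day week and eight-hour workday are assumptions made for illustration, not figures from the underlying data, and `weekly_hours_saved` is a hypothetical helper name.

```python
# Rough arithmetic only: converts daily minutes saved into weekly hours.
# ASSUMPTIONS (not from the source data): 5 active days per week, 8-hour workday.
ACTIVE_DAYS_PER_WEEK = 5
HOURS_PER_WORKDAY = 8


def weekly_hours_saved(minutes_per_day: float, days: int = ACTIVE_DAYS_PER_WEEK) -> float:
    """Return hours reclaimed per week for a given daily saving in minutes."""
    return minutes_per_day * days / 60


if __name__ == "__main__":
    # Ranges quoted in the text: 40-60 min (average worker), 60-80 min (power user).
    for label, low, high in [("average worker", 40, 60), ("power user", 60, 80)]:
        low_h = weekly_hours_saved(low)
        high_h = weekly_hours_saved(high)
        print(
            f"{label}: {low_h:.1f} to {high_h:.1f} hours/week "
            f"({low_h / HOURS_PER_WORKDAY:.0%} to {high_h / HOURS_PER_WORKDAY:.0%} of a workday)"
        )
```

Under these assumptions, even the top of the power-user range stays below one full eight-hour day per week, which is why "almost" is the right qualifier.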

The future revenue narrative hinges on qualitative, non-time-based value. The CFO isn’t just looking for less time spent on email drafting; they are looking for market share expansion and defensible margins. The shift requires demonstrating how AI enables entirely new capabilities that were previously science fiction:

- Novel Insight Generation: The ability to synthesize massive, previously inaccessible or siloed datasets (like combining customer service transcripts with complex financial modeling outputs) to generate a strategic insight that directly informs a market move.

- Accelerated Time-to-Market (TTM): Quantifying the reduction in the product development lifecycle. If a 12-month product cycle is cut to nine months due to AI-assisted coding, testing, and content generation, the resulting early revenue capture is a direct, unassailable ROI driver.

- Compliance and Accuracy Transformation: In regulated industries, moving from a 95% compliance accuracy rate (with human review overhead) to a consistent 99.9% cuts the error rate from 5% to 0.1%, reducing exposure to regulatory fines and operational risk. This translates directly into risk-adjusted earnings.
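
The two quantitative bullets above can be turned into back-of-the-envelope numbers. This is a minimal sketch, not a valuation model: the monthly revenue figure and the review volume are hypothetical inputs, revenue is assumed flat from launch, and `early_revenue_capture` and `expected_errors` are illustrative helper names.

```python
def early_revenue_capture(
    monthly_revenue: float, baseline_months: int, accelerated_months: int
) -> float:
    """Revenue earned in the months gained by shipping earlier.

    ASSUMPTION: revenue is flat per month from launch (no ramp, no discounting).
    """
    months_gained = baseline_months - accelerated_months
    return monthly_revenue * months_gained


def expected_errors(items_reviewed: int, accuracy: float) -> float:
    """Expected number of non-compliant items at a given accuracy rate."""
    return items_reviewed * (1 - accuracy)


if __name__ == "__main__":
    # Hypothetical product earning $2M per month once launched.
    gain = early_revenue_capture(2_000_000, baseline_months=12, accelerated_months=9)
    print(f"Early revenue from a 12 -> 9 month cycle: ${gain:,.0f}")

    # Hypothetical volume of 100,000 regulated items per year.
    before = expected_errors(100_000, 0.95)
    after = expected_errors(100_000, 0.999)
    print(f"Expected errors: {before:,.0f} before vs {after:,.0f} after")
    print(f"Reduction factor: {before / after:.0f}x")
```

The point of the second function is that a move from 95% to 99.9% is not a 4.9-point improvement in the metric that matters; it is roughly a fiftyfold drop in the number of errors that can trigger a fine.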

The data confirms this pivot is already underway. A recent survey shows the share of CFOs reporting very positive ROI from generative AI has skyrocketed from 27% to 85% over the last year. This is the shift we’re analyzing. When revenue leaders can present a case built around new revenue streams, lowered regulatory risk, or market acceleration—rather than just cost avoidance—the technology moves from an IT expense to a core strategic investment. For teams looking to formalize this, digging into advanced AI ROI measurement frameworks is no longer optional; it’s the prerequisite for securing the next budget cycle.

The CFO’s Metrics: From Hourly Efficiency to Strategic Moats

Managers focus on hourly efficiency: they see time saved per task. Strategic leaders must present the CFO with a narrative built on three pillars:

The narrative needs to move from, “We saved 50 minutes,” to, “We achieved the same result 40% faster, capturing Q4 revenue in Q3.” That’s the language of shareholder value, and it’s the only language that ensures sustained funding for the next generation of models.

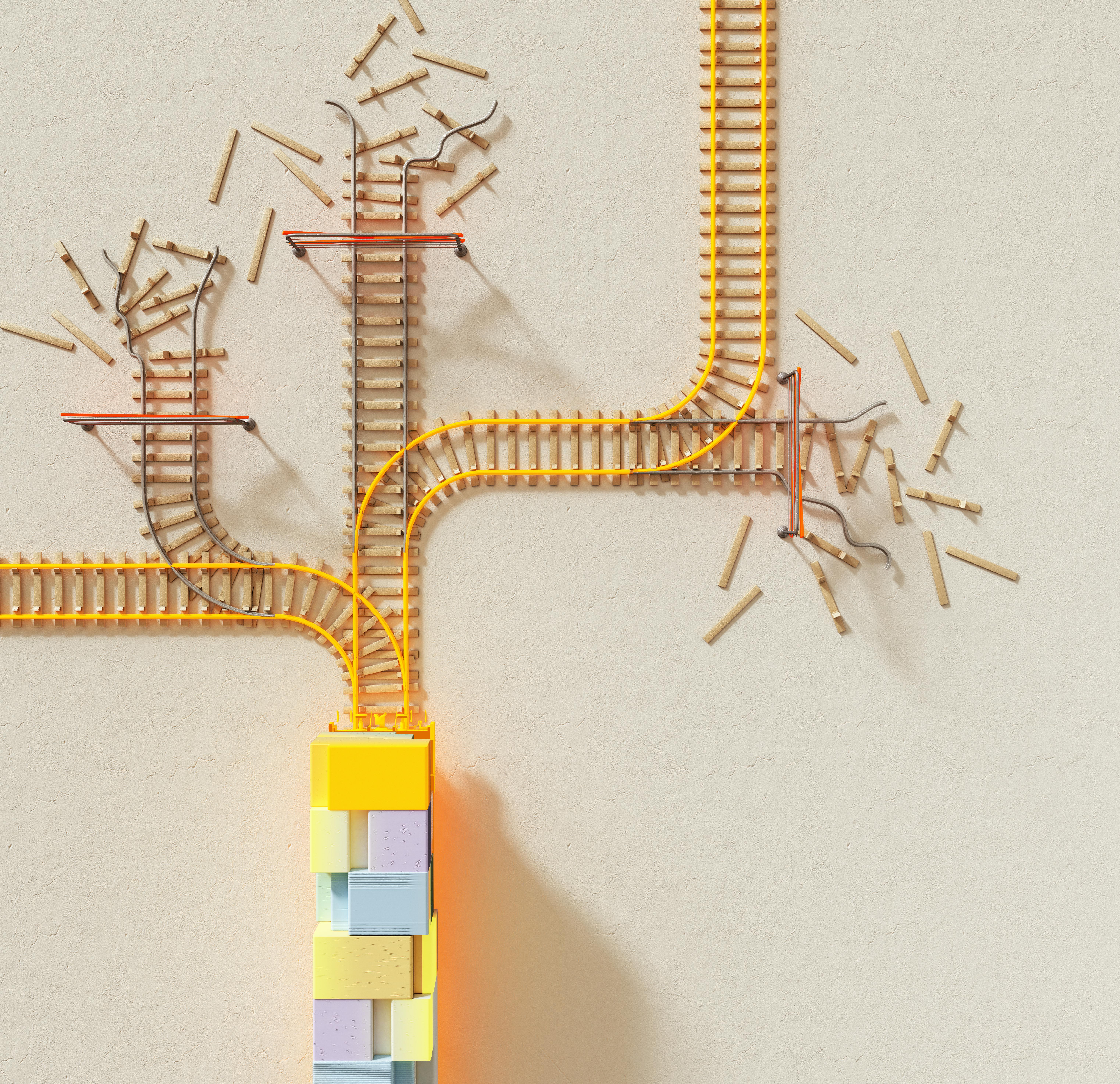

The Looming Danger of Competitive Platform Lock-In

As enterprises move from simple querying in a web interface to embedding AI models via APIs into their customer-facing products and mission-critical internal systems, they are implicitly signing up for a multi-year, multi-million-dollar commitment. This is the silent, yet perhaps most dangerous, risk of accelerated adoption: vendor lock-in.

When an organization’s core intellectual property (IP) is woven directly into the API calls, so that the workflow logic, the fine-tuning data pipelines, and the core user experience all rely on the proprietary functions of a single provider’s large language model (LLM) architecture, switching providers becomes an existential corporate crisis. The switching cost is no longer measured only in developer hours to rewrite code; it is measured in the potential devaluation of the customer experience or the complete loss of critical institutional memory encoded in the system.

The Infrastructure Trap: When Tool Provision Becomes Strategic Alignment

The sales pitch that secured the initial deal might have been about transactional tool provision: “Best performance, best price for token usage.” The reality that emerges later is one of infrastructural dependence. This transition forces a fundamental re-evaluation of the vendor relationship. It must move from transactional tool provision to long-term strategic alignment. The developer platform must not just be good; it must be indispensable.

What does indispensable look like in 2025? It means:

If the platform meets these criteria, the customer stays not because they *have* to, but because they *want* to: the alternative means compromising on speed, security, or functionality. This strategic positioning is the only true defense against predatory pricing shifts or unexpected platform changes from the provider. Leaders must treat the vendor relationship as an exercise in managing vendor platform risk from day one, asking not just “What does this cost now?” but “What would it cost us to rebuild this logic on a different cloud provider’s infrastructure in 36 months?”
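
One common way to keep that 36-month rebuild cost visible is to isolate provider-specific calls behind a thin interface, so business logic never imports a vendor SDK directly. The sketch below is illustrative only: `TextModel`, `VendorAClient`, `VendorBClient`, and `summarize_ticket` are hypothetical names, and no real provider API is called. It also does nothing for the deeper forms of lock-in described above, such as fine-tuning data pipelines.

```python
from dataclasses import dataclass
from typing import Protocol


class TextModel(Protocol):
    """Minimal interface the business logic is allowed to depend on."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class VendorAClient:
    """Hypothetical stand-in for one provider's SDK; returns a canned string."""

    model_name: str = "vendor-a-large"

    def complete(self, prompt: str) -> str:
        return f"[{self.model_name}] {prompt}"


@dataclass
class VendorBClient:
    """Hypothetical stand-in for a second provider with the same interface."""

    model_name: str = "vendor-b-large"

    def complete(self, prompt: str) -> str:
        return f"[{self.model_name}] {prompt}"


def summarize_ticket(model: TextModel, ticket_text: str) -> str:
    """Business logic: knows about TextModel, not about any specific vendor."""
    return model.complete(f"Summarize this support ticket: {ticket_text}")


if __name__ == "__main__":
    # Swapping providers is a one-line change at the call site.
    for client in (VendorAClient(), VendorBClient()):
        print(summarize_ticket(client, "Customer cannot reset password."))
```

The design choice here is structural typing: any class with a matching `complete` method satisfies `TextModel`, so a new provider can be added without touching `summarize_ticket`.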

The Race Against the Curve: Model Efficiency vs. Revenue Growth

The innovation engine of the AI sector is a double-edged sword. Every quarter brings a newer, more capable, and often significantly more expensive frontier model. The enterprise adoption trajectory is in a direct, high-stakes race against the accelerating cost curve of model training and inference.

Training a state-of-the-art model (think GPT-4 level or beyond) still costs tens to hundreds of millions of dollars in compute alone. One recent estimate put GPT-4’s training compute cost at $78 million, and Google’s Gemini Ultra was estimated in 2024 to be even higher, at $191 million in compute. These initial training costs sink massive capital into infrastructure, meaning the ongoing cost of running those models (inference) must be aggressively managed for profitability.

The Inference Economics Tightrope Walk

The good news? Inference costs are collapsing due to competitive pressure and architectural breakthroughs. The API price war of 2025 has been brutal; one major provider slashed input token costs by 90% for its successor model within 16 months. This competition is fantastic for the enterprise user base—the cost-per-token is dropping rapidly.

The danger, however, emerges when the revenue leader’s contract structure (often based on tiered consumption or static pricing) cannot keep pace with the per-token cost the company incurs internally to serve its own customers. Prices fall for a given level of capability, but each new frontier model tends to launch at a premium. If the price of inference for these next-generation, more capable systems (which enterprises inevitably demand) rises faster than the per-token revenue secured from enterprise contracts, profitability remains elusive. The CRO’s P&L becomes a race against the infrastructure bill.
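
The squeeze described above can be made concrete with two small calculations: the gross margin on a fixed-price contract as serving cost moves, and the constant monthly rate implied by a 90% price cut over 16 months. This is a rough sketch under stated assumptions: the dollar figures are hypothetical, the price decline is assumed to be smooth, and both function names are illustrative.

```python
def gross_margin(revenue_per_m_tokens: float, cost_per_m_tokens: float) -> float:
    """Gross margin as a fraction of revenue, per million tokens served."""
    return (revenue_per_m_tokens - cost_per_m_tokens) / revenue_per_m_tokens


def implied_monthly_change(total_change: float, months: int) -> float:
    """Constant monthly rate equivalent to a total change over `months`.

    total_change = -0.90 means a 90% cut. ASSUMPTION: the change compounds
    smoothly, which real price lists (stepwise cuts) do not do.
    """
    return (1 + total_change) ** (1 / months) - 1


if __name__ == "__main__":
    # Hypothetical contract: customer pays a static $10 per million tokens.
    contract_price = 10.0
    for label, cost in [("current model", 4.0), ("next-gen model", 12.0)]:
        margin = gross_margin(contract_price, cost)
        print(f"{label}: cost ${cost:.2f}/M tokens -> margin {margin:.0%}")

    # The 90% input-token cut within 16 months cited in the text.
    rate = implied_monthly_change(-0.90, 16)
    print(f"Implied monthly price change: {rate:.1%}")
```

With a static contract price, moving the same customer onto a more expensive model flips the margin from positive to negative, which is the case for tying pricing tiers to model tiers rather than to a flat per-token rate.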

This demands a tight coupling between commercialization strategy and technical milestones. The revenue team cannot simply sell the best *available* model; they must sell the best *economically viable* model for the use case, tied to the research division’s advancements in efficiency. The mandate for the revenue leadership is to:

The revenue strategy is, therefore, the financial engine underwriting the research quest. A failure on the revenue curve means a failure to fund the quest for Artificial General Intelligence (AGI)—a topic we will return to.

The Internal Chasm: Bridging the AI Readiness and Talent Gap

The uneven adoption trajectory isn’t just between companies; it’s starkly evident within a single enterprise. While “frontier firms” are generating twice the message volume per seat as the median company, other departments within the same organization are barely scratching the surface. This wide gap in usage—between the most advanced users who are building custom GPTs and the lagging users who only use basic text generation—points to a market-wide internal talent and training gap.

The industry is stalled not just by technology integration, but by human capacity. Data from late 2025 shows that a staggering 71% of AI teams spend over a quarter of their time on “data plumbing” rather than innovation, highlighting a skills deficit in foundational data architecture required to feed these models. Compounding this, many L&D leaders report only moderate success in upskilling, and only 30% of companies globally believe they have enough skilled talent to scale their AI projects.

Enablement as the New Sales Strategy

For a revenue leader, selling premium-tier AI tools into an environment where only 28% of employees know how to use their company’s existing AI applications is setting yourself up for high churn and low expansion revenue. The sales team cannot simply be tool providers; they must be transformation consultants. Their mandate must expand beyond closing the deal to ensuring the customer actually realizes the value they paid for.

This means the revenue strategy must now encompass an entire ecosystem view. Actionable advice here centers on enterprise-wide AI upskilling enablement:

This shift acknowledges that the true competitive advantage isn’t the model itself, but the organizational structure and talent that can wield it effectively. This is the core of the “AI ambition gap”—the difference between *wanting* AI and having the *operational maturity* to execute on it.

The Talent War Escalates: Setting New Executive Benchmarks

The recent, high-profile poaching of a sitting CEO from a major, publicly-traded, cloud-adjacent software platform to lead a pure-play AI developer sends a shockwave through the entire technology sector. This event, occurring in late 2025, is more than just a headline; it’s a definitive market signal that changes the calculus for everyone.

The Price of Commercial Leadership in AI

This talent move signals that the financial incentives and perceived opportunity at the bleeding edge of AI creation—the firms building the next computational paradigm—are now powerful enough to lure the top commercial operators away from the established, often more predictable, high-growth roles at legacy cloud giants. This inevitably drives up compensation and dramatically increases the strategic importance placed on the Chief Revenue Officer (CRO) and commercial leadership within every emerging AI platform company fighting for market share.

This dynamic is set against a backdrop of slowing global talent mobility. In 2025, the global movement of highly skilled professionals, including AI and STEM talent, fell by 8.5%. Yet, despite this slowdown, specific hubs like the US continued to gain share of highly skilled inflows. The executive poaching suggests that the lure of AI’s potential, combined with commensurate financial reward, is strong enough to counteract broader macroeconomic caution and mobility slowdowns for the absolute top-tier talent.

Forcing Competitors to Reassess Their Go-To-Market Focus

When a leading AI company elevates a revenue specialist with deep, battle-tested enterprise platform experience, it’s a public declaration that the technology is deemed mature enough to support a dedicated, seasoned sales apparatus. Competitors—especially the large technology firms backing their own LLM efforts—are now compelled to match this focus. If they are still relying on general-purpose enterprise salespeople who cut their teeth selling storage or database licenses, they risk appearing behind the curve.

Expect a rapid professionalization and standardization of enterprise AI sales across the entire industry as rivals scramble to hire leaders with similar pedigrees. The market is demanding a sales force that understands not just the model’s potential, but the customer’s integration reality—the very data plumbing issues cited earlier. This executive mobility sets the new bar for who is qualified to scale AI monetization.

Trust, Mission, and the Transactional Lens

Every time a company aggressively hires a revenue chief and prioritizes profitability metrics, it introduces a short-term test for customer trust. Customers who initially engaged with the AI developer as a partnership built on cutting-edge research ideals—a noble quest—may now view the relationship through a more purely transactional, vendor-client lens. The alignment between research idealism and commercial reality is crucial.

Proactively Managing the Perception Gap

The new revenue leadership has a clear mandate to manage this perception proactively. Their customer success charter must double down on demonstrating that the drive for profit depends on, and is not *detrimental* to, delivering world-class utility.

This means:

The message must be: We are successful *so that* we can continue funding the research that makes your business exponentially better. The transaction funds the mission, not the other way around.

The Ultimate Financial Engine: Underwriting the Long-Term AGI Bet

Ultimately, the entire commercial push—the aggressive pursuit of multi-billion-dollar enterprise contracts, the massive investment in AI infrastructure, and the frantic hiring of commercial leaders—is predicated on one central, multi-decade bet: that the current trajectory of large model development will lead to Artificial General Intelligence (AGI).

The revenue generated today from selling today’s models (which are already providing measurable value, with 75% of users reporting improved speed or quality of output) is not just profit; it is the essential funding mechanism. It bridges the financial gap between the current state of narrow AI and the potential future, exponential capability of AGI.

The CRO: The Unsung Research Partner

The Chief Research Officer chases the next breakthrough in the lab, requiring massive, sustained capital infusions that are often too large for traditional venture funding alone. The Chief Revenue Officer (CRO), therefore, becomes the essential financial engine underwriting this ambitious, multi-decade quest. The CRO’s role is not secondary to the Chief Research Officer’s; it is **as critical to the ultimate mission** as the lead scientist’s.

Without revenue growth outpacing the escalating cost curve of training and deployment, the fundamental research mission stalls. This is why executive mobility is so pronounced: the market understands that commercial execution today is the direct pre-condition for realizing the AGI promise tomorrow. The executive stepping into this role is not just signing up for quarterly targets; they are signing up to finance the next computational era.

Conclusion: Key Takeaways for Navigating the 2025 AI Race

The acceleration of enterprise AI adoption in late 2025 is undeniable, but the growth is structurally uneven and fraught with peril. Success demands a sophisticated, dual-focus strategy that balances immediate revenue generation with long-term infrastructure defense and talent enablement. As you step into this dynamic environment, keep these actionable insights at the forefront:

Actionable Takeaways for Enterprise Leaders:

The race is on. It’s no longer a sprint for proof-of-concept; it’s a marathon for sustainable, profitable, and deeply integrated enterprise transformation. Are your value metrics strategic enough to fund the next decade of innovation?

Call to Engagement:

What is the single biggest metric your executive team is using to justify AI spend beyond simple cost savings in late 2025? Share your insights below—let’s build a common understanding of what true AI value looks like right now.