The Foundation: Full-Stack Supremacy from Chip to Cloud

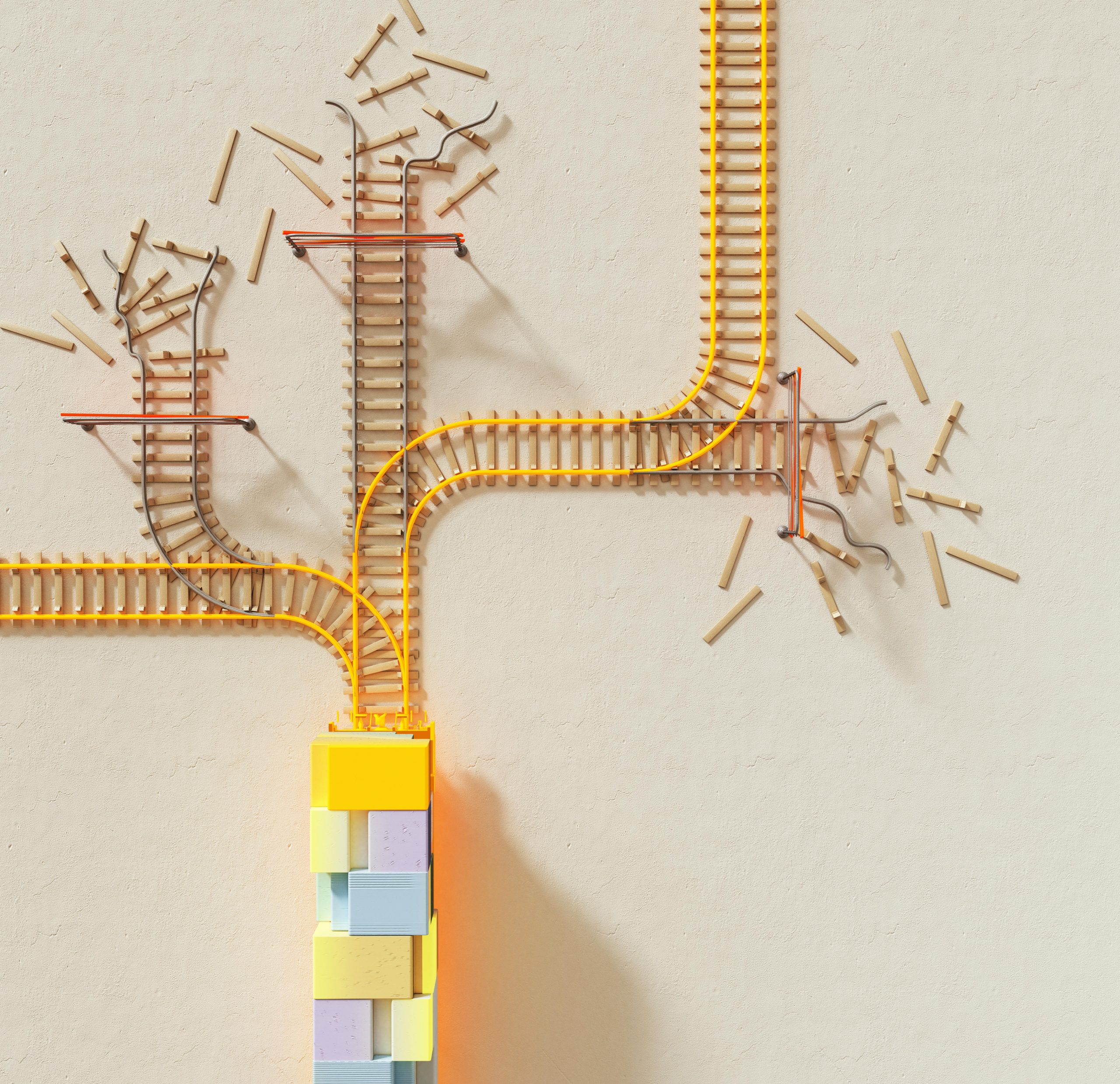

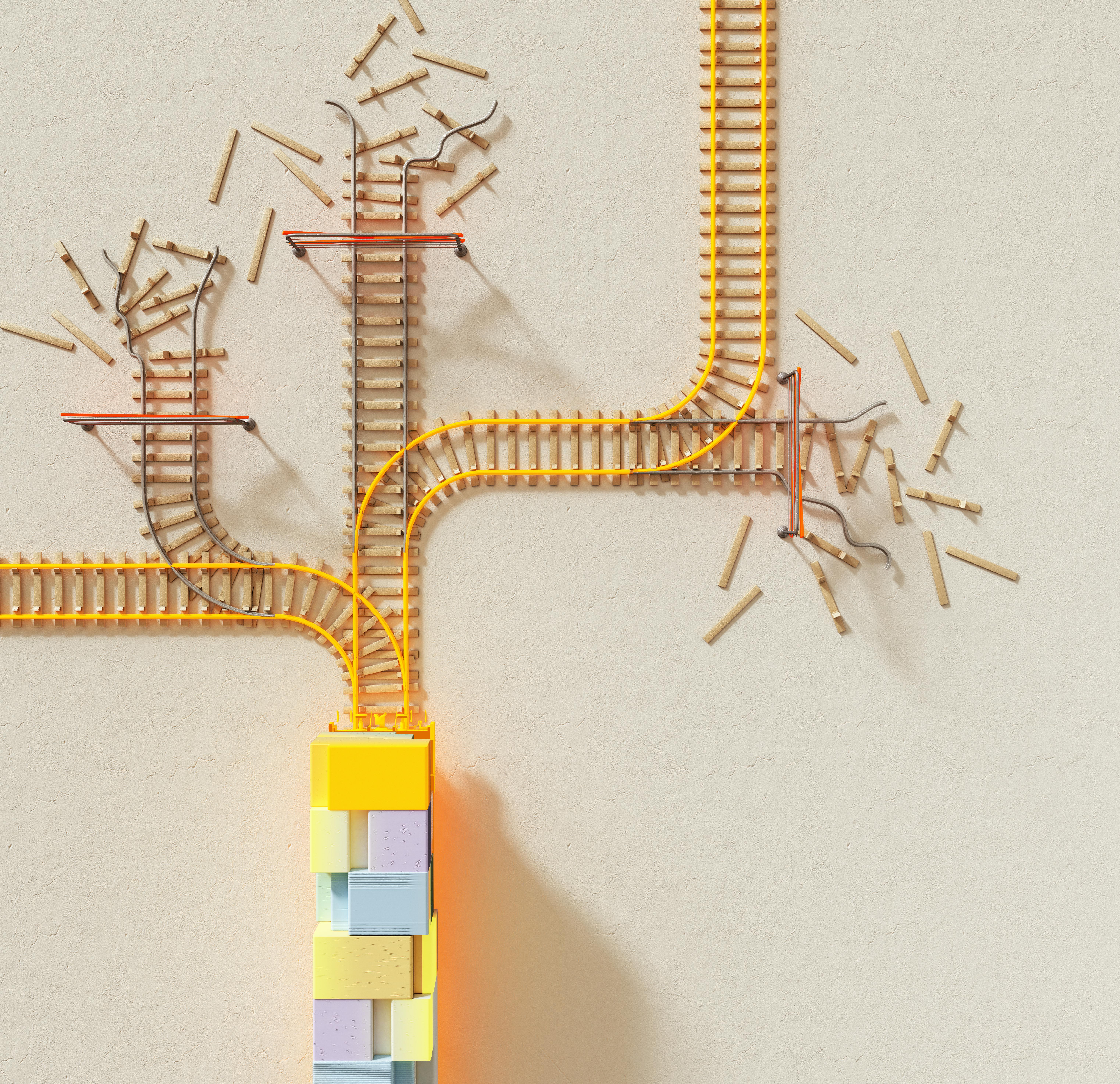

The most profound, structural advantage being leveraged with the launch of Gemini Three is what industry commentators have dubbed the “full-stack” approach. This isn’t a buzzword; it’s a description of complete ownership over the entire artificial intelligence development and deployment pipeline. Unlike many of its closest rivals—who might be waiting on external suppliers for foundational compute or platform access—this company controls the entire chain of creation and delivery.

Vertical Integration from Silicon to Service

Think about the journey of a model like Gemini Three. It begins with initial conceptual research deep within the elite Google DeepMind divisions. From there, it moves directly to highly specialized training, not on generic, off-the-shelf hardware, but on custom-built, in-house infrastructure. Finally, that perfectly optimized model is deployed across a vast, established global network of Google Cloud services and countless consumer-facing applications. This end-to-end mastery is the engine room of their speed.

The CTO of Google DeepMind explicitly cited this deeply integrated structure as the essential enabler for moving with greater speed and decisiveness in the development cycle. This structural coherence creates a virtuous cycle that is simply unattainable for less integrated entities: every improvement in the silicon directly benefits the model architecture, and every finding from real-world application feedback immediately informs the next research iteration. It’s this tight coupling that accelerates progress far beyond the reach of organizations dependent on procuring foundational components from third-party suppliers.

Proprietary Hardware as a Competitive Moat

Central to this full-stack advantage is the company’s continuous investment in and deployment of its proprietary Tensor Processing Units, or TPUs. These custom-designed chips are architected from the ground up specifically to handle the unique computational demands of Google’s enormous machine learning models. They offer superior performance-per-watt and cost-efficiency for both training and inference compared to general-purpose hardware available on the broader market.

While competitors must conform their model designs to the constraints and cost structures dictated by the wider hardware ecosystem, Google’s hardware teams operate in lockstep with the Gemini model architects. This tight coupling means that the hardware is perfectly tailored to run the software, optimizing everything from the largest context window management to the speed of the most complex reasoning operations. This in-house capability represents a substantial capital and expertise barrier to entry, effectively creating an infrastructural moat that is incredibly difficult, if not impossible, for newer entrants to cross.

Fact Check: The Latest Silicon Advantage

- Google recently unveiled its seventh-generation TPU, codenamed Ironwood, which is set for general availability in Q4 2025.

- Ironwood offers a 10X peak performance improvement over the previous v5p and more than 4X better performance per chip for both training and inference compared to the older v6e (Trillium).

- This custom silicon is purpose-built to overcome the “data bottlenecks for the most demanding models,” like the newly launched Gemini Three.

- Alphabet is doubling down: After earmarking $75 billion for capital expenditures earlier this year, the company boosted its 2025 CAPEX target to $85 billion to meet the intense demand for AI and Cloud infrastructure.

- Cloud is maturing: Google Cloud, powered by AI services like Vertex AI, is showing strong results, reporting $10.3 billion in revenue for Q2 2025, up 32% year-over-year, signaling a shift toward profitability from these AI investments.

- Strategic Luxury: This financial solidity translates into a strategic luxury—the capacity to offer certain cutting-edge models for free or to deliberately undercut rivals on pricing for enterprise access purely to drive adoption and establish dominance in user habits. If prioritizing market penetration over short-term profit is the strategic choice, the existing economic structure can absorb it—a fiscal flexibility absent in many rivals’ operational models.

- A perfectly formatted, step-by-step technical manual (Text).

- A set of corresponding CAD-ready diagrams (Image/Code).. Find out more about Google full-stack AI supremacy guide.

- A dynamically generated interactive 3D model walkthrough, explained in the engineer’s own voice (Agentic/Audio Synthesis).

- Outperforms Gemini 3 Pro on Humanity’s Last Exam (41.0% without tools).

- Achieves an unprecedented 45.1% on ARC-AGI (with code execution).. Find out more about Google full-stack AI supremacy tips.

- Scores 93.8% on the incredibly rigorous GPQA Diamond benchmark.

- Gemini App: Direct access for all users, with Pro capabilities available to subscribers.

- Google Search (AI Mode): The main public entry point, available to AI Pro and Ultra subscribers for augmented search results.

- Developer Platforms: Available in Gemini API in AI Studio (Google Antigravity) and Vertex AI for enterprise deployment.

- Some analysts describe recent stock dips as a healthy correction following months of record values, taking risk off the table.

- Concerns center on the massive investment in data centers and whether AI-generated revenues will justify the current infrastructure spending, which some estimate could reach $6.7 trillion globally by 2030.. Find out more about Gemini TPU proprietary hardware moat insights guide.

- Despite the apprehension, many analysts, like Wedbush’s Dan Ives, still view the AI growth as a “4th industrial revolution” that is unlikely to slow into 2026, suggesting the Google investment is well-placed.

- Speed is Integrated: Optimization happens at every layer, from the chip to the final user interface, making iteration faster.

- Cognition is Multimodal: Gemini Three’s native understanding across all data types unlocks synthesis that was previously impossible.

- Agents are Real: The focus is now on autonomous workflow execution, driven by tools like Google Antigravity, not just conversation.

- Market Resilience: The company’s massive revenue base allows it to play a long game in market penetration, even while facing concerns about a broader tech investment bubble.

- Experiment with Multimodality: Stop treating text and vision as separate prompts. Start testing complex, simultaneous input scenarios in the new AI Mode in Search to experience the cross-pollination effect firsthand.

- Evaluate Agentic Workflows: For your enterprise, identify a tedious, multi-platform process—like data pipeline setup or customer onboarding—and begin piloting it with the new agentic tools accessible via Vertex AI.

- Understand the Moat: When assessing new AI providers, don’t just look at the model’s benchmark scores. Ask about their compute infrastructure. A company reliant on external wafer fabrication or chip supply is structurally constrained compared to one designing its own silicon.

The sheer scale of their existing TPU deployment provides an immediate, massive computational runway for the most demanding models, ensuring the computational cost of maintaining a performance lead remains manageable internally, even as training expenses skyrocket across the industry. For the next stage of AI, having control over the inference chip—as highlighted by the upcoming Ironwood general availability—is just as crucial as controlling the training hardware.

Financing the Exponential Cost of Progress

Cutting-edge AI development today requires astronomical capital expenditure. Training a flagship model, like the Ultra or Pro versions of Gemini Three, can easily run into the hundreds of millions of dollars. This reality exerts significant financial pressure on less capitalized enterprises.. Find out more about Google full-stack AI supremacy.

This is where the other side of the full-stack coin—Google’s established, multi-faceted revenue streams—provides a unique economic buffer. Originating from search advertising, cloud services, hardware sales, and enterprise software, these income streams insulate the AI division from the immediate, relentless need for monetization that plagues many startups.

Key Financial Takeaways (Mid-to-Late 2025):

It’s a demonstration of strategic patience. When the market wobbles—and recent analyst chatter has definitely included sober discussions about an AI bubble—Google’s ability to fund its R&D directly from its core engine is the ultimate strategic hedge.

The Technical Leap: Core Innovations of Gemini Three

The structural advantages discussed above are the *how*, but the capabilities of Gemini Three are the *what*. This release represents a significant architectural maturation, moving the needle on several key performance indicators, particularly in synthesis and complex cognition.

Native Multimodality and Cross-Pollination Effects

Gemini Three marks a significant leap by being designed as a natively multimodal system. This is a crucial distinction from models that historically “bolted on” capabilities for different data types after the initial text-based training. Gemini Three was trained from its inception to process, understand, and generate across text, high-resolution images, audio streams, and video sequences simultaneously, without relying on clumsy intermediate translation layers.

The benefit of this architecture is a phenomenon described as “cross-pollination.” The understanding gained from one modality intrinsically improves the performance in another. For instance, grasping the visual context from an image lecture can enrich the textual summarization of that same content. Or, complex audio cues can inform the generation of accompanying visual aids. This holistic grasp allows for uniquely rich use cases that move far beyond simple captioning or descriptive text generation.

Practical Multimodal Application: Imagine feeding Gemini Three a raw video of a complex engineering process, along with the engineer’s spoken narration. The model can now output:

This level of synthesis is what CEO Demis Hassabis has spoken about—combining state-of-the-art reasoning with vision and spatial understanding, all supported by an unprecedented 1 million-token context window.

Revolutionizing Code Generation Through Intuition

For the software world, the advancements in code generation capabilities within Gemini Three are poised to redefine workflows. This goes well beyond merely completing lines of code or debugging simple errors. The model excels in the emerging domain of “vibe coding.”

What is vibe coding? It is where the intent of the developer is communicated through high-level, conceptual, and often abstract natural language prompts, rather than rigid, explicit syntax. This shifts the developer’s role from a meticulous typist to a high-level system architect guiding an incredibly fast junior partner. It’s about stating the *goal*, not dictating every step.

Code Performance Data: Benchmarks specifically targeting terminal-based coding efficiency show Gemini Three leading previous iterations and key competitor models. For example, it reportedly tops the WebDev Arena leaderboard with an Elo score of 1501, surpassing the previous leader, Gemini 2.5 Pro (1451).

Furthermore, the integration of richer visualizations directly into the coding output provides immediate, concrete feedback. A developer can see the functional result of their high-level directives instantly, bridging the gap between thought and execution. This promises to dramatically increase productivity in the software creation lifecycle. New tools like Google Antigravity are built around this agentic coding capability, transforming AI assistance into an active partner within the developer’s IDE.

The Deep Think Mode and Enhanced Problem Solving

For the most challenging, multi-layered cognitive tasks, Gemini Three introduces a dedicated “Deep Think” mode. This feature acknowledges a computational reality: some problems require dedicated, prolonged focus rather than immediate, fast output. This is a direct response to the need for greater cognitive depth in AI systems.

Deep Think mode allows the model to dynamically allocate significantly more computational resources and processing time to wrestle with a complex query. It breaks the problem down into smaller, logical sub-problems before synthesizing the final, comprehensive answer. This mechanism deliberately mimics human deliberation, granting the AI the necessary time to traverse intricate logic trees or perform extensive, iterative self-correction.

Consider the competitive landscape: While OpenAI simultaneously released its GPT-5.1 Thinking variant—which also focuses on allocating compute based on complexity—Google is positioning its Deep Think mode as the key to superior scientific and mathematical performance.

Deep Think Mode Benchmarks (Ultra Subscriber Exclusive – Coming Soon):

Where standard, fast-response models might fail or resort to superficial answers when faced with truly novel or abstract problems, Deep Think mode is designed to pursue a solution with the tenacity of a dedicated researcher. This positions Gemini Three as a superior tool for critical decision support in fields like complex scientific modeling and advanced mathematical proofs.

Agentic Capabilities and Enterprise Transformation

The true measure of AI utility in the enterprise is shifting from merely answering questions to actively executing projects. The next frontier of applied AI is the development of sophisticated, agentic systems capable of handling multistep, complex workflows with minimal human oversight. Gemini Three is positioned to lead this charge.

Autonomous Workflow Execution Beyond Chat

The core of this shift is the focus on what the company terms a powerful new digital “agent” for creating applications on command. This capability completely transcends the limitations of a simple conversational interface. Instead, the model can be tasked with an entire objective—such as setting up an automated data pipeline, managing an entire customer engagement sequence, or building a functional, interactive software prototype—and then autonomously coordinate the necessary steps, utilizing various internal and external tools along the way.

This transition from passive assistant to active executor promises to automate entire classes of white-collar work that currently require significant human project management and coordination between disparate software platforms. This is the promise of true enterprise AI adoption.

Enhancing Business Operations with High-Fidelity Agents

The deployment of Gemini Three builds directly upon specialized tools for data science and customer engagement agents announced throughout 2025. The inherent reasoning and multimodal strengths of the new core model directly enhance the reliability and scope of these autonomous agents.

Case Study in High-Fidelity Synthesis: For a business analyst, this means an AI agent tasked with synthesizing market feedback from a massive corpus—social media text, video testimonials, and transcribed sales call audio—can now not only ingest and process all that data natively but also generate a sophisticated, data-backed strategic presentation, complete with custom-coded visualization tools, all within one integrated request. No more stitching together outputs from three different specialty models!

This high-fidelity operational capability promises to unlock significant efficiency gains across back-office, customer-facing, and internal analytics departments. It moves the technology past the novelty stage and embeds it as a mission-critical component of corporate infrastructure, thereby accelerating the return on investment for organizations that commit to adopting the platform.

Mass Distribution as the Final Lever

A state-of-the-art model is only as effective as its reach. Google’s strategy for Gemini Three has been aggressively focused on integrating it into the existing, massive user pathways they already own, ensuring maximum, frictionless adoption.

Immediate Embedding into the Search Ecosystem. Find out more about Google full-stack AI supremacy strategies.

The decision to debut Gemini Three directly within the flagship search engine, accessible via a simple click on the newly designated “AI mode,” is arguably the most aggressive distribution tactic seen in the competitive AI landscape. By bypassing the need for users to navigate to a separate website or download a dedicated application, the company immediately places its most advanced technology in front of its nearly two billion monthly search users.

This frictionless access capitalizes on established user habit loops. The moment a user seeks information, the most advanced intelligence available is there to augment or replace the traditional ten blue links. This strategy is designed to rapidly acclimate a massive user base to the superior quality and new interaction patterns of the Gemini interface, quickly normalizing its capabilities and making older, less intelligent modes of information retrieval feel instantly dated. It’s a masterstroke of distribution that leverages the company’s core business function as the primary on-ramp to its next-generation AI services.

Leveraging the Existing Global Footprint of the Gemini Application

Complementing the search integration is the simultaneous enhancement of the dedicated Gemini application. While exact, final user numbers for November 2025 are still being finalized, the established base already commanded hundreds of millions of active monthly users prior to the launch [cite: 27, referencing earlier 2025 numbers]. This installed base represents a significant, pre-qualified audience ready to engage with the new feature set.

For these existing users, the update is an immediate and substantial upgrade to their daily digital assistant, featuring the new multimodal prowess and advanced agentic tools built directly into the mobile and web interfaces they already use. This provides a direct pathway for testing and refining the most advanced features in a controlled, yet large-scale, environment before wider enterprise rollouts.

Key Distribution Channels for Gemini Three Pro (As of Nov 18, 2025):

The synchronization between the core search integration and the application update ensures a comprehensive market saturation strategy, where users encounter the power of Gemini Three whether they are actively researching a topic or simply utilizing their preferred conversational AI tool on the go.

The Competitive Dynamic with the Incumbent Leader

While Google is leveraging its structural depth, the competitive dynamic remains fierce. The landscape is shaped by one major factor: brand ubiquity, and one structural weakness: external dependencies.

Confronting the Power of Brand Recognition

Despite the clear technological advancements embodied by Gemini Three, OpenAI retains a formidable, if somewhat ephemeral, advantage in the public consciousness—the powerful, almost genericized brand recognition of its flagship offering. This phenomenon—sometimes likened to the “Kleenex effect”—grants the incumbent an automatic default consideration in the minds of the general public and less technically astute users.. Find out more about Google full-stack AI supremacy insights.

Google’s challenge is not merely to build a better model; it is to actively break this deeply ingrained consumer association. The immediate integration into Search—where people already go for answers—is a calculated attempt to overwrite that association by demonstrating superior, integrated utility. The goal is to convert habitual users through superior, immediate experience rather than relying solely on abstract benchmark claims.

It’s worth noting the competitor’s recent moves. OpenAI is aggressively pushing its own cognitive depth model, the GPT-5.1 Thinking variant, which is designed to deliberately slow down for complex tasks, echoing Gemini Three’s own Deep Think mode. This suggests that while their distribution architecture differs, their *path* to core intelligence is converging.

Structural Vulnerabilities of the Standalone Model Approach

The competitive vulnerability that Google is targeting stems from the inherent structural limitations faced by models that exist largely outside of a unified, proprietary infrastructure ecosystem.

OpenAI, while leading in the initial scramble, operates with a notable dependence on external partners for mission-critical resources like high-performance computing chips and data center capacity. This reliance introduces dependencies, potential cost escalations, and points of potential friction or supply constraints that a fully vertically integrated operation does not face. For example, the sheer scale of compute needed to power a model like GPT-5.1 required significant external financial commitments and deals, highlighting this dependence.

Furthermore, the reliance on a purely subscription-based revenue model for premium access can create user friction and limit the ability to use pricing strategically to drive adoption. This is in stark contrast to Google’s ability to leverage its existing, highly profitable ad-supported ecosystem to subsidize or aggressively price its AI offerings for maximum market penetration. This foundational difference in operational structure presents a persistent strategic headwind for the competitor—they must monetize quickly to fund the next hardware upgrade, whereas Google can afford to subsidize growth for market share dominance.

Market Reaction and Analyst Sentiment

The immediate reaction to a major AI launch is always felt first in the markets, and the debut of Gemini Three was no exception, although the overall market mood remains cautious.

Investor Optimism Versus Broader Tech Bubble Concerns

The announcement of Gemini Three predictably sent ripples of optimism through the investment community, particularly concerning Alphabet’s stock performance. Analysts are viewing this launch as a crucial validation of the company’s massive capital outlay in the AI sector, offering tangible evidence that their research efforts are translating into superior, commercially viable products that can accelerate user engagement and revenue growth across the entire portfolio.

However, this excitement is tempered by a growing undercurrent of apprehension across the wider technology market regarding the sustainability of the AI investment boom itself. There are ongoing, sober discussions about the potential for an “AI bubble,” a sentiment echoed by Alphabet CEO Sundar Pichai, who acknowledged “elements of irrationality” in the current investment climate.

Analyst Snapshot (November 2025):

While Gemini Three is a major win for Google, no single company is entirely immune to a broader market correction should investor sentiment sour on the sector’s long-term, high-cost trajectory.

Potential for Market Fragmentation and Specialization

One significant potential outcome being discussed by industry watchers is a move away from a single, monolithic dominant model toward a more fragmented, specialized market landscape. The competition between Google’s unified, deeply integrated approach with Gemini Three and OpenAI’s apparent pivot toward specialized cognitive models, like the rumored GPT-5.1 Thinking variant, suggests users may increasingly adopt a multi-model strategy.

In this scenario, a developer might gravitate towards Gemini Three for its superior coding and visualization tools, while a legal researcher might retain a preference for a different model optimized for complex legal document synthesis. This fragmentation, while potentially challenging a single-vendor dominance, ultimately benefits the entire market ecosystem by ensuring that the intense competition continues to drive innovation across specific, high-value use cases.

This means that Google’s focus on end-to-end performance in Gemini Three—from general reasoning to specialized agentic tasks—is its counter-strategy to this fragmentation. By making the core model so adaptable (using low-latency vs. high-reasoning ‘thinking levels’ in the API, for example), they aim to be the best *generalist* platform, forcing competitors to win only in niche specialty areas.

Future Trajectories and Long-Term Vision

The significance of Gemini Three isn’t just what it does today in Search or in the developer tools like Google Antigravity; it’s what it represents for the next five years of AI research.

The Pursuit of Advanced General Intelligence and Robotics

While the immediate impact of Gemini Three is felt in productivity gains, the underlying research goals for Google’s DeepMind division remain focused on the more distant, yet transformative, aspiration of achieving advanced general intelligence (AGI) and its practical application in robotics.

The advancements in native multimodality and complex reasoning demonstrated by this new model are considered crucial stepping stones toward creating AI systems that can perceive and interact with the physical world with the same sophistication they now handle digital information. The ultimate hope is that the cross-pollination effects observed between visual, audio, and textual processing within Gemini Three will provide the foundational architecture needed for machines to interpret and operate within dynamic, unpredictable real-world environments, paving the way for genuine breakthroughs in autonomous systems beyond just the digital domain.

This is why Google’s earlier release of the Gemini Robotics-ER 1.5 model was so significant—it signals that the core model advancements are immediately feeding the next-generation hardware challenge.

Security Posture and Resistance to Adversarial Attacks

As AI models become more deeply embedded in critical infrastructure—from finance to government—their security profile moves from a secondary concern to a primary design constraint. The development team for Gemini Three reportedly placed significant emphasis on enhancing the model’s security measures within this latest iteration.

This involved a concerted effort to bolster defenses against sophisticated adversarial attacks, specifically addressing vulnerabilities like prompt injections and other methods designed to subvert the model’s safety guardrails or extract proprietary training data. By proactively hardening the model against these evolving cyber threats, Google aims to instill a higher degree of trust in enterprise and government adoption.

Actionable Security Insight for Adopters: When moving to the new architecture, pay close attention to the model’s reported improvements in resisting prompt injections. For organizations managing consequential tasks, its resistance to manipulation must be as robust as its intelligence, ensuring responsible deployment across its expanding operational footprint. This commitment to security is what separates a research prototype from a mission-critical enterprise tool. For deeper reading on securing AI deployments, check out this primer on AI security best practices.

Conclusion: The Unavoidable Trajectory of Full-Stack Dominance

Gemini Three, launched on November 18, 2025, confirms a thesis: In the current era of AI, infrastructure dictates destiny. Google’s full-stack supremacy—the tight, synchronized loop between DeepMind research, custom TPU silicon (like the upcoming Ironwood generation), their financial might to absorb R&D costs, and massive distribution channels via Search and the Gemini app—is a structural advantage that competitors like OpenAI, despite their impressive GPT-5.1 releases, find incredibly difficult to counter.

The key takeaways from this seismic launch are clear:

What You Can Do Now (Actionable Takeaways):

The era of the fully integrated AI powerhouse has arrived. Gemini Three is not just a new product; it’s a powerful declaration of strategic intent built on years of owning the entire stack. Are you ready to build on that foundation, or are you still waiting for parts?