The Dual Edge: Navigating Emerging Security Threats and Unforeseen Dangers

With great computational power comes great potential for misuse. The convergence that promises to cure diseases and unlock clean energy also provides unprecedented tools for coercion and systemic harm. As we observe the progress in quantum hardware throughout 2025, we must equally focus on the darker potential that intersects with national security and the very foundations of civic order.

The Looming Specter of Neuroscience-Informed Weaponization

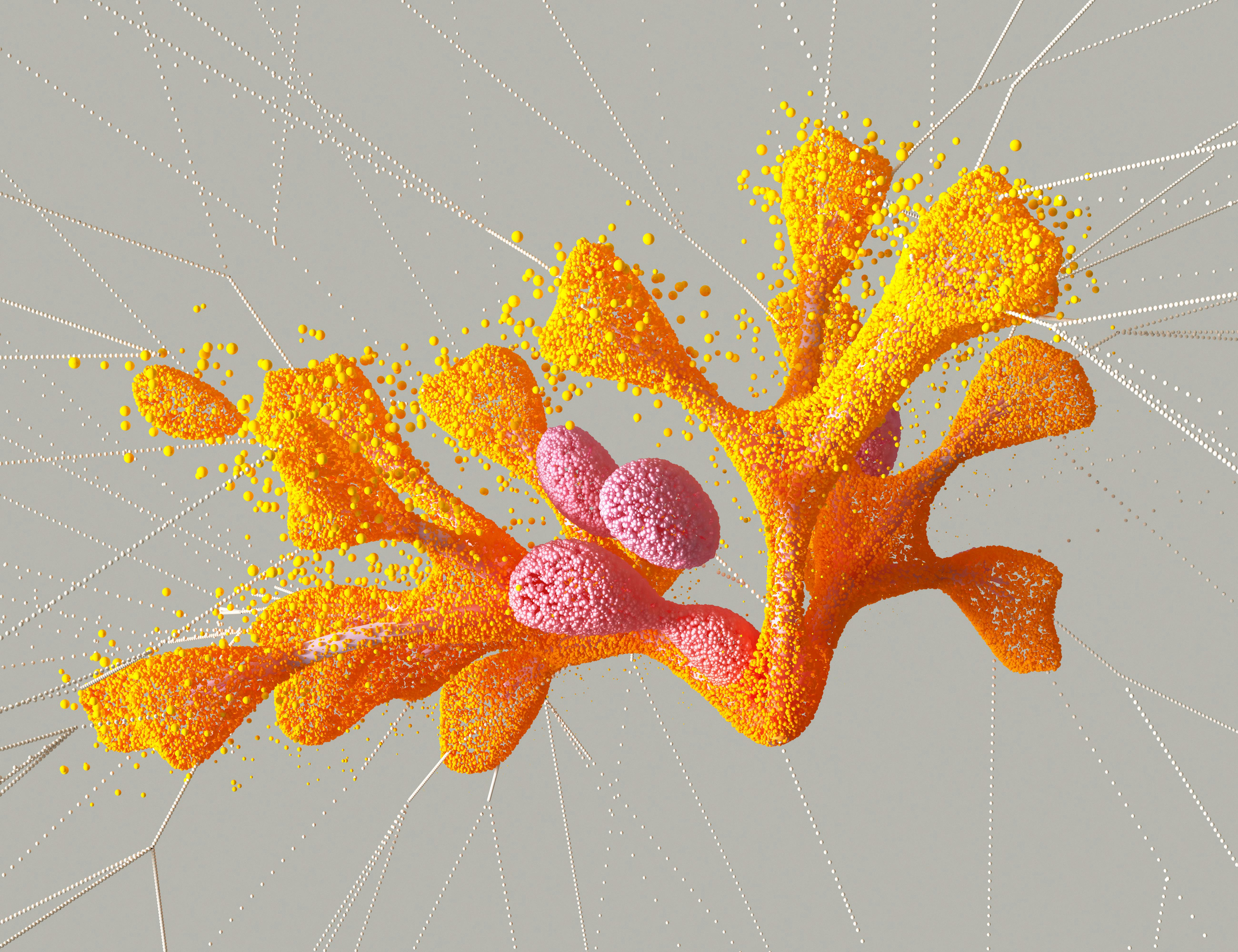

Perhaps the most alarming emergent threat discussed in urgent research circles this year involves the frightening combination of sophisticated AI, advanced pharmacology, and contemporary neuroscience. This isn’t just surveillance; this is about the mind itself becoming a target. Leading researchers are issuing stark warnings about the accelerating feasibility of developing what are being termed “brain weapons”.

The convergence sharpens the ability to model and interact with the central nervous system (CNS) with alarming precision. Experts warn that tools capable of subtly influencing human consciousness, memory function, or behavior are rapidly moving from the realm of speculative fiction into tangible military planning.

“We are entering an era where the brain itself could become a battlefield,” noted a recent warning from Bradford University academics who urge immediate international action. The danger lies in the growing accessibility and precision of tools designed to sedate, confuse, or coerce the CNS.

The historical precedent of Cold War programs built around CNS-acting agents is now amplified by AI’s modeling capabilities, creating tools that could be used for disorientation or incapacitation with a precision previously unattainable. This necessitates immediate, coordinated international efforts to establish ethical and legal safeguards, as existing arms control treaties appear ill-equipped to manage this new frontier.

Addressing Algorithmic Bias in Electoral and Judicial Systems

While the threat of weaponized neuroscience is emerging, the dangers of deployed, flawed AI in civic sectors are already visible in 2025. The practical deployment of Artificial Intelligence in sensitive areas—the justice system, bail hearings, and electoral information processing—has laid bare critical vulnerabilities related to ingrained bias.

For example, the infamous COMPAS risk assessment tool, used in courts across the U.S. to predict reoffending, has been shown repeatedly to disproportionately label Black defendants as “high risk” compared to white defendants with similar histories. This is the core problem: algorithms trained on historical legal decisions that reflect systemic prejudice inevitably perpetuate those same injustices.

The problem is also mathematical. Researchers have proven an “impossibility theorem” showing that *no algorithm* can simultaneously achieve equal predictive value, equal false-positive rates, and equal false-negative rates across groups when the base rates of the outcome differ between those groups, except in the degenerate case of a perfect predictor. This means some form of disparity is not an accidental bug; it is an inevitable outcome of deploying these tools without deliberate, rigorous intervention that puts fairness metrics ahead of mere efficiency.
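The constraint can be checked with a few lines of arithmetic. The sketch below is illustrative only: it uses the identity FPR = p/(1-p) × (1-PPV)/PPV × (1-FNR), which follows directly from the confusion-matrix definitions, and the base rates, PPV, and FNR values are hypothetical numbers chosen for the example, not figures from any real tool.

```python
def implied_false_positive_rate(base_rate, ppv, fnr):
    """False-positive rate forced by the confusion-matrix definitions
    once the base rate, predictive value (PPV), and false-negative rate are fixed."""
    return base_rate / (1 - base_rate) * (1 - ppv) / ppv * (1 - fnr)


# Hypothetical setup: the tool is tuned so both groups get the same PPV and FNR.
ppv, fnr = 0.6, 0.3

# Hypothetical base rates of the predicted outcome in each group.
fpr_group_a = implied_false_positive_rate(base_rate=0.5, ppv=ppv, fnr=fnr)
fpr_group_b = implied_false_positive_rate(base_rate=0.3, ppv=ppv, fnr=fnr)

print(f"Group A false-positive rate: {fpr_group_a:.3f}")
print(f"Group B false-positive rate: {fpr_group_b:.3f}")
```

With equal predictive value and equal false-negative rates, the two false-positive rates come out different because the base rates differ. Equalizing them would require giving up parity on one of the other two measures.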

The practical dangers include:

- Opacity (The “Black Box”): When proprietary systems are used, neither judges nor defendants can truly scrutinize *how* a risk score was generated, raising serious due process concerns—it becomes “an accuser the defendant cannot face”.

- Hallucinations and Falsehoods: In electoral forecasting, AI’s tendency to generate convincing but entirely false information (“hallucinations”) threatens the integrity of information processing that influences civic decisions.

- Feedback Loops: If a biased AI leads to stricter sentencing for a group, that group will show higher future incarceration rates, generating data that reinforces the algorithm’s *original* bias—a self-perpetuating cycle of prejudice.
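The feedback-loop problem in the last item can be illustrated with a toy simulation. Everything here is an assumption made for the example: two hypothetical groups behave identically (same true rate), scrutiny is allocated in proportion to last round's recorded rates, and new records scale with scrutiny. Real systems are far messier; the point is only to show how an initial gap in the data can sustain itself.

```python
def simulate_feedback(recorded, true_rate, rounds):
    """Toy loop: scrutiny follows last round's recorded rates,
    and the next round's records follow scrutiny."""
    history = [dict(recorded)]
    for _ in range(rounds):
        mean_recorded = sum(recorded.values()) / len(recorded)
        # Each group is watched in proportion to its recorded rate.
        scrutiny = {g: r / mean_recorded for g, r in recorded.items()}
        # Identical true behaviour, scaled by how closely each group is watched.
        recorded = {g: true_rate * s for g, s in scrutiny.items()}
        history.append(dict(recorded))
    return history


# Hypothetical starting data: group_b's records are inflated by past bias.
history = simulate_feedback(
    {"group_a": 0.20, "group_b": 0.25}, true_rate=0.20, rounds=10
)

for step in (0, 1, 10):
    rates = history[step]
    ratio = rates["group_b"] / rates["group_a"]
    print(f"round {step}: recorded-rate ratio B/A = {ratio:.3f}")
```

Under these assumptions the gap in recorded rates never closes, even though both groups have the same underlying behaviour. The data keeps confirming the disparity that produced it.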

This has rightly spurred calls for standardized scientific evaluation and transparency *before* these tools influence decisions affecting life, liberty, and democracy. This is a moment where administrative efficiency cannot trump verifiable accuracy and fairness.

For more on the ongoing debate around regulating these tools, look into the latest guidance on algorithmic justice in government.

The Quantum Race: Investment Momentum and Geopolitical Realities

The stakes are too high for any nation or major corporation to sit on the sidelines, and the 2025 investment landscape reflects this urgency. The global quantum computing market is projected to grow substantially, with estimates placing it between USD 1.8 billion and USD 3.5 billion in 2025 alone. More telling is the venture capital surge: the first three quarters of 2025 saw USD 1.25 billion in quantum computing investments, more than double the figure for the previous year.

Major institutional players are cementing their commitments. JPMorgan Chase, for instance, announced a massive USD 10 billion investment initiative specifically naming quantum computing as a core strategic technology. Governments worldwide poured in USD 3.1 billion in 2024, largely tied to national security and the race for technological sovereignty.

Vendor Ecosystems and the Battle for Standardization

The technological landscape is a fascinating mix of consolidation and intense competition. While large tech giants like IBM and Google continue to push qubit counts—IBM planning a multi-chip system incorporating 4,158 qubits in 2025—smaller, specialized players are capturing market share through performance fidelity. IonQ, for example, was the only quantum company named to the 2025 Deloitte Technology Fast 500, demonstrating revenue growth of nearly 2000% from 2021 to 2024, driven by accelerating platform adoption.

However, as detailed earlier, raw qubit count isn’t the only metric. The focus is shifting to utility, which relies heavily on the hybrid integration discussed previously. The open-source movement, supported by tools like Qiskit and PennyLane, is fighting the potential “Quantum Divide”—the risk that this immense power becomes concentrated only in the hands of a few wealthy entities. The push for open standards like NVQLink, adopted by supercomputing centers internationally, is a direct response to ensuring interoperability as different hardware types (trapped-ion, superconducting, photonic) mature.
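To make “hybrid integration” concrete, here is a minimal sketch of the loop at the heart of most hybrid quantum-classical workflows: a classical optimizer repeatedly asks a quantum device to evaluate a parameterized circuit, then updates the parameters. It does not use Qiskit or PennyLane. The quantum step is replaced by its known analytic result for a single qubit (the expectation of Z after an RY(theta) rotation on |0> is cos(theta)), and the function names, starting angle, and learning rate are assumptions chosen for illustration.

```python
import math


def expectation_z(theta):
    """Stand-in for the quantum step: <Z> after RY(theta) applied to |0>,
    which equals cos(theta). On real hardware this would be a circuit run."""
    return math.cos(theta)


def parameter_shift_gradient(theta):
    """Gradient obtained from two extra circuit evaluations at shifted angles
    (the parameter-shift rule), with no finite-difference approximation."""
    shift = math.pi / 2
    return 0.5 * (expectation_z(theta + shift) - expectation_z(theta - shift))


def minimize(theta=0.4, learning_rate=0.4, steps=60):
    """Classical gradient descent steering the (simulated) quantum evaluation."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, expectation_z(theta)


theta_opt, energy = minimize()
print(f"theta = {theta_opt:.4f}, <Z> = {energy:.4f}")
```

The classical side does all the bookkeeping and optimization, while the quantum side only answers “what is the expectation value at this angle?”. That division of labor is why middleware and orchestration matter as much as qubit counts.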

Geopolitical Strategy: Securing the Digital Frontier

The stakes in the quantum race are not purely commercial; they are deeply geopolitical. Nations are leveraging this technology not just for R&D but for ensuring future cybersecurity and national competitiveness. The ability to harness quantum computing to potentially break current public-key cryptography is forcing an urgent pivot toward Post-Quantum Cryptography (PQC) across critical infrastructure—a necessary defense in this new era. The race is on to secure data *today* against the cryptographic threats of tomorrow.
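A practical first step in that pivot is simply knowing where vulnerable cryptography lives. The sketch below is a hypothetical triage helper, not an official tool: the system names and inventory format are invented for the example. The classification reflects that RSA, Diffie-Hellman, and elliptic-curve schemes are the public-key algorithms exposed to Shor's algorithm, while ML-KEM, ML-DSA, and SLH-DSA are the algorithms NIST standardized in FIPS 203, 204, and 205.

```python
# Public-key algorithms that rely on factoring or discrete logarithms.
QUANTUM_VULNERABLE = ("RSA", "DSA", "DH", "ECDH", "ECDSA", "ED25519", "X25519")
# NIST-standardized post-quantum algorithms (FIPS 203, 204, 205).
POST_QUANTUM = ("ML-KEM", "ML-DSA", "SLH-DSA")


def classify(algorithm):
    """Return a triage label for one algorithm name such as 'RSA-2048'."""
    name = algorithm.upper()
    # Check post-quantum names first so 'ML-DSA' is not mistaken for 'DSA'.
    if name.startswith(POST_QUANTUM):
        return "post-quantum"
    if name.startswith(QUANTUM_VULNERABLE):
        return "migrate"
    # Symmetric ciphers, hashes, and unknown names need a human decision.
    return "review"


# Hypothetical inventory entries for illustration.
inventory = [
    {"system": "vpn-gateway", "algorithm": "RSA-2048"},
    {"system": "code-signing", "algorithm": "ECDSA-P256"},
    {"system": "archive-encryption", "algorithm": "AES-256"},
    {"system": "pilot-tls", "algorithm": "ML-KEM-768"},
]

report = {item["system"]: classify(item["algorithm"]) for item in inventory}
for system, label in report.items():
    print(f"{system}: {label}")
```

A real inventory would also need to capture key lifetimes and how long the protected data must stay confidential, since long-lived secrets are the ones most exposed to “harvest now, decrypt later” collection.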

If you’re interested in the defensive side of this equation, you should review the latest government advisories on post-quantum cryptography standards.

Preparing for the Quantum-AI Future: Actionable Insights for Leaders

The convergence of quantum computing and intelligence is no longer a future event to monitor; it is a present reality that requires strategic organizational shifts. Leaders in every sector must adopt a proactive, multi-faceted strategy. Waiting for “perfect” hardware means you will miss the early, high-value advantage.

Key Takeaways for Navigating 2025 and Beyond

We’ve covered the rapid operationalization of hybrid systems and the serious ethical shadows these technologies cast. Here are the most crucial, actionable steps you can take right now:

- Build Hybrid Fluency, Not Just Quantum Purity: Focus talent development on the middleware and orchestration required for hybrid quantum-classical workflows. The immediate ROI is in using the classical side of the system smarter, informed by quantum possibilities.

- Demand Algorithmic Auditing: For any AI deployed in sensitive civic or high-stakes decision-making processes (HR, Finance, Legal), mandate verifiable, standardized audits that test explicitly for disparate impact across demographic groups. Demand transparency into training data and decision logic; refuse to implement “black box” systems where rights are at stake.

- Prioritize Defensive Cybersecurity Now: Assume that cryptographically significant quantum computers are a five-to-ten-year prospect, not a twenty-year one. Begin inventorying your cryptographic assets and planning the migration to PQC protocols immediately, as this transition takes years to implement across large organizations.

- Engage Ethical Foresight: Establish internal working groups—not just engineers, but ethicists, legal counsel, and social scientists—to monitor dual-use technologies, especially those at the AI/Neuroscience interface. Treat emergent ethical risks with the same rigor as you treat cybersecurity threats.
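For the auditing step in the list above, one simple screening check is the disparate impact ratio: the lowest group's rate of favorable outcomes divided by the highest group's. The sketch below assumes the “four-fifths” threshold (0.8) used as a rule of thumb in US employment-selection guidance; the group labels and decision counts are hypothetical, and a ratio like this is a starting flag, not a complete fairness audit.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Share of favorable outcomes per group.
    `decisions` is an iterable of (group, favorable: bool) pairs."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        favorable[group] += int(outcome)
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    # If no group ever receives a favorable outcome, there is no gap to measure.
    return 1.0 if highest == 0 else min(rates.values()) / highest


# Hypothetical audit sample: 100 decisions per group.
decisions = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 42 + [("group_b", False)] * 58
)

ratio = disparate_impact_ratio(decisions)
flagged = ratio < 0.8  # assumed four-fifths threshold
print(f"disparate impact ratio = {ratio:.2f}, flagged = {flagged}")
```

In this made-up sample the ratio falls below the threshold, so the system would be flagged for closer review of its training data and decision logic.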

The pace of change is staggering. The quantum ecosystem is maturing rapidly, with standardized hardware ecosystems beginning to emerge. Ignoring this convergence is no longer a neutral choice; it is a choice to lag behind in innovation and a choice to be unprepared for the new security landscape.

Conclusion: The Era of Calculated Computation

The story of Frontier Technology Convergence in late 2025 is one of profound duality. On one hand, we have tangible, demonstrable speed-ups in materials science and drug discovery, powered by the symbiotic relationship between classical logic and quantum probability. We have the engineering solutions—like NVQLink—bridging the gap between noisy quantum devices and powerful GPUs, moving us from a “hype cycle” to a commercial reality.

On the other hand, we are faced with urgent, deeply unsettling ethical and security challenges. The looming threat of neuroscience-informed weaponization demands proactive global governance to protect the human mind. Simultaneously, the biased outputs of deployed AI systems in our courts and civic spheres threaten to erode the very concept of fairness under the law, demanding rigorous, human-centered accountability.

The lesson for all of us navigating this world—whether you are a researcher, a CEO, or simply a concerned citizen—is clear: this technology requires calculated computation. It demands that we apply our most rigorous analytical skills not just to the physics of a qubit, but to the ethics of its application. The future is being built right now, node by node, in the hybrid systems that are just beginning to show their power. The key to thriving in this era is preparation grounded in awareness of both the unparalleled promise and the critical perils.

What steps is your industry taking *today* to prepare for cryptographic collapse or to ethically govern your own deployed AI systems? Share your thoughts in the comments below—the conversation around responsible AI governance is the most important one we can have right now.

For further background on the security implications of these converging fields, consult this overview of AI security threats.