The Second Essential Winner: The Engine of Accelerated Computing—Nvidia’s Unwavering Reign

No discussion about artificial intelligence compute in 2026 is complete without acknowledging the company that essentially defined the hardware standard for the current generation of deep learning. Despite the market rotating away from some of the most speculative “picks and shovels” plays of the prior year, the fundamental dominance of this incumbent hardware architect remained absolutely intact. When you are building the engines that run the world’s most complex models, you hold a position of almost unassailable power.

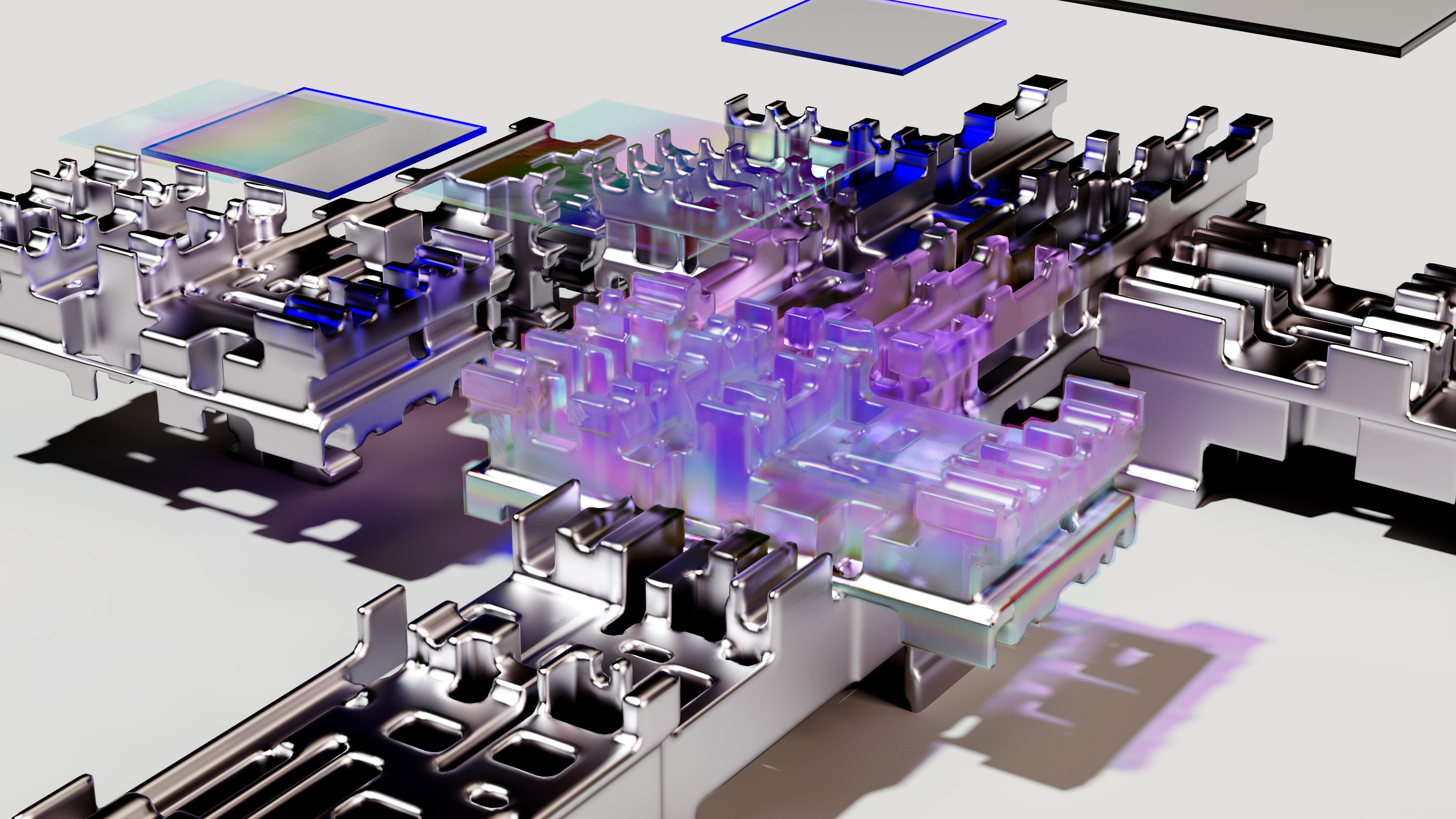

The Continued Supremacy of the GPU Ecosystem and Product Roadmaps

Nvidia, whose Graphics Processing Unit (GPU) architecture became synonymous with modern machine learning, maintained its pivotal role throughout late 2025 and into the first quarter of 2026. Its integrated ecosystem of hardware (the GPU silicon), software (like CUDA), and development tools created a moat deeper than any we’ve seen in modern chip history. Concerns did circulate that customer lock-in, or the rise of custom silicon (like Google’s TPUs or new specialized accelerators), could erode its market share over the very long term. But the short-to-medium-term reality in 2025 and now in early 2026 was stark: its latest products, the Blackwell generation systems, were still largely sold out, and its installed base was running at effectively full utilization across major data centers. Hyperscalers were, and still are, desperate for capacity.

The real story, however, isn’t just about keeping up; it’s about staying ahead. The sheer scale of investment being deployed is staggering. For context, the five largest US cloud and AI infrastructure providers—Microsoft, Alphabet, Amazon, Meta, and Oracle—have collectively committed to spending between $660 billion and $690 billion on capital expenditure in 2026, nearly doubling 2025 levels. The vast majority of that capital is directed toward AI compute, data centers, and networking. This spending validates Nvidia’s strategy, as their hardware remains the default choice for training the next generation of massive models.

From Sold Out to Scale: Navigating Utilization Rates and New Hardware Launches

The key to Nvidia’s continued success, and the reason the market has been willing to overlook any temporary valuation stretching, is its aggressive, clockwork product cycle. The company is not resting on the success of Blackwell; it is already signaling that it intends to capture the *next* wave of spending through superior performance metrics, such as better energy efficiency per computation.

That forward-looking roadmap is what keeps hyperscalers pre-paying for capacity.

This relentless innovation means that as hyperscalers spend a collective figure nearing $700 billion in 2026 alone, a significant portion of that capital is earmarked for upgrading to the next generation of Nvidia’s specialized hardware just to stay competitive with their peers. Nvidia’s ability to keep raising the bar for GPU performance makes it the indispensable partner in this infrastructure race.

Actionable Takeaway for Investors: The moat isn’t just the chip; it’s the ecosystem. Watch how successfully Nvidia integrates its new CPU and DPU offerings alongside the GPU, as system-level integration is now valued as highly as raw chip-level performance gains.

The Third Essential Winner: The Infrastructure Builders Securing Long-Term Contracts

Beyond the semiconductor design and fabrication layers, the physical construction and hosting of the computational power presented another incredibly lucrative avenue for investors seeking direct exposure to the infrastructure spending boom. This segment often comes with contracts offering superior revenue stability, the holy grail in a high-growth, high-capex sector.

Pivoting to AI: The Transformation from Crypto Mining to Data Center Hosting

A notable category of winners included companies that had successfully pivoted their core business model to serve the immediate, burgeoning need for physical data center capacity optimized for artificial intelligence workloads. The story of this pivot is one of remarkable strategic alignment. One company that exemplifies this shift is Applied Digital. Having previously focused on infrastructure for cryptocurrency mining, a sector notorious for its volatility, the company rebranded and repurposed its assets to become a specialized provider of co-location and hosting services tailored to AI compute clusters.

This pivot aligned their physical footprint directly with the hyperscalers’ urgent need for specialized, high-density power and cooling solutions. They aren’t just building generic server farms; they are engineering specialized environments—high-power density campuses designed from the ground up to handle the thermal output of thousands of high-end GPUs.

This transition has translated into concrete, verifiable contracts as of February 2026.

The urgency of the need is so great that these capacity providers are effectively securing revenue streams years before construction is even completed. This is a direct play on the *commitment* to AI, not just the immediate deployment.

The Security of Subscription Revenue: Locking in Multi-Year Commitments

What made this positioning particularly attractive was the nature of the contracts secured. Think about it: most semiconductor sales are transactional. A hyperscaler buys chips, deploys them, and when it needs more, it places a new order, often subject to availability. Specialized data center hosting arrangements work differently: they are long-term capacity reservations.

This stability is precisely what caught the eye of savvy capital allocators. Unlike transactional hardware sales, these multi-year commitments offer revenue visibility that functions almost like a subscription service, even though the end product is physical space and power. This visibility, coupled with the reported explosive year-over-year sales growth that these companies are experiencing as their first phases come online, offers a compelling risk-adjusted profile: a direct play on the massive AI infrastructure spending boom with the financial stability of a utility-like service agreement. Many of these firms are now on the verge of achieving (or have already achieved) GAAP profitability, fueled entirely by these forward-looking, committed contracts.
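To make the "subscription-like" visibility concrete, here is a minimal Python sketch that totals the remaining contracted revenue on a book of capacity reservations and expresses it as a multiple of current annual revenue. The `CapacityContract` type and every figure below are hypothetical, and the sketch assumes flat annual payments with no escalators, renewals, or cancellations.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CapacityContract:
    """A hypothetical capacity reservation with flat annual payments."""
    annual_revenue_musd: float  # contracted revenue per year, millions of USD
    remaining_years: float      # years left on the term


def contracted_backlog(contracts: List[CapacityContract]) -> float:
    """Total remaining contracted revenue (no escalators, renewals or churn)."""
    return sum(c.annual_revenue_musd * c.remaining_years for c in contracts)


def visibility_multiple(contracts: List[CapacityContract], annual_revenue_musd: float) -> float:
    """Backlog expressed as a multiple of current annual revenue."""
    return contracted_backlog(contracts) / annual_revenue_musd


# Hypothetical book: $250M/yr with 15 years left, $150M/yr with 10 years left.
book = [CapacityContract(250.0, 15), CapacityContract(150.0, 10)]
print(contracted_backlog(book))          # 5250.0
print(visibility_multiple(book, 400.0))  # 13.125
```

Real contracts usually include ramp schedules and escalators, so a flat-payment model like this is only a first approximation of revenue visibility.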

Practical Tip for Evaluating Infrastructure Plays: Don’t just look at current bookings; look at the *average duration* of those contracts and the *Power Usage Effectiveness (PUE)* rating of the facility. A long-term lease (15+ years) in a power-efficient, high-density facility (such as the 1.18 PUE cited for the Polaris Forge 2 campus) signals superior long-term operational leverage.
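Both metrics in this tip reduce to simple arithmetic. PUE is total facility energy divided by the energy delivered to IT equipment, so everything above 1.0 is overhead (cooling, power conversion, lighting). For "average duration", one common convention is to weight each contract's remaining term by its annual value. The sketch below assumes a hypothetical 100 MW IT load and hypothetical contracts; only the 1.18 PUE comes from the text, and the 1.5 comparison point is illustrative.

```python
def facility_power_mw(it_load_mw: float, pue: float) -> float:
    """PUE = total facility power / IT power, so total = IT load * PUE."""
    return it_load_mw * pue


def overhead_mw(it_load_mw: float, pue: float) -> float:
    """Non-IT power (cooling, power conversion, lighting) implied by the PUE."""
    return it_load_mw * (pue - 1.0)


def weighted_avg_term(contracts):
    """Remaining term weighted by annual contract value.

    contracts: iterable of (annual_value, remaining_years) pairs.
    """
    contracts = list(contracts)
    total_value = sum(value for value, _ in contracts)
    return sum(value * years for value, years in contracts) / total_value


# Hypothetical 100 MW of IT load at the cited 1.18 PUE vs. an illustrative 1.5.
for pue in (1.18, 1.5):
    print(f"PUE {pue}: {facility_power_mw(100.0, pue):.0f} MW total, "
          f"{overhead_mw(100.0, pue):.0f} MW overhead")

# Hypothetical book: $300M/yr with 15 years left, $100M/yr with 5 years left.
print(weighted_avg_term([(300.0, 15), (100.0, 5)]))  # 12.5
```

Weighting by value keeps a small short-term contract from dragging down the average as much as a simple mean of terms would.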

Broader Opportunities Beyond the Primary Three: A Look at the Extended Ecosystem

While the immediate, most heavily discussed winners were those providing the foundational hardware (GPUs) and the physical space (data centers), the capital deployment is so vast (the global AI infrastructure market is approaching USD 90 billion in 2026) that it has created significant tailwinds for numerous other specialized technology providers across the data stack. Savvy investors recognized that the investment flood would lift nearly all well-positioned boats within the AI supply chain.

Memory Makers Riding the Data Wave: The Crucial Role of High Bandwidth Components

If the GPU is the engine, High Bandwidth Memory (HBM) is the fuel line—and right now, the fuel line is the bottleneck. Data movement and storage proved to be just as critical as processing power. The complex calculations required by large models, especially as they move toward multi-step reasoning, necessitate incredibly fast, low-latency access to memory. This demand created a self-sustaining “memory super cycle” driven almost entirely by artificial intelligence requirements.
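The "fuel line" bottleneck can be illustrated with the roofline model, which caps attainable throughput at the lesser of peak compute and memory bandwidth times arithmetic intensity (FLOPs performed per byte moved from memory). The accelerator specs and the two intensity values below are illustrative assumptions, not figures for any real product; low intensity is roughly the regime of small-batch, token-by-token inference, where each weight fetched from memory is used only a few times.

```python
def attainable_tflops(peak_tflops: float, mem_bw_tb_s: float, intensity: float) -> float:
    """Roofline model: min(peak compute, memory bandwidth * arithmetic intensity).

    intensity is FLOPs per byte moved; TB/s * FLOP/byte = TFLOP/s.
    """
    return min(peak_tflops, mem_bw_tb_s * intensity)


# Illustrative accelerator (not a real product): 1000 TFLOP/s peak, 4 TB/s memory.
PEAK_TFLOPS, MEM_BW_TB_S = 1000.0, 4.0
ridge = PEAK_TFLOPS / MEM_BW_TB_S  # 250 FLOP/byte: below this, memory is the limit

for workload, intensity in [("small-batch decode", 2.0), ("large batched matmul", 500.0)]:
    perf = attainable_tflops(PEAK_TFLOPS, MEM_BW_TB_S, intensity)
    bound = "memory" if intensity < ridge else "compute"
    print(f"{workload}: {perf:.0f} TFLOP/s ({bound}-bound)")

# Doubling memory bandwidth doubles memory-bound throughput; peak compute is untouched.
print(attainable_tflops(PEAK_TFLOPS, 2 * MEM_BW_TB_S, 2.0))  # 16.0
```

In the memory-bound regime only more bandwidth (for example, a faster HBM generation) raises throughput; extra compute sits idle until intensity crosses the ridge point.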

Companies specializing in High Bandwidth Memory (HBM), a specialized type of DRAM, have seen their revenue streams accelerate dramatically. As data centers scaled up their GPU farms throughout 2025 and continue to do so in 2026, demand for faster, higher-capacity memory, both stacked directly on the accelerator package and in server RAM, grew exponentially. Nvidia’s Rubin platform, due later this year, for example, is explicitly dependent on the arrival of HBM4.

This is not a minor upgrade; it’s foundational. It benefits the market leaders in this niche, which have captured a significant share of the global memory market because their fabrication processes are advanced enough to meet the stringent density and speed requirements of the newest accelerators. For those looking for leverage in the chip space outside the main GPU designers, monitor the HBM packaging specialists: they are the quiet enablers.

Key Insight: The memory hierarchy is now the primary constraint determining when the next generation of compute clusters can be deployed. Understanding High Bandwidth Memory supply trends is as important as tracking GPU availability.

Networking Infrastructure: Connecting the Massive Compute Clusters

Finally, consider the sheer scale of modern artificial intelligence clusters. These aren’t just a few servers huddled together; they are sprawling architectures comprising thousands of interconnected processors that must behave as one cohesive unit. This scale places an immense premium on the networking hardware that allows these processors to communicate efficiently. Slow networking creates bottlenecks that negate the speed of the most advanced chips; it’s like putting a Formula 1 engine in a car with bicycle tires.

Consequently, companies providing high-performance, low-latency Ethernet switches and the associated software layers to connect these sprawling clusters were positioned for substantial growth. While the GPU designer often supplies the interconnect as part of its own platform (like InfiniBand), a major, profitable trend in 2025 was the increasing adoption of high-speed, open-standard Ethernet in back-end AI networks, a direct move by hyperscalers to avoid vendor lock-in and increase choice.

This competition is a massive tailwind for Ethernet networking players. The total addressable data center networking market is projected to hit around $120 billion by 2028. As companies roll out 400G and 800G platforms now, they are already planning for 1.6T platforms in the near future, directly tying their growth prospects to the continued, multi-year capital commitment from the hyperscalers. Their technology became the essential medium for ensuring that the billions invested in compute could actually be harnessed effectively across the entire data center architecture, making them a quiet, but powerful, winner in this great capital deployment.
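Why link speed matters for clustered training can be sketched with the bandwidth term of a ring all-reduce, a common collective for synchronizing gradients: each GPU sends roughly 2(N-1)/N times the payload over its link. The payload size and GPU count below are hypothetical, and the model deliberately ignores latency, congestion, topology, and overlap with computation.

```python
def ring_allreduce_seconds(payload_bytes: float, n_gpus: int, link_gbps: float) -> float:
    """Bandwidth-only estimate of one ring all-reduce.

    Each GPU sends 2 * (N - 1) / N of the payload; latency and congestion are ignored.
    """
    bytes_per_second = link_gbps * 1e9 / 8  # Gbit/s -> bytes/s
    traffic_bytes = 2 * (n_gpus - 1) / n_gpus * payload_bytes
    return traffic_bytes / bytes_per_second


# Hypothetical: 10 GB of gradients synchronized across 1,024 GPUs each step.
for gbps in (400, 800, 1600):
    t = ring_allreduce_seconds(10e9, 1024, gbps)
    print(f"{gbps}G link: {t * 1000:.0f} ms per all-reduce")
```

Under these assumptions the communication time halves with each step from 400G to 800G to 1.6T, which is the bandwidth headroom that keeps expensive accelerators from idling between steps.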

Checklist for the Network Layer: Look for providers whose high-speed Ethernet platforms are being deployed in the “back-end” (server-to-server) AI fabric, as this is where the biggest performance payoff—and therefore, the highest demand—currently resides.

The Extended Ecosystem: Where Investment Flow Creates Secondary Winners

The capital deployment isn’t just concentrated; it’s so vast that it creates massive, indirect opportunities. When the five largest US tech companies are projecting nearly $700 billion in infrastructure spending for 2026, it lifts all boats equipped with the right specialized parts. This flood of cash demands excellence across the entire stack, from the power delivery systems to the cooling apparatus.

Power and Cooling Specialists: The Unsung Heroes of Thermal Density

It’s easy to focus on the silicon, but that silicon generates ferocious heat. Older data centers simply cannot handle the thermal density of modern AI racks, which can exceed 100 kilowatts per rack, compared to historical averages of 10-20 kW. This creates a massive opportunity for companies specializing in the two areas these racks depend on: power delivery and cooling.
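The rack-density figures above translate directly into cooling load, because nearly all the electrical power drawn by IT equipment ends up as heat. The sketch below compares a 100 kW AI rack with 10 kW and 20 kW legacy racks, then estimates the water flow needed to carry that heat away using Q = m_dot * c_p * dT. The water loop and the 10 K temperature rise are illustrative assumptions, not a description of any specific facility.

```python
WATER_CP_J_PER_KG_K = 4186.0  # approximate specific heat of liquid water


def legacy_rack_equivalents(ai_rack_kw: float, legacy_rack_kw: float) -> float:
    """How many legacy racks' power budget a single AI rack consumes."""
    return ai_rack_kw / legacy_rack_kw


def coolant_flow_kg_per_s(heat_kw: float, delta_t_k: float) -> float:
    """Mass flow needed to remove heat_kw with a coolant temperature rise of delta_t_k.

    From Q = m_dot * c_p * dT, so m_dot = Q / (c_p * dT).
    """
    return heat_kw * 1000.0 / (WATER_CP_J_PER_KG_K * delta_t_k)


ai_rack_kw = 100.0  # treat the rack's full electrical draw as heat
for legacy_kw in (10.0, 20.0):
    ratio = legacy_rack_equivalents(ai_rack_kw, legacy_kw)
    print(f"one AI rack = {ratio:.0f} legacy {legacy_kw:.0f} kW racks")

flow = coolant_flow_kg_per_s(ai_rack_kw, delta_t_k=10.0)
print(f"water needed: {flow:.2f} kg/s (about {flow * 60:.0f} L/min) per rack")
```

Under these assumptions a single rack needs roughly 2.4 kg/s of water, which illustrates why high-density campuses are engineered around power and cooling from the ground up.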

This is where you find plays that blend high-tech specialization with critical utility-like stability. Securing power and the right cooling strategy is a multi-year decision, often involving multi-decade contracts, mirroring the revenue stability seen in the hosting segment.

Software and Orchestration: Managing the Complexity

When you have thousands of GPUs communicating across thousands of switches, the management software layer becomes a high-value proposition. It’s no longer enough to have fast hardware; you need intelligent orchestration software to dynamically allocate resources, manage job scheduling across heterogeneous hardware (older generations mixed with the newest ones), and monitor performance metrics like tokens-per-watt efficiency.
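A toy version of this orchestration problem: given GPU pools of different generations, place each job on the most energy-efficient pool that still has room, where efficiency is tokens per joule (what "tokens per watt" measures when throughput is in tokens per second). The pool names, throughput, and power figures are hypothetical, and real schedulers also weigh interconnect topology, memory capacity, priority, and preemption.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class GpuPool:
    """A hypothetical pool of identical GPUs (all figures are assumptions)."""
    name: str
    free_gpus: int
    tokens_per_s: float  # per-GPU throughput on the target workload
    watts: float         # per-GPU power draw

    @property
    def tokens_per_joule(self) -> float:
        # (tokens/s) / (J/s) = tokens per joule, i.e. "tokens per watt"
        return self.tokens_per_s / self.watts


def place_job(pools: List[GpuPool], gpus_needed: int) -> Optional[str]:
    """Greedy placement on the most energy-efficient pool that can fit the job."""
    candidates = [p for p in pools if p.free_gpus >= gpus_needed]
    if not candidates:
        return None  # no pool fits: the caller would queue the job
    best = max(candidates, key=lambda p: p.tokens_per_joule)
    best.free_gpus -= gpus_needed  # reserve the GPUs
    return best.name


pools = [
    GpuPool("older-gen", free_gpus=512, tokens_per_s=1000.0, watts=700.0),
    GpuPool("newer-gen", free_gpus=64, tokens_per_s=3000.0, watts=1000.0),
]
print(place_job(pools, 32))    # newer-gen: 3.0 vs ~1.43 tokens per joule
print(place_job(pools, 64))    # older-gen: newer-gen has only 32 GPUs left
print(place_job(pools, 1024))  # None: nothing fits
```

The greedy rule keeps the newest hardware busy first and spills onto older generations only when it is full, which is one simple way to mix generations in the same fleet.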

Look at the demand for tools that manage the entire AI factory pipeline. Whether it’s software for optimizing the complex interplay of memory, network fabric, and compute for massive model training runs or platforms designed for governance and deployment across hybrid cloud environments—the tooling market is expanding rapidly. The investment in hardware is forcing a corresponding, mandatory investment in the AI software stack to unlock the value of that hardware.

Conclusion: Mapping the Permanent Infrastructure of Artificial Intelligence

The investment story of 2025, carried into 2026, is clear: the massive capital deployment into artificial intelligence is not ephemeral; it is building permanent, high-density infrastructure. The market has sorted itself, revealing three core strata of essential winners.

The reality today, February 13, 2026, is that compute capacity is supply-constrained across the board: demand outstrips what can be delivered. This imbalance is what fuels the premium for those who can deliver performance *now* (Nvidia’s current chips) or those who can guarantee power and space *soon* (the infrastructure builders). Every dollar spent by the hyperscalers is an implicit endorsement of this physical and architectural structure.

Your Next Step: Don’t chase the hype on the next *software* layer that might change tomorrow. Instead, examine the long-term contracts, the power agreements, and the silicon roadmaps that are locked in for the next five years. Those are the companies building the digital concrete foundations of our AI future. What part of this resilient infrastructure are you positioned to benefit from?