The Core Thesis: Reallocating Capital from People to Processing Power

The financial story animating the current corporate environment is one of stark resource migration: a fundamental, non-negotiable shift in the cost structure of a dominant technology platform. The massive, multi-year commitment to secure the silicon and build the data centers necessary for advanced artificial intelligence, a commitment now estimated to push fiscal first-quarter 2026 CapEx toward $35 billion, cannot be funded by simply printing money or absorbed entirely by current operating income. Something has to give, and that “something” is the legacy cost structure.

The Aggressive Cost Structure Pivot

The core mechanism is beautifully, if brutally, simple from a spreadsheet perspective: reduce the fixed and variable costs associated with human capital to partially offset the massive capital expenditure on fixed, long-lived assets. The salary and benefits savings realized from eliminating over 15,000 roles, including a sharp reduction of nearly 9,000 employees in a single summer month, directly reduces the pressure on the bottom line created by the $80 billion build-out. This isn’t about replacing one worker with one AI tool; it’s about eliminating entire administrative layers and legacy roles that the new, automated, capital-intensive intelligence capabilities render redundant or simply unneeded in their previous form. This aggressive rebalancing act is precisely how the company aims to fund its foundational initiative without severely eroding free cash flow in the near term—a dynamic financial analysts are scrutinizing with intense focus.
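To see why the savings only *partially* offset the build-out, it helps to put both on the same annual footing. Capital spending reaches the income statement as depreciation spread over the assets' useful lives, while payroll savings recur every year. The sketch below uses two figures from the text (15,000 roles, $80 billion) and two loudly labeled assumptions: a hypothetical fully loaded cost of $200,000 per role and a hypothetical six-year straight-line life for the whole outlay. It illustrates the arithmetic; it is not an estimate of the company's actual numbers.

```python
# Annual payroll savings vs. annual depreciation of the build-out (illustrative only).

ROLES_ELIMINATED = 15_000        # from the text: "over 15,000 roles"
CAPEX_USD = 80_000_000_000       # from the text: the $80 billion build-out
COST_PER_ROLE_USD = 200_000      # ASSUMPTION (hypothetical): fully loaded annual cost per role
USEFUL_LIFE_YEARS = 6            # ASSUMPTION (hypothetical): straight-line life for all assets


def annual_savings(roles: int, cost_per_role: float) -> float:
    """Recurring operating expense removed each year by the job cuts."""
    return roles * cost_per_role


def annual_depreciation(capex: float, life_years: int) -> float:
    """Straight-line depreciation: the outlay is expensed evenly over its useful life."""
    return capex / life_years


savings = annual_savings(ROLES_ELIMINATED, COST_PER_ROLE_USD)
depreciation = annual_depreciation(CAPEX_USD, USEFUL_LIFE_YEARS)
print(f"Annual payroll savings: ${savings / 1e9:.1f}B")
print(f"Annual depreciation:    ${depreciation / 1e9:.1f}B")
print(f"Share offset:           {savings / depreciation:.0%}")
```

Under these assumptions the cuts cover roughly a fifth of one year's depreciation, which fits the "partially offset" framing; the ratio scales in direct proportion to either assumption.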

The Death of Headcount as a Growth Metric

For decades, especially in the tech sector, the primary indicator of market aggression and expanding service capacity was simple: headcount growth. More engineers, more sales staff, more support personnel—that meant expansion. That entire metric has been completely recalibrated. The new benchmark for market aggression is the sheer, tangible volume of capital poured into physical and virtual AI infrastructure. The scale of this commitment, mirrored by rivals, signals that the physical presence—the computational footprint of data centers—now correlates directly with market leadership potential in the AI domain. This shift in corporate spending benchmarks means that future performance evaluations will be less about the size of the organization on paper and more about the density and sophistication of the computing power under its roof. If you’re tracking enterprise spending trends, you must substitute server racks for cubicles in your mental model of growth.

The Human Cost of Structural Change

While finance models cheer the cost discipline, the human element remains the most significant operational hurdle. CEO Satya Nadella has publicly acknowledged the difficulty, noting that the job eliminations are “weighing heavily” on leadership. This isn’t just boilerplate HR language; it’s a direct confrontation with cultural stability. How do you maintain the innovation velocity required for an $80 billion initiative when the remaining workforce is understandably concerned about their own roles? Navigating this tension, pushing for radical technological advancement while managing the morale and cultural fallout, is the defining operational challenge of this era. For leaders, this means doubling down on transparency about future roles and favoring reskilling over replacement where possible, even as the structural cuts continue elsewhere.

Investor Perception: Navigating Volatility from CapEx Signals

The market has reacted exactly as one might expect when a company announces it is simultaneously spending an estimated $80 billion on infrastructure while shedding thousands of workers: with volatility. The narrative of massive future payoff is powerful, but the immediate financial mechanics (compressed free cash flow and a longer timeline to see returns) introduce tangible short-term risk.

The Whiplash of Short-Term Trading

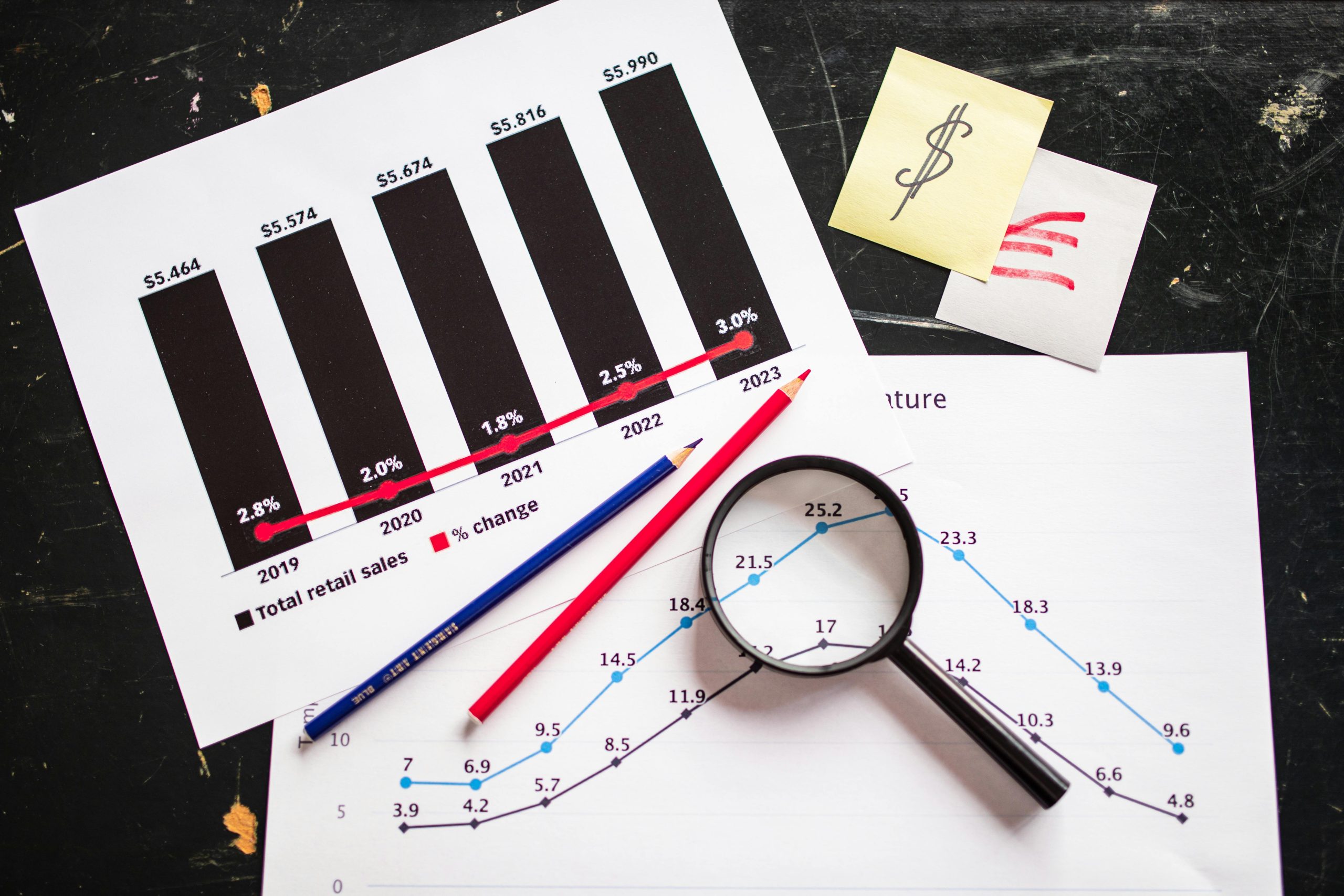

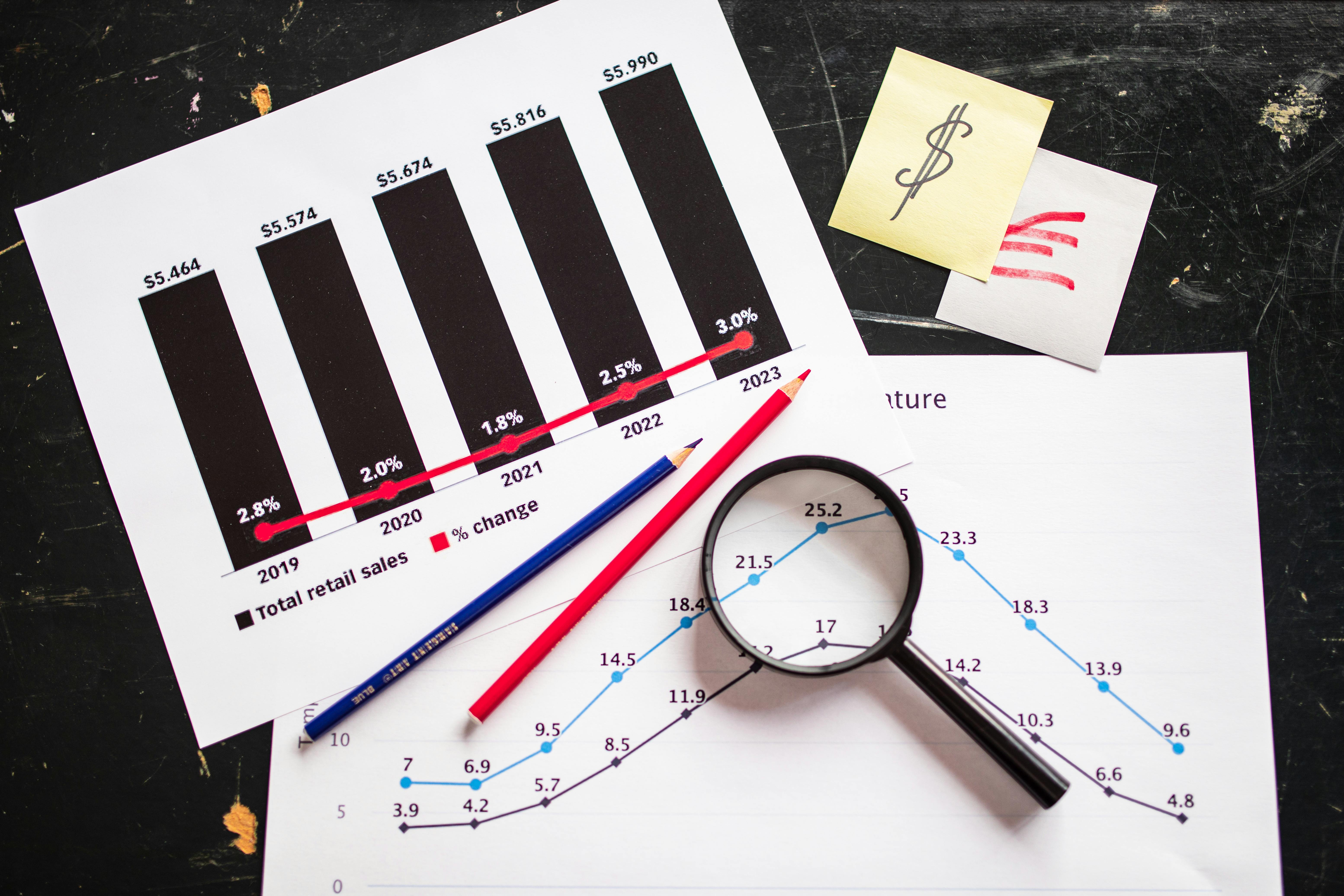

If you’ve watched the stock chart recently, you’ve seen the pattern. Major dips often follow any formal announcement signaling forward-looking capital expenditure requirements for the coming fiscal year. Investors believe in the AI race, but they are increasingly sensitive to the immediate balance sheet impact. It’s the classic tension: do you sacrifice near-term cash flow for long-term market dominance? The fiscal fourth-quarter 2025 earnings showed a company crushing expectations with $76.4 billion in revenue and Azure surging 39% year-over-year. Yet even that good news was tempered by guidance for continued, massive capital investment. The underlying business is extremely strong, with Azure’s annual revenue topping $75 billion, but the cost of *staying* dominant is high, and that makes traders focused on quarterly metrics skittish.

Anchoring Value with Discounted Cash Flow

Long-term investors, however, are taught to look past the daily jitters. The fundamental anchor for valuation remains the long-term Discounted Cash Flow (DCF) projection. When analysts run conservative DCF models stretching out ten years, projecting the cash flows generated by this AI expansion, the resulting intrinsic value suggests the current trading price is sitting at a measurable discount. In late 2025, the average analyst price target hovered around $628 to $629, implying a potential upside of nearly 30%. This analysis is the rationale for long-term holders: the investment made today in infrastructure and restructuring is expected to generate outsized, durable cash flows that justify a significantly higher price later.
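The DCF logic described above can be sketched in a few lines. This is a generic two-stage model (explicit growth for ten years, then a Gordon-growth terminal value), not the method any particular analyst used. The starting cash flow, growth, and discount rates in the usage lines are hypothetical placeholders, as is the $483 share price, chosen only because a $628 target from that level works out to roughly the quoted 30% upside.

```python
def dcf_value(fcf0: float, growth: float, discount: float,
              terminal_growth: float, years: int = 10) -> float:
    """Two-stage DCF: grow FCF for `years`, then capitalize it with a Gordon-growth terminal value."""
    if discount <= terminal_growth:
        raise ValueError("discount rate must exceed terminal growth rate")
    value, fcf = 0.0, fcf0
    for t in range(1, years + 1):
        fcf *= 1 + growth                      # projected cash flow in year t
        value += fcf / (1 + discount) ** t     # discounted back to today
    terminal = fcf * (1 + terminal_growth) / (discount - terminal_growth)
    value += terminal / (1 + discount) ** years
    return value


def implied_upside(target_price: float, current_price: float) -> float:
    """Fractional gain if the stock moved from its current price to the target."""
    return target_price / current_price - 1


# Hypothetical inputs in billions of USD: illustrative only, not a forecast.
value = dcf_value(fcf0=70.0, growth=0.12, discount=0.09, terminal_growth=0.03)
print(f"Illustrative value of projected cash flows: ${value:,.0f}B")
print(f"Upside to a $628 target from an assumed $483: {implied_upside(628, 483):.0%}")
```

The result is highly sensitive to the gap between the discount rate and terminal growth, which is why the "conservative" choice of those two inputs matters so much.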

Bear Case: The Risk of Over-Pricing the Future

The skepticism from the “bear case” narratives is not baseless, and it centers squarely on valuation risk. If the company’s price-to-earnings ratio inflates too far relative to the growth actually realized, or if the highly anticipated revenue acceleration from embedded AI tools fails to materialize at the aggressive rates already priced in, a correction becomes likely. The market is pricing in near-perfect execution even as the company manages intense internal friction from the restructuring. You have to ask: can you successfully deploy $80 billion in complex infrastructure *and* flawlessly execute a cultural transformation at the same time? The bear case says doing both is harder than the bulls admit.
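The bear case rests on simple arithmetic: if price equals earnings per share times the P/E multiple, a stock's return depends on both earnings growth and any change in the multiple. The sketch below uses hypothetical numbers (EPS growing 15% while the multiple contracts from 35x to 28x) to show how solid growth can still produce a negative return when the multiple compresses.

```python
def price_return(eps_growth: float, pe_start: float, pe_end: float) -> float:
    """Price change implied by price = EPS * P/E when both EPS and the multiple move."""
    if pe_start <= 0 or pe_end <= 0:
        raise ValueError("P/E multiples must be positive")
    return (1 + eps_growth) * (pe_end / pe_start) - 1


# Hypothetical scenarios: earnings grow 15% in both; only the multiple differs.
print(f"Multiple de-rates 35x -> 28x: {price_return(0.15, 35, 28):+.1%}")  # earnings up, price down
print(f"Multiple holds at 35x:        {price_return(0.15, 35, 35):+.1%}")  # price tracks earnings
```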

Bull Case: Premium Multiples for AI Primacy

On the flip side, the most confident bulls believe the market is still too conservative. They argue that the sheer scale of AI integration across every product—from cloud to productivity suites—will drive revenue and margins so high that they will justify a market valuation far exceeding current estimates. In this scenario, the massive upfront spending isn’t a risk; it’s the non-negotiable, necessary down payment for securing a multi-decade runway of sustained, double-digit revenue expansion across all major business segments. If the AI adoption curve is as steep as proponents suggest, the company is positioning itself to command premium multiples because it will own the essential computational platform.

The Core Revenue Engines Fueled by Artificial Intelligence

The $80 billion investment isn’t altruistic; it is a targeted deployment designed to supercharge specific, proven revenue streams. The infrastructure build-out is specifically engineered to feed the machines that print money right now, while planting seeds for tomorrow’s growth.

Azure Cloud Services: The Immediate Financial Waterfall

The cloud computing division, Azure, is the immediate, quantifiable beneficiary. As of late 2025, its financial reports clearly demonstrate that a substantial portion of its growth surge—the 39% leap in Q4—is directly attributable to customer consumption of high-powered AI and machine learning services hosted on the platform. This is the most direct translation of CapEx into revenue: the enterprise client needs AI capacity *today* to integrate it into their own business, and they pay top dollar for access to that specialized compute power. This relationship is the bedrock of the financial rationale.

Monetizing Productivity Software with Embedded Copilots

Arguably the most visible part of the monetization machine is the integration of advanced AI assistants, branded as “Copilots,” into ubiquitous software like Microsoft 365 and GitHub. This is the transition from a simple subscription model to a value-added, intelligent service model. The incremental revenue tied specifically to the Copilot licensing tier is projected to reach billions of new annual dollars by the end of the current fiscal year. This strategy shifts the perceived value of the productivity stack from an operational cost to an indispensable productivity multiplier.

- Azure AI Services: High-margin consumption based on GPU hours for training and inference.

- Copilot Licensing: Tiered monetization adding a substantial premium layer to existing subscription revenue.

- Developer Platforms: New revenue from specialized platforms and services catering directly to AI developers.

Proprietary Models vs. Partner Ecosystem Advantage

The strategy to secure revenue is intentionally two-pronged: develop internal, proprietary AI models while simultaneously supporting and integrating the leading third-party models from key partners. This layered approach is critical for financial resilience. It ensures that the company captures value from the infrastructure layer regardless of which specific model architecture—whether internal or external—ultimately achieves broader market dominance. The infrastructure becomes the essential, non-substitutable host layer for nearly all significant AI workloads, a masterstroke in long-term platform strategy.

Strategic Alliances: Locking in the Ecosystem with Compute Power

In the high-stakes global AI race, infrastructure is king, but alliances are the moat. The strategy involves more than just building data centers; it means using that future capacity as leverage to secure long-term commitments from the key players building the AI applications.

The Reciprocal Power of Multi-Billion Dollar Commitments

The partnership ecosystem is built on reciprocal commitments. Leading external AI developers, those pushing the boundaries of generative AI, are making multi-billion dollar commitments to run their immense training and inference workloads on this company’s cloud platform. When a key partner pledges billions in capacity purchases, it effectively guarantees a large volume of future, high-margin revenue for the cloud division. This certainty of demand underpins the financial viability of the initial $80 billion infrastructure outlay, turning a speculative build into a pre-sold capacity expansion.

Securing the Supply Chain Through Hardware Alignment

The strategic alignment extends right down to the physical silicon. By investing strategically and forming deep partnerships with leading chip manufacturers—the makers of the specialized GPUs essential for AI processing—the company secures a prioritized supply chain. In an environment where the most advanced components are constrained, having a guaranteed, ahead-of-rivals allocation advantage dictates the speed at which data centers can be deployed and, consequently, the speed at which revenue can be recognized. This is a pure example of defensive financial maneuvering executed years in advance.

Infrastructure Lock-In for Market Share Dominance

The combination of equity investment, deep model integration, and massive compute purchasing agreements creates a powerful “lock-in” effect for key ecosystem players. The goal is to establish this platform as the default, non-substitutable choice for the world’s most resource-intensive and pioneering artificial intelligence work. This strategy reinforces the long-term utility and, crucially, the demand floor for its cloud services, making it incredibly difficult for customers to ever contemplate switching to a competitor, even if those competitors offer slight short-term price advantages. This infrastructure lock-in is vital for the long-term DCF models to hold true.

Operational Back-End: Taming Unpredictable AI Costs

While the high-level strategy involves massive spending, the granular reality of running AI workloads presents a new set of financial headaches for the customers—and eventually for the company’s own operational efficiency teams. Legacy financial management tools are breaking under the strain.

The Enterprise Challenge of Unpredictable Scaling

For enterprise customers paying for cloud services, AI is fundamentally different from traditional computing. Spinning up a virtual machine is predictable; the cost is fixed by the provisioned time. Running a generative AI model is not. Costs scale with token usage, prompt complexity, and the iterative nature of development, producing significant “forecast variance” that blows past initial budget allocations. For the provider, the same unpredictability shows up as sudden consumption spikes that must be met with capacity built in advance, which is part of why its own build-out, and the layoff savings that help fund it, must be so aggressive.
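The contrast between provisioned-time pricing and token-based pricing shows up clearly in a toy model. All prices below are hypothetical placeholders, not any provider's actual rates. The point is structural: the VM bill depends only on hours provisioned, while the token bill moves with prompt length, output length, and iteration count, so a budget built from a pilot month can be badly off.

```python
VM_RATE_PER_HOUR = 3.00          # ASSUMPTION: hypothetical fixed VM price, USD/hour
PRICE_PER_1K_INPUT = 0.005       # ASSUMPTION: hypothetical USD per 1,000 input (prompt) tokens
PRICE_PER_1K_OUTPUT = 0.015      # ASSUMPTION: hypothetical USD per 1,000 output tokens


def vm_cost(hours: float) -> float:
    """Provisioned-time pricing: cost depends only on how long the VM runs."""
    return hours * VM_RATE_PER_HOUR


def token_cost(input_tokens: int, output_tokens: int) -> float:
    """Usage pricing: cost scales with the tokens actually sent and generated."""
    return ((input_tokens / 1_000) * PRICE_PER_1K_INPUT
            + (output_tokens / 1_000) * PRICE_PER_1K_OUTPUT)


def forecast_variance(actual: float, budget: float) -> float:
    """Overrun (+) or underrun (-) as a fraction of the budget."""
    return (actual - budget) / budget


# A month of always-on VM time costs the same whatever the workload does.
print(f"VM, 730 hours: ${vm_cost(730):,.2f}")

# Hypothetical: budget set from pilot usage; production prompts grow longer and iterate more.
budget = token_cost(input_tokens=200_000_000, output_tokens=50_000_000)
actual = token_cost(input_tokens=520_000_000, output_tokens=140_000_000)
print(f"Tokens: budget ${budget:,.2f}, actual ${actual:,.2f}, "
      f"variance {forecast_variance(actual, budget):+.0%}")
```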

The Necessity of New Financial Operational Metrics (FinOps 2.0)

This new cost reality has rendered old FinOps frameworks obsolete. Forward-thinking enterprises and internal teams are rapidly adopting new Key Performance Indicators specifically tailored for generative AI consumption. These metrics are granular and usage-based, focusing on insights that finance departments have never needed before:

- Tracking the cost associated with every single processed token.

- Calculating the exact proportion of total cloud expenditure dedicated solely to AI functions versus traditional workloads.

- Precise cost allocation between the expensive, up-front model training phase and the real-time inference activities.

- CapEx Efficiency: Is the next $30 billion in CapEx buying capacity that is utilized faster than the previous tranche?

- Copilot Adoption Velocity: Are enterprise rollouts shifting from pilot programs to organization-wide mandates, signaling true usage inflection?

- Talent Retention in Key Areas: Are the specialized AI engineers and data center experts staying engaged despite the broad corporate turbulence?
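The first three metrics in the list above can be computed from a tagged cost export. This sketch assumes a hypothetical tagging scheme in which each cost line is labeled `training`, `inference`, or `traditional`; real billing exports use their own fields. It charges only inference spend to the per-token figure and reports training separately, which is one reasonable design choice among several.

```python
from dataclasses import dataclass


@dataclass
class CostLine:
    workload: str      # hypothetical tag: "training", "inference", or "traditional"
    cost_usd: float


def ai_finops_kpis(lines: list[CostLine], tokens_processed: int) -> dict[str, float]:
    """Token unit cost, AI share of cloud spend, and training/inference split."""
    total = sum(line.cost_usd for line in lines)
    training = sum(line.cost_usd for line in lines if line.workload == "training")
    inference = sum(line.cost_usd for line in lines if line.workload == "inference")
    ai_total = training + inference
    if total == 0 or ai_total == 0 or tokens_processed == 0:
        raise ValueError("need non-zero total spend, AI spend, and token volume")
    return {
        # Inference spend per 1,000 tokens served (training is reported separately below).
        "inference_cost_per_1k_tokens": inference / tokens_processed * 1_000,
        # Portion of the whole cloud bill attributable to AI workloads.
        "ai_share_of_cloud_spend": ai_total / total,
        # How AI spend divides between up-front training and real-time inference.
        "training_share_of_ai_spend": training / ai_total,
    }


# Hypothetical month of spend and token volume.
month = [
    CostLine("training", 400_000),
    CostLine("inference", 250_000),
    CostLine("traditional", 850_000),
]
for name, value in ai_finops_kpis(month, tokens_processed=5_000_000_000).items():
    print(f"{name}: {value:,.4f}")
```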

For CFOs who want to survive this new environment, understanding these modern AI FinOps metrics is non-negotiable.

Governance Pitfalls: Licenses and Hidden Costs

Beyond compute, there are governance and administrative expenses piling up. The widespread rollout of high-cost software licenses, like the various Copilot tiers, is creating allocation waste. Enterprises are finding they provision licenses broadly across departments without a measurable system to track actual usage or determine the ROI per user. This translates directly to wasted expenditure that management must control through stricter provisioning policies. Furthermore, the *hidden* long-term costs—the colossal storage and energy requirements for training datasets—are becoming major components of the total cost of ownership that can no longer be swept under the rug.
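A stricter provisioning policy starts with measuring which seats are actually used, a precondition for any ROI-per-user figure. The sketch below flags seats under an activity threshold and prices the idle ones. The $30 monthly seat price and the four-active-days threshold are illustrative assumptions, and the `Seat` record is a hypothetical stand-in for whatever usage report an organization's admin tooling provides.

```python
from dataclasses import dataclass

SEAT_PRICE_USD_PER_MONTH = 30.0   # ASSUMPTION: illustrative per-seat license price
MIN_ACTIVE_DAYS = 4               # ASSUMPTION: a seat counts as "used" if active on >= 4 of 30 days


@dataclass
class Seat:
    user: str
    active_days_last_30: int      # hypothetical field from a usage report


def license_waste(seats: list[Seat],
                  price: float = SEAT_PRICE_USD_PER_MONTH,
                  min_active_days: int = MIN_ACTIVE_DAYS) -> dict:
    """Utilization, monthly spend on idle seats, and candidates for reclaiming."""
    if not seats:
        raise ValueError("no seats to evaluate")
    idle = [s.user for s in seats if s.active_days_last_30 < min_active_days]
    return {
        "utilization": 1 - len(idle) / len(seats),
        "idle_spend_usd_per_month": len(idle) * price,
        "reclaim_candidates": idle,
    }


seats = [Seat("ana", 22), Seat("ben", 0), Seat("chi", 2), Seat("dee", 15)]
print(license_waste(seats))
```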

Geopolitical Positioning and Corporate Responsibility

This $80 billion investment is not made in an economic vacuum. It is deeply intertwined with national strategy and the responsibility to manage the immense externalities that come with such large-scale infrastructure deployment.

Aligning Private Capital with National Competitiveness

The deliberate choice to allocate over half of that colossal investment to domestic data center construction is a clear alignment of private corporate strategy with broader national interests in maintaining technological supremacy. By ensuring a significant portion of the world’s most advanced AI infrastructure resides within its borders, the company is directly contributing to national capacity for innovation and economic competition. This makes the investment less a pure corporate risk and more a strategic national asset.

Framing AI as the New Foundational Utility

Executive leadership has been masterful in framing this entire revolution. They aren’t just building software; they are building what they view as the next foundational utility, comparing AI’s potential impact to the historical shift brought about by electricity as a general-purpose technology. This narrative elevates the company’s massive capital outlay from a mere corporate endeavor to a necessary, foundational building block for the next generation of economic structure and societal productivity.

The Energy Dilemma: Responsibility and Novel Power Solutions

Running these power-hungry facilities demands an equally aggressive strategy on the environmental and energy front. The deployment must adhere to stated Environmental, Social, and Governance (ESG) commitments, which requires integrating carbon-negative technologies and securing massive amounts of renewable energy. But the scale is so vast that it is forcing exploration of genuinely novel power solutions. One initiative, for instance, involves plans to restart a former nuclear power facility to provide a dedicated, high-capacity, low-carbon energy source for these next-generation AI data centers. When you have to consider nuclear power to run your new office tools, you know the scale of the technological shift is real.

The Long-Term Trajectory and Valuation Debate

For those who weather the short-term volatility, the ultimate question remains: does the math work out? Can the aggressive capital spending ultimately generate the growth necessary to justify the high market expectations?

Consensus: Strong Buy, Significant Upside

Despite market nervousness surrounding the capital outlay, the broad consensus on Wall Street as of November 24, 2025, remains overwhelmingly positive. The stock carries a consensus rating of “Strong Buy” from analysts, with targets ranging up to $730. The median one-year target implies nearly 30% upside from current trading levels. This confidence is rooted in the expectation that the company will successfully execute its AI strategy over the next 12 to 18 months.

The Free Cash Flow Multiplier Effect

The foundation of this long-term conviction is the projected trajectory of free cash flow. Analysts forecast that the current annual figure will more than double by the end of the decade. That projection rests on high expected margins once the heavy up-front capital spending moderates (free cash flow is operating cash flow minus CapEx, so it recovers as the build-out slows) and on sustained revenue growth from cloud and Copilot adoption.
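It helps to translate "more than double by the end of the decade" into an annual growth rate. Assuming a roughly five-year window (late 2025 to 2030, our reading of "end of the decade"), doubling requires a compound annual growth rate of about 14.9%, and "more than double" implies a higher rate. The sketch also shows neighboring horizons for comparison.

```python
def implied_cagr(multiple: float, years: float) -> float:
    """Compound annual growth rate needed to grow by `multiple` over `years`."""
    if multiple <= 0 or years <= 0:
        raise ValueError("multiple and years must be positive")
    return multiple ** (1 / years) - 1


for years in (4, 5, 6):
    print(f"Doubling in {years} years needs {implied_cagr(2.0, years):.1%} per year")
```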

Anticipating the Multi-Year Horizon for ROI

The most important actionable insight for investors is the timeline. The return on this $80 billion infrastructure investment will not be instant or linear. This foundational spending is a multi-year proposition designed for market share dominance and long-term efficiency gains. Short-term quarterly earnings volatility, driven by high upfront costs and depreciation charges in the first few years of deployment, must be anticipated and accepted by anyone holding for the long term. The current price is a bet on 2028 and beyond, not next Tuesday.
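Why the earnings pressure concentrates in the early years becomes clearer with a straight-line schedule. Each year's capital tranche adds a fixed charge for the length of its useful life, so charges stack up while spending continues and fall away only as early tranches become fully depreciated. The tranche sizes and six-year life below are hypothetical, and the sketch simplifies by starting each tranche's depreciation in the year it is spent.

```python
def depreciation_schedule(capex_by_year: list[float], life_years: int) -> list[float]:
    """Total straight-line depreciation expense per year for a series of annual capex tranches."""
    if life_years <= 0:
        raise ValueError("life_years must be positive")
    horizon = len(capex_by_year) + life_years - 1
    schedule = [0.0] * horizon
    for start, amount in enumerate(capex_by_year):
        annual_charge = amount / life_years          # constant charge for this tranche
        for year in range(start, start + life_years):
            schedule[year] += annual_charge
    return schedule


# Hypothetical: three consecutive $80B tranches, each depreciated over six years.
for year, charge in enumerate(depreciation_schedule([80.0, 80.0, 80.0], life_years=6), start=1):
    print(f"Year {year}: ${charge:.1f}B")
```

In this toy schedule the annual charge climbs for three years, plateaus while all three tranches overlap, then tapers, which is the pattern behind the warning to expect early-year earnings pressure.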

Conclusion: The Price of Staying at the Frontier

Financial engineering, in this context, is less about clever accounting and more about hard-nosed resource warfare. The strategy is clear: shed legacy operational costs (headcount) to fund the necessary capital expenditure ($80 billion) required to own the AI platform. The results are already showing—Azure is surging, and Copilot is rapidly monetizing productivity software.

The key takeaway is that the current corporate environment demands an acceptance of this paradox. You cannot lead the next technological wave without both aggressive spending and aggressive optimization of the existing structure. The short-term pain of restructuring and CapEx surprises is the necessary tax for securing the projected multi-year, double-digit revenue expansion. The market, for now, seems willing to pay that tax, as evidenced by the “Strong Buy” consensus.

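Actionable Insight for Leaders and Investors: Do not judge the success of this strategy on next quarter’s operating margin alone. Instead, watch three leading indicators: CapEx efficiency (is each new tranche of spending utilized faster than the last?), Copilot adoption velocity (are pilot programs becoming organization-wide mandates?), and talent retention among specialized AI engineers and data center experts.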

The transformation is underway, and it is both brutal and brilliant. It forces us all to fundamentally rethink what truly drives value in the cloud and AI-first economy.

For a deeper dive into the specifics of cloud capacity management, examine our analysis on global data center supply chain dynamics. How do you see this dual strategy playing out in your industry? Share your thoughts below—we want to hear how this fundamental financial rebalancing is affecting your corner of the tech world.