The Cultural Cost: Knowledge Silos and the New Intellectual Covenant

The final consideration is the broader consequence for employee culture. Divestiture often creates winners and losers, and knowledge consolidation can forge new, harder-to-break silos between those who *manage* the AI and those who *consume* it.

The Emergence of the ‘Model Elite’

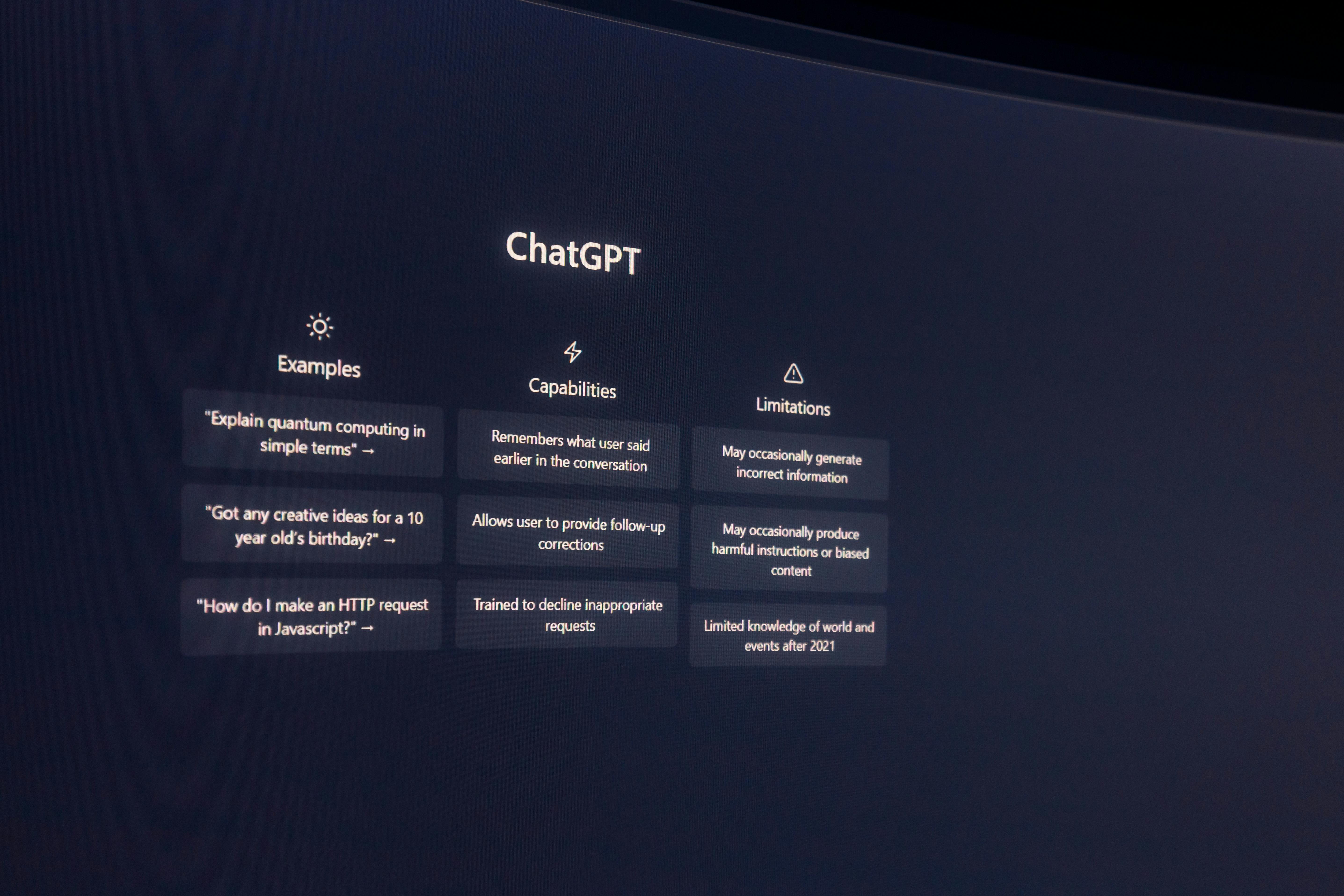

In the old structure, silos were based on departmental function or geography. Now, a new, potentially more powerful silo is forming: the ‘Model Elite’—the small group of data scientists, prompt engineers, and knowledge architects who understand the proprietary system’s inner workings, biases, and governance structure.

Meanwhile, the vast technical workforce is relegated to being users, dependent on a corporate black box owned by a select few entities, a reality that feels antithetical to the spirit of open knowledge. This disparity creates cultural resentment far deeper than a mere organizational chart change. Employees feel they no longer own their expertise; they are merely tenants in a knowledge ecosystem they cannot inspect or audit.

For stewardship to survive, we must break this elitism. This aligns with predictions for knowledge management (KM) in 2026, which stress the need for a human-centric focus where employees can validate AI recommendations and challenge assumptions.

From Management to Stewardship: Recalibrating Accountability

The entire logic of this consolidation—outsourcing coordination and information handling to AI—has profound implications for management itself. As AI systems become increasingly capable of execution monitoring, routine analysis, and coordination, the traditional middle manager role, built on managing information flow, begins to lose its purpose.

What survives, and what leadership must pivot toward, is stewardship. Stewardship is about defining purpose, setting boundaries, and carrying accountability, not coordinating schedules.

The Board’s role, too, must evolve. Instead of micromanaging the process, it must focus on outcomes, risk appetite, and ensuring that a robust data governance framework is in place to handle the complexities of AI liability and data permanence. True corporate stewardship today means understanding that the value isn’t in the *efficiency* of the output, but in the *resilience* and *originality* of the underlying thinking.

Assessing the True Cost: Innovation Velocity Over Quarterly Accounting

The final, most critical evaluation of this monumental change hinges not on the immediate operational cost savings or the *perceived* efficiency gains—those are easily measured on a spreadsheet. The true ledger must account for the long-term impact on innovation velocity and the overall intellectual richness sustained within our technical workforce. This metric, crucial for long-term survival, defies simple quarterly accounting.

The Efficiency Trap: Measuring the Wrong Things

The shift in focus, prioritizing measurable efficiency (headcount, timelines) over intangible gains (learning velocity, institutional memory), is an old trap dressed in new AI clothing. When output looks adequate in the short term, but depth, resilience, and originality suffer, we are optimizing for decline. This tendency to optimize for harvesting over renewal is the executive disease of short-termism.

How do we measure this hidden cost?

- Innovation Velocity: Track the time from a major external scientific breakthrough (identified via non-AI methods) to the first internal patent application or radically new product concept derived from it. A lag that stagnates or lengthens from year to year signals a homogenization problem.

- Critical Thinking Quotient (CTQ): Implement anonymized internal evaluations that test the workforce’s ability to reason through complex, novel problems *without* immediately defaulting to the AI’s first answer. This requires new metrics that link culture to performance.

- Knowledge Path Diversity: Monitor the variety of external sources cited or utilized by successful projects over time. A shrinking diversity indicates the AI’s echo chamber is tightening.
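
Two of these measures, Innovation Velocity and Knowledge Path Diversity, reduce to simple arithmetic once the underlying records exist. The sketch below is one possible way to compute them, not a prescribed method. The function names, the `(breakthrough_date, first_filing_date)` event pairs, and the flat list of cited source names are all hypothetical inputs chosen for illustration. Diversity is expressed here as the exponential of the Shannon entropy of the citation counts, which can be read as an "effective number of sources"; other diversity indices would serve equally well.

```python
import math
from collections import Counter
from datetime import date
from statistics import median


def innovation_lag_days(breakthrough: date, first_internal_filing: date) -> int:
    """Days from an external breakthrough to the first internal filing or concept."""
    return (first_internal_filing - breakthrough).days


def median_lag_by_year(events):
    """Median lag in days, grouped by the year of the external breakthrough.

    `events` is an iterable of (breakthrough_date, first_internal_filing_date)
    pairs. This record layout is an assumption made for illustration.
    """
    by_year = {}
    for breakthrough, filing in events:
        lag = innovation_lag_days(breakthrough, filing)
        by_year.setdefault(breakthrough.year, []).append(lag)
    return {year: median(lags) for year, lags in sorted(by_year.items())}


def effective_source_count(cited_sources):
    """Effective number of distinct sources: exp(Shannon entropy) of citation counts.

    Returns 1.0 when every citation points to the same source, and N when
    citations are spread evenly over N sources. An empty input returns 0.0.
    """
    counts = Counter(cited_sources)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    entropy = -sum((n / total) * math.log(n / total) for n in counts.values())
    return math.exp(entropy)
```

Under these assumptions, a median lag that rises from one year to the next, or an effective source count that falls across successive reporting periods, would be the kind of early signal the list above describes. Neither number means much in isolation; the trend is what matters.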

Successful organizations in this new era are those that create balanced human-tech systems that enhance rather than replace human capabilities. They invest in continuous learning and development, recognizing that talent retention depends on creating meaningful work that leverages new technology rather than work that is replaced by it.

Practical Counsel: Fortifying the Intellectual Foundation

The goal is not to dismantle the Skilling Hub; that ship has sailed. The goal is to build a sturdy intellectual moat around it. This requires intentional action today, January 19, 2026, to secure our long-term standing.

Actionable Takeaways for Corporate Stewards:

- Formalize The ‘External Feed’: Create a highly funded, distinct channel for external, curated expertise that bypasses the AI’s primary synthesis layer. This must be managed like an independent venture capital arm, tasked with funding the ‘contrarian’ and the ‘unproven.’

- Invest in AI Fluency, Not Just Prompting: Organizations must upskill employees not just on *how* to prompt the AI, but on *how the AI works*, its limitations, and its training data provenance. This combats overreliance and fosters agency.

- Establish a ‘Right to Unlearn’ Policy: Address the growing legal and ethical challenge of data permanence in trained models. Being candid about what knowledge, once entered, can never be fully purged is necessary for building trust-based relationships with employees and regulators.

- Reaffirm Tacit Knowledge Value: Actively seek out and codify the wisdom gained from the unit that was divested. Assign senior personnel to interview departing staff, focusing on heuristics, gut feelings, and decision rationale, the information most vulnerable to AI capture and subsequent homogenization. The aim is to document *tacit–explicit knowledge dynamics* deliberately rather than leave them to chance.

Conclusion: The Weight of Accumulated Wisdom

The strategic divestiture was a decision made in the language of quarterly accounting—a language that rewards subtraction. The long-term assessment, however, will be written in the vocabulary of innovation velocity, cultural resilience, and critical thinking. We moved knowledge management from a distributed network of human experts to a centralized computational oracle. This shift has successfully optimized for speed, but it has introduced an existential risk: the quiet death of diversity of thought.

The campus legend—the building too heavy with knowledge to stand—was a metaphor for inefficient, unindexed accumulation. Our current risk is the opposite: a building that looks sleek and weightless but contains only homogenized, polished echoes of the past. True corporate stewardship now means accepting that efficiency is a treadmill, not a destination. Our primary duty is to ensure that the intellectual richness of our workforce—the very thing that allows us to invent the future, not just process the present—is not filtered out by our own predictive models.

This requires leaders to be deeply skeptical of tools that promise to solve knowledge complexity by simply hiding it. We must champion the difficult, slow, and necessary work of critical friction. The future of our competitive edge rests on valuing the hard-won insight over the instantaneously generated summary.

What is your organization doing today to actively fight against the intellectual homogeneity creeping in from centralized intelligence? Are you investing in the next generation of critical thinkers, or merely perfecting the prompts? Share your thoughts on how to govern knowledge when the knowledge itself becomes a black box.

For those wrestling with structuring knowledge transfer in this new environment, understanding best practices for creating resilient content foundations remains paramount. You can review recent insights on APQC Knowledge Management Trends for 2026 to compare your internal strategy against industry benchmarks. Furthermore, to understand the philosophical stakes of control over knowledge infrastructure, a review of commentary on corporate capture of knowledge is highly recommended, especially concerning publicly funded research and proprietary systems, as discussed on Schneier on Security. Finally, anyone building governance should study the growing risk landscape documented by the MIT AI Risk Repository in order to integrate human agency risks properly into their oversight protocols.