From Advantage to Acceleration: The Qubit Scaling Race

The 105-qubit count that just secured quantum advantage already feels almost quaint in hindsight. It’s the equivalent of the Wright Brothers’ first flight: a monumental achievement, but a long way from a transatlantic jetliner. The next logical, and frankly daunting, engineering challenge is to move from this modest array to systems with thousands, and ultimately millions, of high-quality, interconnected qubits. This is where the engineering reality hits hardest.

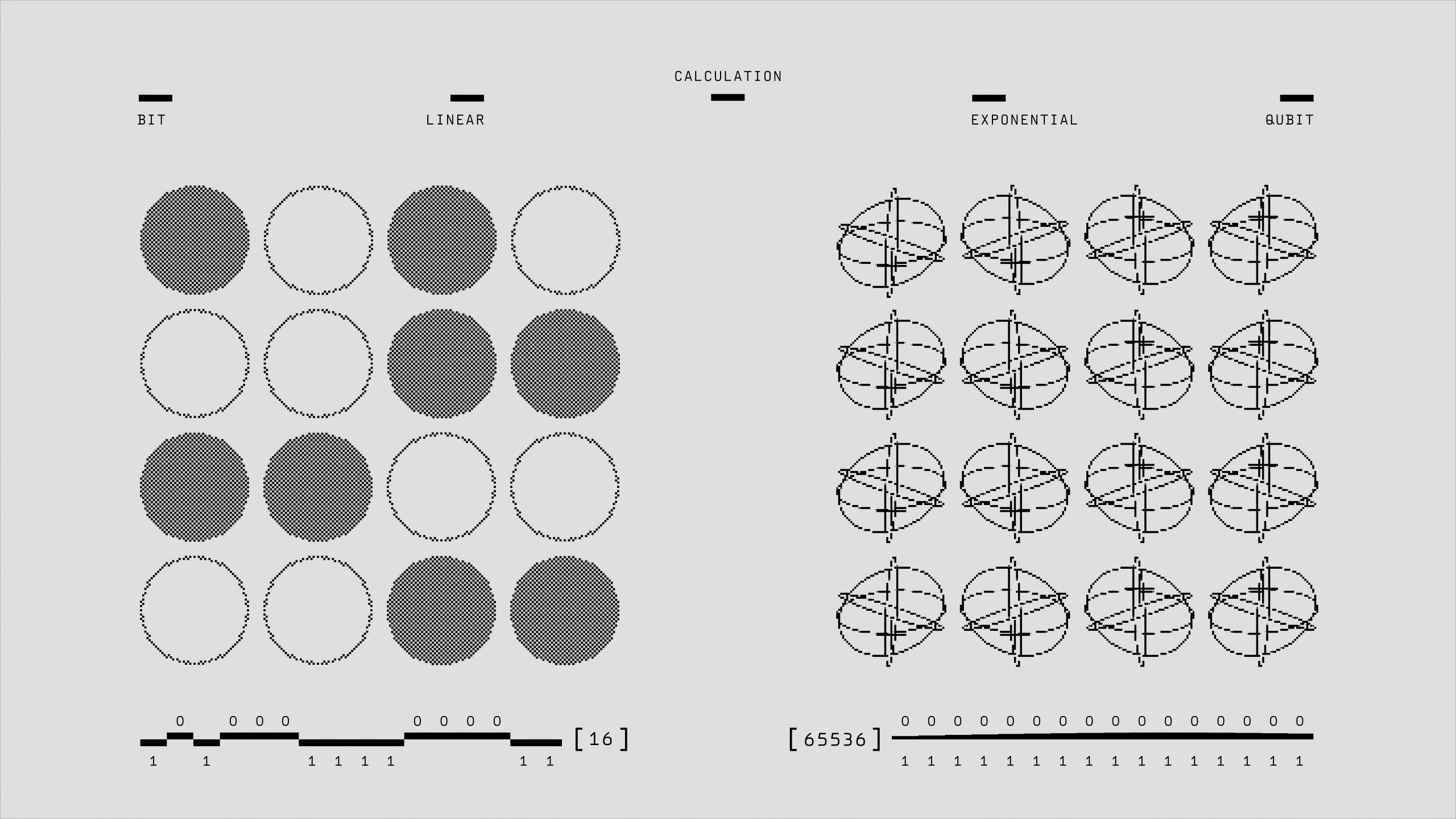

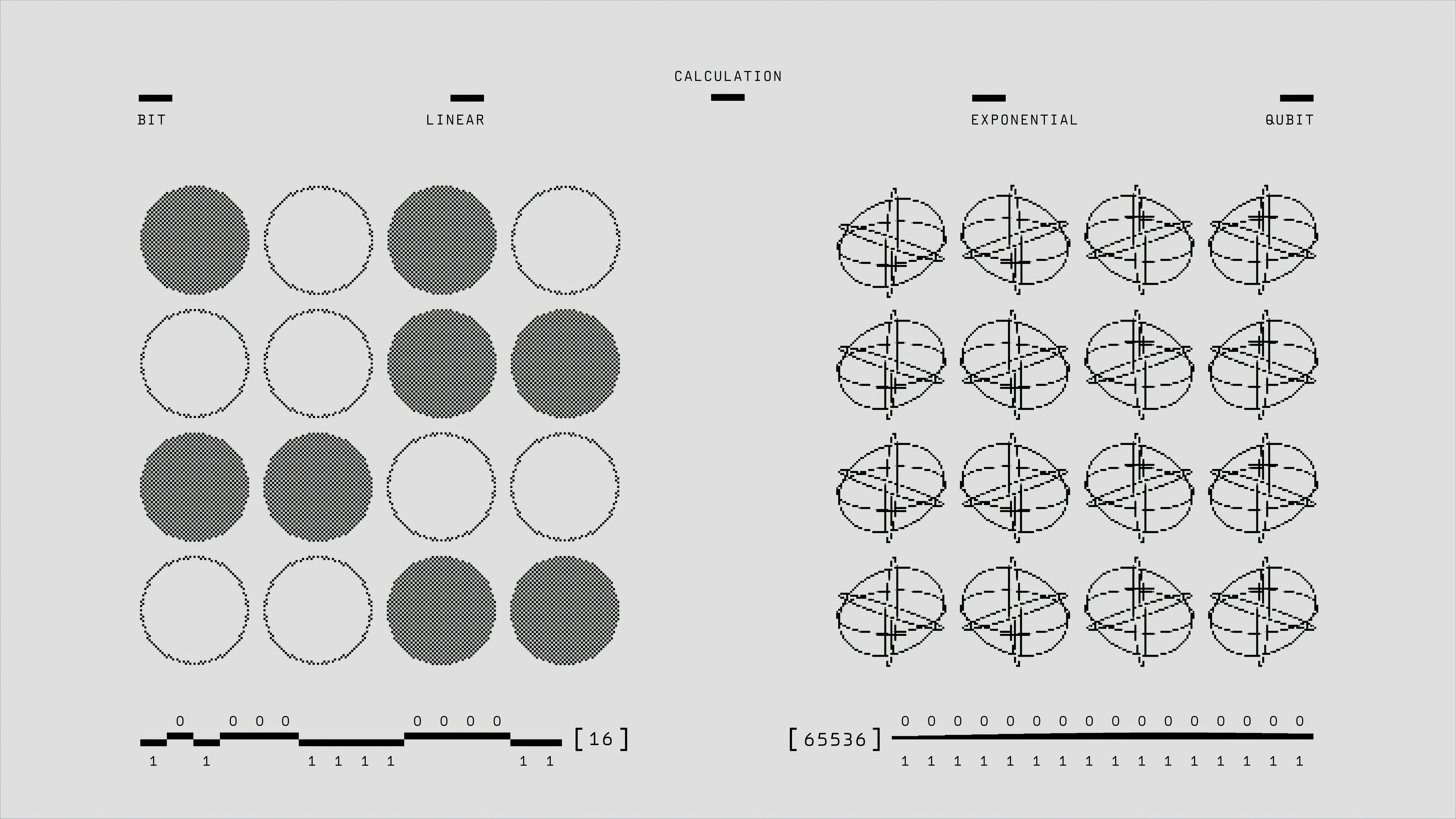

The Iron Law: Scaling Qubit Count While Maintaining Error Mitigation

It’s one thing to keep 105 qubits pristine enough to run a complex, verifiable algorithm like Quantum Echoes cite: 7. The Willow chip managed impressive fidelities (99.97% for single-qubit gates and 99.88% for entangling gates cite: 8), but sustaining those fidelities across an array of a million physical qubits while keeping the *logical* error rate low is a physics problem that is still being actively solved. We need fault-tolerant quantum computing, and that requires a massive overhead of physical qubits to encode a single, reliable logical qubit.
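To see why even 99.88% is not enough on its own, here is a minimal back-of-the-envelope sketch. It assumes every gate fails independently with the same probability, which is a simplification rather than a model of Willow or any real device, and the function names are purely illustrative.

```python
import math


def success_probability(gate_fidelity: float, gate_count: int) -> float:
    """Chance that every gate in a circuit succeeds.

    Assumption: gate errors are independent and identical, so the
    per-gate fidelities simply multiply.
    """
    return gate_fidelity ** gate_count


def gates_until_half(gate_fidelity: float) -> int:
    """Largest number of gates for which the success chance is still >= 50%."""
    return math.floor(math.log(0.5) / math.log(gate_fidelity))


if __name__ == "__main__":
    # Fidelities quoted in the text for single-qubit and entangling gates.
    for fidelity in (0.9997, 0.9988):
        print(fidelity, gates_until_half(fidelity),
              round(success_probability(fidelity, 1000), 3))
```

Under this toy model, a circuit built only from 99.88% entangling gates drops below a coin flip after a few hundred gates. That is the intuition behind spending many physical qubits on each logical qubit.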

This race isn’t just about ‘more’ qubits; it’s about ‘better’ qubits that talk to each other without constant hand-holding from error-correction protocols. Consider the recent milestone from IonQ, which demonstrated two-qubit gate fidelities of 99.99% (the elusive “four nines” threshold) and claims this drastically accelerates its roadmap to millions of qubits by 2030 cite: 13. This shows the industry is attacking the fidelity problem from multiple angles, which is good news, but the complexity of coordinating that many physical components, whether they are superconducting transmon qubits, trapped ions, or photonic systems, is immense.

Actionable Insight for the Near-Term Thinker: Don’t get lost in the qubit count hype. The real value right now is in mastering quantum error correction. Companies that can demonstrate robust, long-lived logical qubits—the next explicit milestone for Google cite: 8—will be the ones building the foundations for the next decade. Focus your attention on how organizations are managing logical qubit stability, not just physical count.

Establishing the Next Generation of Quantum Benchmarks

The “Quantum Echoes” test, rooted in measuring the out-of-time correlator (OTOC) to model physical phenomena like molecular structure cite: 12, has served its purpose beautifully. It proved that we can do *something* verifiable faster than classical machines, moving the field past the less-demanding “Random Circuit Sampling” benchmark that Willow previously tackled cite: 8. But the game has changed.

The new era demands benchmarks that are:

We are entering a period where the benchmark *is* the application. Instead of a simple synthetic test, the most trusted metrics will likely emerge from highly specific scientific simulations where the answer is *expected* to be difficult to calculate classically. The contest will shift from “Who has the most qubits?” to “Whose qubits can solve a problem that actually matters with the highest precision?” This competitive measurement evolution is crucial for directing the engineering efforts required to reach the million-qubit mark.

You can track this shift in testing methodology by following our deep dives into quantum algorithm development trends for 2026, where we analyze which specific simulation targets are replacing the older synthetic tests.

The Power Paradox: Quantum Computing’s Energy Footprint

This is where the conversation takes a fascinating, almost philosophical turn. The “two titans” (the major tech and quantum leaders whose recent exchange sparked this discussion) didn’t just talk qubits; they touched upon the existential resource underpinning all technological progress: energy. The sheer computational power being unlocked by quantum computing, and by its necessary partner, Artificial Intelligence, is arriving at a time when global energy demand is rocketing toward unprecedented levels.

It’s easy to think quantum computers are “green” because they are small. In the lab, a 256-qubit system like QuEra’s Aquila consumes less than 10 kilowatts, a fraction of the power used by the fastest classical supercomputers, which can easily draw more than 11 megawatts cite: 6. That initial energy efficiency argument holds true for *today’s* quantum machines performing narrow tasks. However, that’s not the whole story for the future landscape.
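For a sense of scale, the two figures quoted above can be put side by side. This is only a unit conversion using the bounds in the text (under 10 kilowatts, over 11 megawatts); it says nothing about how much useful work each machine does per watt.

```python
def power_ratio(supercomputer_mw: float, quantum_kw: float) -> float:
    """How many times more power the classical machine draws.

    Both inputs are the rough bounds quoted in the text, not measurements.
    """
    supercomputer_kw = supercomputer_mw * 1000  # 1 MW = 1000 kW
    return supercomputer_kw / quantum_kw


if __name__ == "__main__":
    # 11 MW supercomputer versus a 10 kW quantum system.
    print(power_ratio(supercomputer_mw=11, quantum_kw=10))
```

Because the quantum figure is an upper bound and the classical one a lower bound, the real gap for these two machines would be at least this large.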

The “grand technological futures,” such as building out immense quantum data centers or operating the massive computational clusters required for genuine artificial general intelligence (AGI) powered by quantum methods, will require energy infrastructure on a national scale. Furthermore, the classical components—the control electronics, the massive memory banks, the cryogenic cooling apparatus for scaling superconducting systems, and the classical support structure for hybrid quantum-classical computation—will all scale up in complexity and power draw.

We must acknowledge the sheer power demands of the infrastructure that surrounds the quantum processor. Next-generation data centers, built to support these massive hybrid workloads, are already pushing power densities toward 80–100 kilowatts per rack—five to ten times that of conventional deployments cite: 20. If the world is set to achieve net-zero targets, we cannot afford to solve our informational problems by creating a catastrophic energy problem. The two challenges are inextricably linked.

The Energy Equation: Data Centers, AI, and Megawatts

The problem scales beyond just the quantum hardware itself. As AI workloads continue to fuel the data center boom—with some facilities approaching 200+ megawatts of capacity cite: 20—the need for resilient, sustainable power becomes paramount. This isn’t just about being green; it’s about grid stability and cost management in an era of resource competition.
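A quick sketch makes these facility numbers more tangible. It combines the 200-megawatt capacity mentioned here with the 80–100 kilowatts per rack quoted earlier, and it assumes, unrealistically, that every watt goes to racks with nothing spent on cooling or power conversion. The function names are illustrative only.

```python
def racks_supported(facility_mw: int, rack_kw: int) -> int:
    """Upper bound on rack count if all facility power reached the racks.

    Assumption: zero overhead for cooling, networking, or conversion losses.
    """
    facility_kw = facility_mw * 1000  # 1 MW = 1000 kW
    return facility_kw // rack_kw


def implied_conventional_kw(dense_low_kw: float, dense_high_kw: float) -> tuple:
    """Conventional rack density implied by 'five to ten times' denser racks."""
    return dense_low_kw / 10, dense_high_kw / 5


if __name__ == "__main__":
    for rack_kw in (80, 100):
        print(rack_kw, "kW per rack ->", racks_supported(200, rack_kw), "racks")
    print("implied conventional range (kW):", implied_conventional_kw(80, 100))
```

Even under this generous assumption, a 200-megawatt site tops out at a few thousand high-density racks, which is why power, not floor space, becomes the limiting resource.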

This context underscores a critical reality: while quantum computing is solving complex informational problems (like modeling new battery chemistries!), the realization of these grand futures—like the proposed space-based clusters or planetary-scale simulations—relies on solving the most basic, terrestrial challenges. Global energy provisioning through vastly scaled renewable sources is not a side project; it is the non-negotiable prerequisite for the next quantum leap.

The Necessary Foundation: Solar Energy as the Quantum Engine

If quantum computing is the brain of the future technological civilization, then renewable energy—specifically solar—must be its robust, inexhaustible heart. The market sentiment in October 2025 reflects this urgency: the clean energy sector’s stocks have surged significantly year-to-date, often outpacing the steady growth of semiconductor giants cite: 19. Capital is following strategic necessity.

The advancements here are as rapid as those in silicon and entanglement. We are seeing a move past basic rooftop panels:

For any large-scale computational effort—whether classical AI training or a future quantum cloud—the strategy now involves building infrastructure near strong renewable sources or integrating on-site generation cite: 15. It’s a symbiotic relationship: quantum computing might help discover the next generation of solar cell materials, but solar energy is what will power the quantum computers that make those discoveries.

If you want to see how this plays out in real-time, check out the latest projections on global solar capacity additions for the remainder of 2025.

The Next Hurdles: Integration, Workflow, and The Human Factor

Achieving quantum advantage is fantastic, but it is useless if the results cannot be woven into the existing fabric of scientific and business workflows. The post-advantage era is fundamentally an integration challenge, not just a physics challenge.

Bridging the Computational Divide

The real work for the next five years, as suggested by some industry observers, involves making quantum computers indispensable partners to classical systems cite: 5. This means perfecting the software layer that decides which parts of a massive problem get sent to the delicate quantum processor and which parts remain on the robust classical CPU/GPU clusters.
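What might that decision layer look like? Below is a deliberately simple sketch. Everything in it is hypothetical: the `Subtask` type, the `QUANTUM_SUITED` categories, and the routing rule are assumptions made for illustration, not the API of any real quantum SDK or scheduler.

```python
from dataclasses import dataclass

# Hypothetical set of workload kinds we assume benefit from a quantum processor.
QUANTUM_SUITED = {"simulation", "sampling"}


@dataclass(frozen=True)
class Subtask:
    name: str
    kind: str            # e.g. "simulation", "io", "linear_algebra"
    qubits_needed: int   # 0 for purely classical work


def route(task: Subtask, available_qubits: int) -> str:
    """Send a subtask to the quantum processor only if it is a suitable
    kind of work and fits on the available hardware; otherwise keep it classical."""
    fits = 0 < task.qubits_needed <= available_qubits
    if task.kind in QUANTUM_SUITED and fits:
        return "quantum"
    return "classical"


def partition(tasks: list, available_qubits: int) -> dict:
    """Group subtask names by the backend they were routed to."""
    plan = {"quantum": [], "classical": []}
    for task in tasks:
        plan[route(task, available_qubits)].append(task.name)
    return plan


if __name__ == "__main__":
    workload = [
        Subtask("load_dataset", "io", 0),
        Subtask("molecule_energy", "simulation", 60),
        Subtask("huge_lattice", "simulation", 5000),
        Subtask("post_process", "linear_algebra", 0),
    ]
    print(partition(workload, available_qubits=105))
```

A production scheduler would also need to weigh queue times, error rates, and cost, but even this toy version shows the shape of the problem: the classical side stays the default, and the quantum processor is reserved for the narrow slice of work that both suits it and fits on it.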

This requires several developments:

Practical Tip: Start Hybridizing Your Strategy Today

If your organization is waiting for a million-qubit machine before engaging, you are already behind. The actionable advice for any technologist or strategist today is to begin mapping your most intractable optimization, simulation, or machine learning challenges onto a hybrid framework.

Think of it this way:

This methodical, three-step approach ensures that when the hardware catches up to the million-qubit dream, your team already has the software and workflow infrastructure ready to deploy it instantly.

Conclusion: Engineering Scale is the New Frontier

The verifiable achievement of quantum advantage, marked by the **Quantum Echoes** test on the Willow chip, confirms that we have crossed a threshold: quantum computation is no longer purely theoretical. As of October 23, 2025, the focus is relentlessly forward, defined by two immense, intertwined engineering objectives. First, the hardware must leap from hundreds to millions of qubits, a feat requiring fidelity rates that sound like science fiction today. Second, this computational expansion must be supported by an equally vast and radical transformation in our fundamental energy infrastructure, with utility-scale solar power leading the charge.

Key Takeaways for the Post-Advantage World:

The next chapter of computing won’t be written solely in superconducting circuits or ion traps; it will be written in the high-efficiency photovoltaics and grid storage solutions that keep the entire endeavor alive.

What do you think is the bigger bottleneck in the next five years: achieving million-qubit stability or securing the dedicated terawatt-scale renewable energy required to power them? Share your thoughts in the comments below!