Navigating Hurdles and Charting the Trajectory for Widespread Commercialization

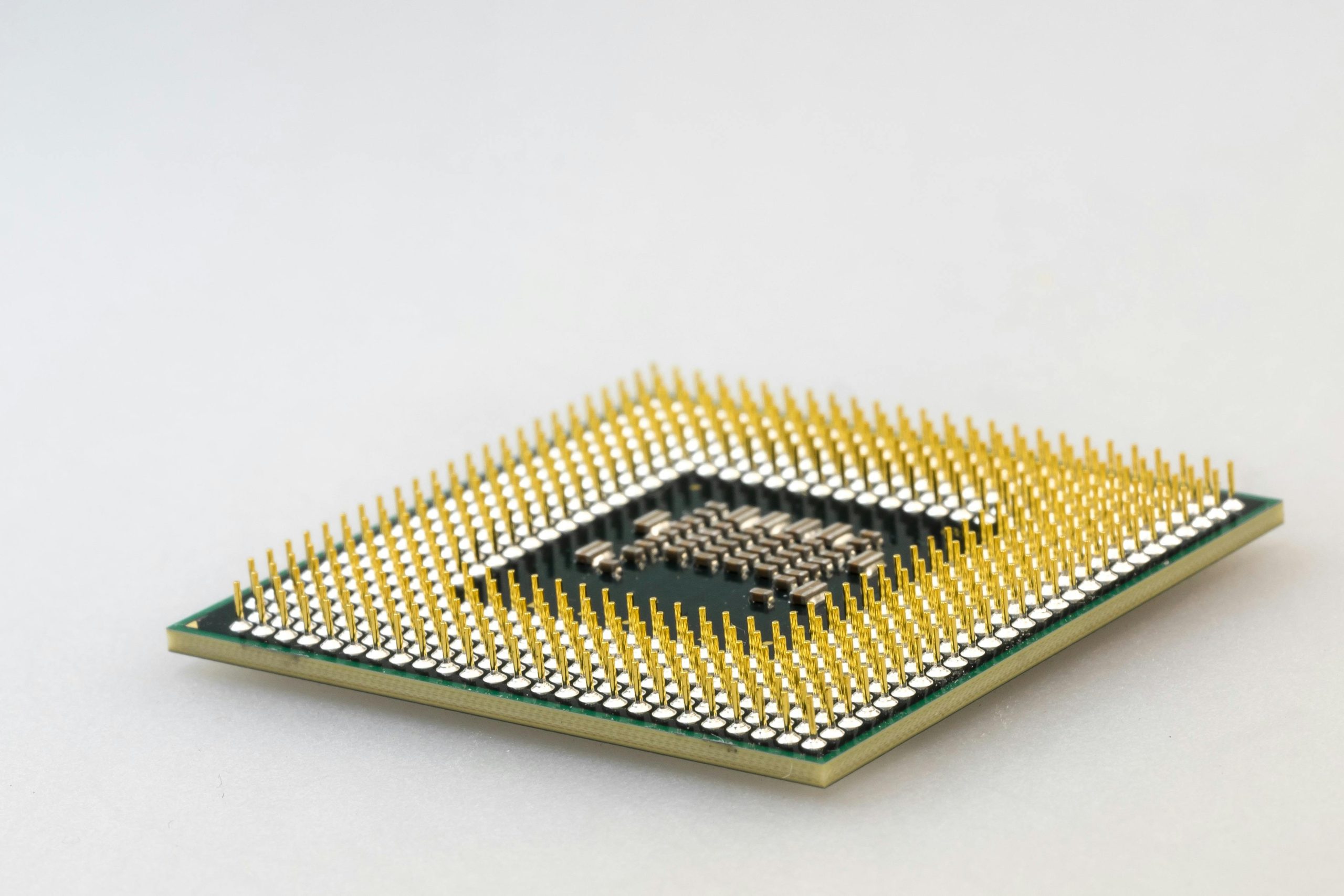

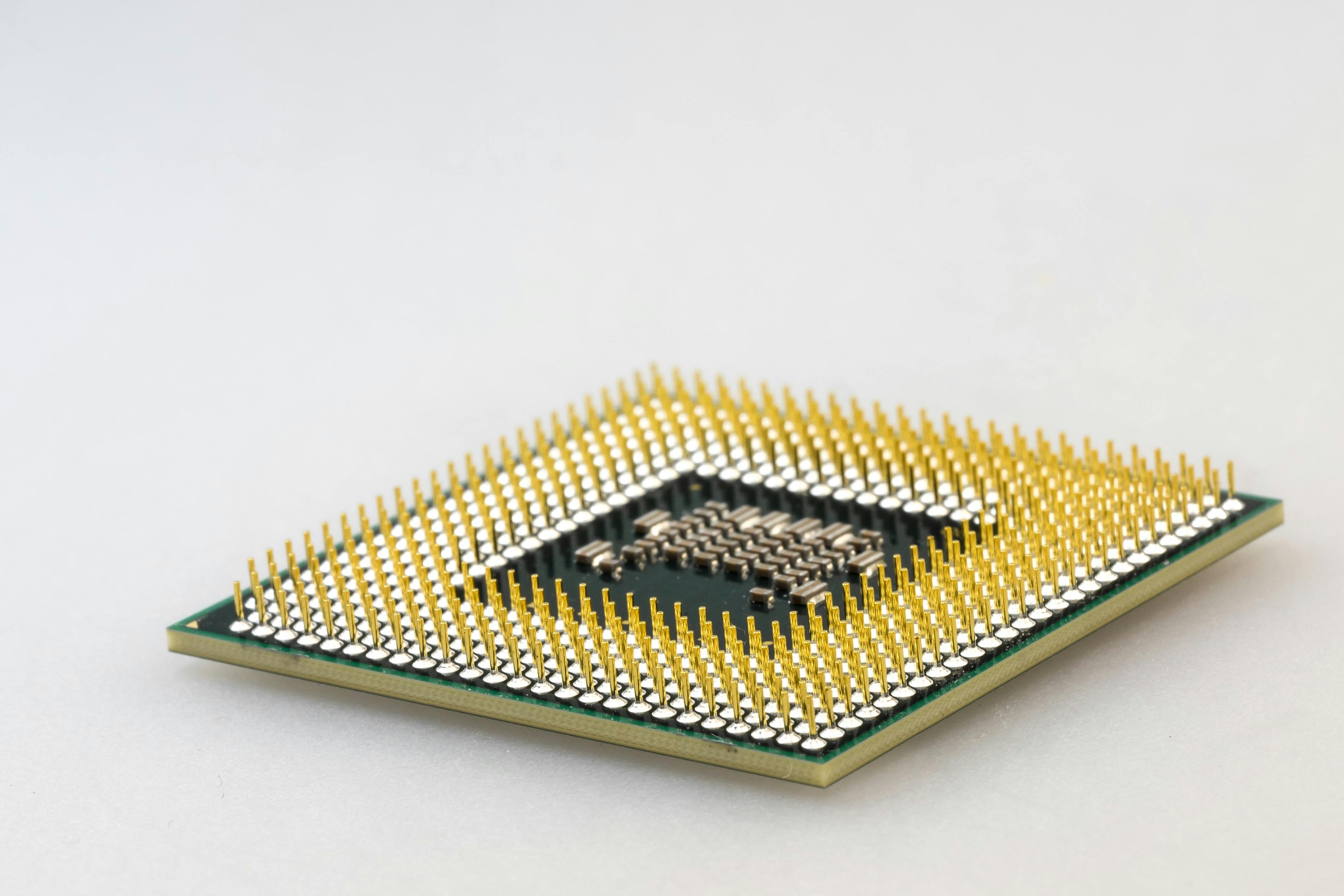

No foundational technology is perfect upon first demonstration. The path from a validated, high-performing *macro* to a widely deployed, standardized *System-on-Chip (SoC)* is paved with engineering challenges. Acknowledging these hurdles is crucial for understanding the technology’s realistic trajectory.

Addressing Challenges in Multibit Operation and Analog-to-Digital Conversion

The core strength of CIM, performing analog summation within the memory array itself, is also its most delicate point. The initial computation relies on summing analog electrical currents, so the fidelity of the final result depends entirely on how accurately this analog signal is measured and then converted back into the digital domain.
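To make the conversion bottleneck concrete, here is a minimal Python sketch of one common way multibit CIM can be organized. Inputs are applied one bit-plane at a time, each column's summed "current" is modeled as a plain number, an ideal uniform ADC digitizes it, and digital shift-and-add recombines the bit-planes. The bit-serial scheme, the nonnegative integer weights, the Gaussian noise term, and the names `adc` and `cim_dot` are illustrative assumptions, not the reported macro's actual design.

```python
import random

def adc(value, full_scale, bits):
    """Ideal uniform ADC: clamp to [0, full_scale], snap to one of 2**bits levels."""
    levels = 2 ** bits - 1
    clamped = min(max(value, 0.0), full_scale)
    code = round(clamped / full_scale * levels)
    return code * full_scale / levels

def cim_dot(inputs, weights, in_bits, adc_bits, noise_sigma=0.0, seed=0):
    """Bit-serial multibit dot product (assumed scheme, nonnegative int weights).

    For each input bit-plane, the cells whose input bit is 1 contribute their
    weight to the column sum (the analog step), optional Gaussian noise models
    analog non-ideality, an ADC digitizes the sum, and shift-and-add weights
    each bit-plane by 2**b.
    """
    rng = random.Random(seed)
    full_scale = float(max(sum(weights), 1))  # column sum when every input bit is 1
    total = 0.0
    for b in range(in_bits):
        analog = sum(w for x, w in zip(inputs, weights) if (x >> b) & 1)
        analog += rng.gauss(0.0, noise_sigma)
        total += adc(analog, full_scale, adc_bits) * (1 << b)
    return total

if __name__ == "__main__":
    x = [3, 7, 0, 15, 9]  # 4-bit activations
    w = [1, 2, 3, 4, 5]   # weights; column full scale = 15
    print(sum(a * b for a, b in zip(x, w)))      # exact: 122
    print(cim_dot(x, w, in_bits=4, adc_bits=4))  # 122.0
    print(cim_dot(x, w, in_bits=4, adc_bits=2))  # 120.0
```

In this toy case a 4-bit ADC lands every column sum exactly on a code, while a 2-bit ADC already loses accuracy, which is the trade-off between ADC resolution (and its area and energy) and result fidelity that the paragraph above describes.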

The current focus for refinement is centered on two areas:

Ongoing research in digital CIM must therefore refine the peripheral circuitry to tolerate the inherent variation of the analog domain, even as the digital control logic surrounding it grows more advanced.
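One generic way peripheral circuitry can absorb column-to-column variation is two-point calibration: drive each column with two known reference sums, fit a per-column slope and intercept, and invert that fit on every later readout. The sketch below assumes a purely linear gain/offset error model with made-up magnitudes; the article does not say how the macro's periphery compensates for variation, so treat `make_columns`, `calibrate`, and the reference values as hypothetical.

```python
import random

def make_columns(n, seed=1):
    """Hypothetical per-column non-idealities: a (gain, offset) pair per column."""
    rng = random.Random(seed)
    return [(1.0 + rng.gauss(0.0, 0.05), rng.gauss(0.0, 0.5)) for _ in range(n)]

def read_column(column, ideal_sum):
    """Raw readout of one column under an assumed linear gain/offset error."""
    gain, offset = column
    return gain * ideal_sum + offset

def calibrate(column, low_ref=0.0, high_ref=15.0):
    """Two-point calibration: measure two known sums and fit slope and intercept."""
    y_low = read_column(column, low_ref)
    y_high = read_column(column, high_ref)
    slope = (y_high - y_low) / (high_ref - low_ref)
    intercept = y_low - slope * low_ref
    return slope, intercept

def corrected_read(column, cal, ideal_sum):
    """Apply the inverse of the fitted line to a raw readout."""
    slope, intercept = cal
    return (read_column(column, ideal_sum) - intercept) / slope

if __name__ == "__main__":
    columns = make_columns(8)
    cals = [calibrate(c) for c in columns]
    for c, cal in zip(columns, cals):
        # raw value drifts per column; corrected value returns to the ideal 7.0
        print(round(read_column(c, 7.0), 3), round(corrected_read(c, cal, 7.0), 3))
```

A linear model is the simplest case; nonlinear or temperature-dependent variation would need more reference points or periodic recalibration.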

The Path Towards Larger Scale Integration and System-Level Optimization

The reported macro is a critical building block: a specific, validated unit demonstrating the feasibility of the entire concept. To achieve real industry impact, this unit must be integrated into something much larger, such as a multi-core SoC. This requires overcoming significant system-level hurdles:

The journey from this validated macro to a standardized, multi-gigabit processing unit involves intensive system-level optimization. It is a massive undertaking, but the potential rewards (vastly more capable, ultra-efficient, and physically secure edge AI) make this trajectory the most important one in specialized computing today.
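A first system-level question is how a workload larger than one macro gets mapped onto many of them. A common approach, sketched here, tiles the weight matrix into macro-sized blocks, lets each macro produce partial sums for its block, and accumulates those partials digitally across input tiles. The macro dimensions `MACRO_IN` and `MACRO_OUT` are hypothetical placeholders, and `macro_mvm` is an ideal stand-in for the analog array, not a model of the reported macro.

```python
MACRO_IN = 4   # hypothetical wordlines (input rows) per macro
MACRO_OUT = 3  # hypothetical output columns per macro

def macro_mvm(w_tile, x_tile):
    """Ideal stand-in for one macro: each output is a dot product over its input slice."""
    return [sum(w * x for w, x in zip(row, x_tile)) for row in w_tile]

def tiled_mvm(W, x):
    """Compute y = W @ x by splitting W into MACRO_OUT x MACRO_IN tiles.

    Partial sums from tiles that share output rows are accumulated digitally.
    Returns the result and the number of macro invocations (tiles) used.
    """
    n_out, n_in = len(W), len(x)
    y = [0] * n_out
    tiles = 0
    for o0 in range(0, n_out, MACRO_OUT):
        for i0 in range(0, n_in, MACRO_IN):
            w_tile = [row[i0:i0 + MACRO_IN] for row in W[o0:o0 + MACRO_OUT]]
            partial = macro_mvm(w_tile, x[i0:i0 + MACRO_IN])
            for k, p in enumerate(partial):
                y[o0 + k] += p
            tiles += 1
    return y, tiles

if __name__ == "__main__":
    W = [[(3 * r + c) % 5 - 2 for c in range(10)] for r in range(7)]  # 7 x 10 toy weights
    x = list(range(10))
    y, tiles = tiled_mvm(W, x)
    reference = [sum(a * b for a, b in zip(row, x)) for row in W]
    print(y == reference, tiles)  # True 9
```

Tiles along the input dimension each return partial sums for the same outputs, so the digital accumulation step, and the data movement between macros it implies, is one example of the system-level cost that grows as macros are composed into an SoC.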

Conclusion: Actionable Insights for the Next Wave of AI Deployment

The development of this spintronic CIM macro, with its industry-leading energy efficiency in TOPS/W (tera-operations per second per watt), nanosecond-level latency, and integrated hardware security via SRR-PUF and 2DHC-PE, signals a fundamental shift in what is possible for real-world AI. The key takeaway for architects, researchers, and engineers is this: the future of compute is localized, efficient, and inherently trusted.
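The security claim rests on physically unclonable functions (PUFs), which turn uncontrollable manufacturing variation into a chip-unique fingerprint. The specific SRR-PUF mechanism is not described here, so the sketch below models a generic comparison-based PUF instead: each chip gets random per-cell values, a challenge selects pairs of cells, and each response bit records which cell of a pair reads higher. It then computes the two standard quality metrics: intra-chip Hamming distance (re-reading the same chip, ideally near 0) and inter-chip Hamming distance (two different chips, ideally near 0.5). The Gaussian variation, the noise level, and all function names are assumptions chosen for illustration.

```python
import random

def make_chip(n_cells, seed):
    """Hypothetical chip: each cell gets a fixed, random manufacturing offset."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n_cells)]

def respond(chip, challenge, read_noise, rng):
    """One response bit per (i, j) pair: 1 if cell i reads higher than cell j."""
    return [
        int(chip[i] + rng.gauss(0.0, read_noise) > chip[j] + rng.gauss(0.0, read_noise))
        for i, j in challenge
    ]

def hamming_fraction(a, b):
    """Fraction of positions where two equal-length bit lists differ."""
    return sum(x != y for x, y in zip(a, b)) / len(a)

if __name__ == "__main__":
    n = 1024
    challenge = [(k, k + 1) for k in range(0, n, 2)]  # 512 disjoint cell pairs
    rng = random.Random(42)
    chip_a, chip_b = make_chip(n, seed=1), make_chip(n, seed=2)
    r_a1 = respond(chip_a, challenge, 0.05, rng)
    r_a2 = respond(chip_a, challenge, 0.05, rng)  # same chip, read again
    r_b = respond(chip_b, challenge, 0.05, rng)
    print("intra-chip HD:", hamming_fraction(r_a1, r_a2))  # small
    print("inter-chip HD:", hamming_fraction(r_a1, r_b))   # near 0.5
```

A low intra-chip distance means the fingerprint is reproducible on the same device, while an inter-chip distance near 0.5 means one chip's responses reveal essentially nothing about another's.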

Key Takeaways and Actionable Insights

To successfully leverage this next generation of hardware, focus your planning around these actionable points:

This technology forces us to re-evaluate our assumptions about power and trust at the edge. The question is no longer *if* we can deploy complex AI everywhere, but *how* we will secure and power it.

What are the biggest security blind spots you see in your current edge AI deployments? Share your thoughts on how hardware-embedded security like PUFs will change the risk landscape in the comments below!

For more in-depth analysis on the current state of silicon efficiency, check out the latest industry reports on high-performance computing efficiency goals and the ongoing work in system-level optimization for AI workloads.